This week I focused on improving our comparison algorithm logic and exploring dynamic time warping (DTW) as a post-processing step. Regarding the frame-by-frame comparison algorithm: last week I built an algorithm that takes in two videos and outputs whether the dance moves are similar. However, the comparison was producing too many false positives. I debugged this with Danny to find the underlying problems, and I found that some of the thresholds in the comparison logic were too high. After tweaking them and testing the changes on additional videos, the comparisons improved, but they are still not 100% accurate.
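To make the threshold issue concrete, here is a minimal sketch of a frame-by-frame comparison. It is illustrative only, not our actual implementation. It assumes each frame is a list of (x, y) pose landmarks in a consistent order; the centroid/RMS normalization and the `frame_threshold` and `min_match_ratio` values are hypothetical placeholders.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Frame = Sequence[Point]  # one (x, y) per pose landmark, same order every frame


def normalize(frame: Frame) -> List[Point]:
    """Center landmarks on their centroid and scale to unit RMS radius so that
    camera distance and dancer position matter less (an assumed normalization)."""
    n = len(frame)
    cx = sum(x for x, _ in frame) / n
    cy = sum(y for _, y in frame) / n
    centered = [(x - cx, y - cy) for x, y in frame]
    scale = math.sqrt(sum(x * x + y * y for x, y in centered) / n) or 1.0
    return [(x / scale, y / scale) for x, y in centered]


def frame_distance(a: Frame, b: Frame) -> float:
    """Mean Euclidean distance between corresponding normalized landmarks."""
    na, nb = normalize(a), normalize(b)
    return sum(math.dist(p, q) for p, q in zip(na, nb)) / len(na)


def videos_match(ref: Sequence[Frame], user: Sequence[Frame],
                 frame_threshold: float = 0.2, min_match_ratio: float = 0.8) -> bool:
    """A frame 'matches' when its distance is below frame_threshold; the videos
    match when at least min_match_ratio of the compared frames do. Lowering
    frame_threshold makes matches stricter, i.e. fewer false positives.
    Both defaults are placeholders, not tuned values."""
    pairs = list(zip(ref, user))  # only the overlapping frames are compared
    if not pairs:
        return False
    matched = sum(frame_distance(a, b) < frame_threshold for a, b in pairs)
    return matched / len(pairs) >= min_match_ratio
```

Normalizing before comparing means a dancer who stands farther from the camera is not penalized for body size alone, so the threshold only has to capture differences in pose.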

Given this, I began working on the dynamic time warping algorithm to see how it could improve both our overall performance and the feedback we give the user. I spent some time planning how we would implement DTW and how we would use it to provide useful feedback. I broke the problem down so that it not only measures similarity but also highlights specific areas for improvement, such as timing, posture, or limb positioning, using specific MediaPipe landmark points. I have started the implementation but am currently running into some bugs, which I will fix next week.

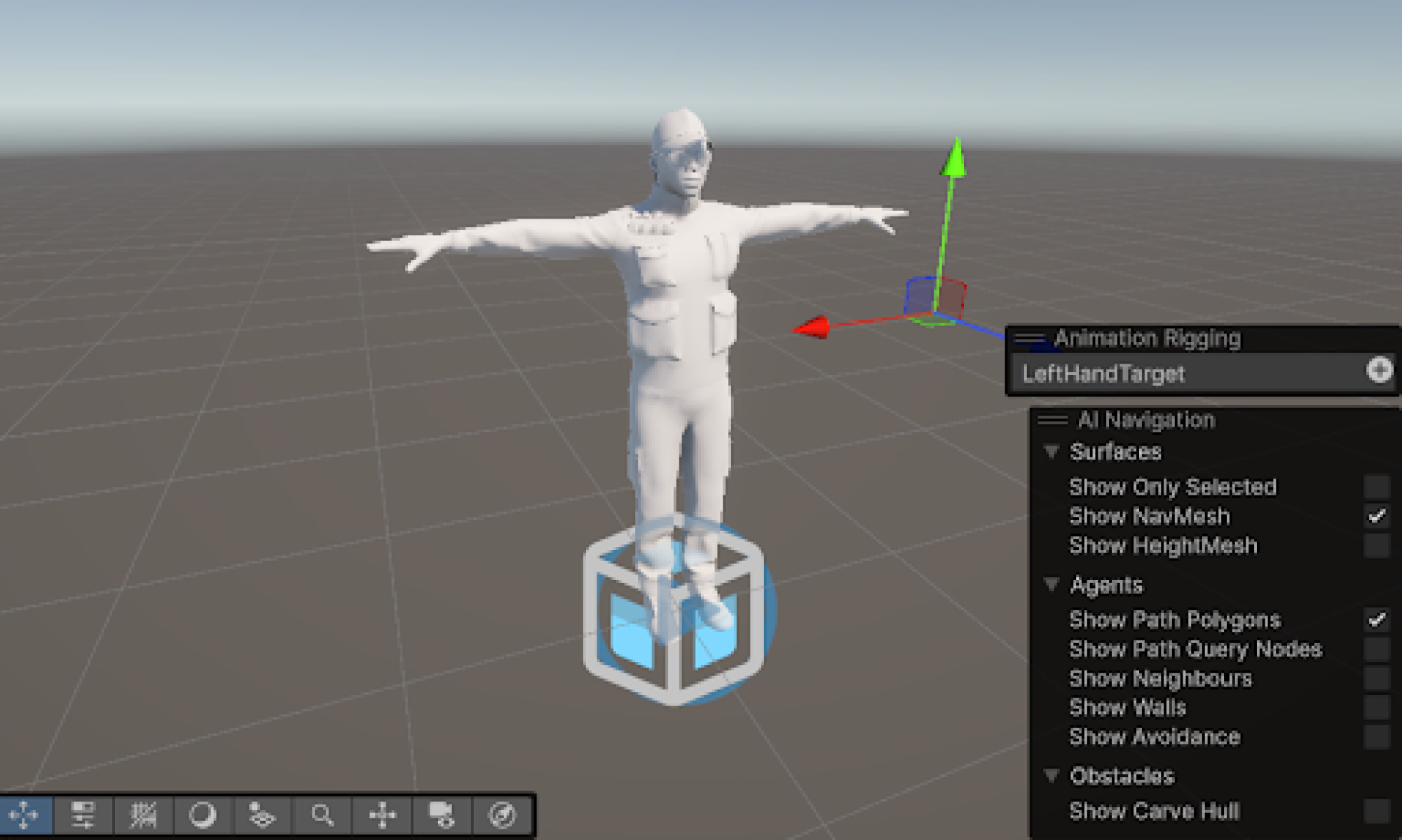
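Below is a minimal sketch of standard DTW over pose sequences, plus one possible way to turn the alignment into per-body-part and timing feedback. It is not the implementation in progress. Frames are assumed to be lists of already-normalized (x, y) landmarks; the `BODY_PARTS` grouping (built from MediaPipe Pose indices for shoulders, elbows, wrists, hips, knees, and ankles) and the `timing_offset_frames` metric are hypothetical, and the timing metric assumes both videos share a frame rate.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]
Frame = Sequence[Point]  # (x, y) per landmark, assumed already normalized

# Hypothetical grouping of MediaPipe Pose landmark indices into body parts.
BODY_PARTS: Dict[str, List[int]] = {
    "left_arm": [11, 13, 15],   # shoulder, elbow, wrist
    "right_arm": [12, 14, 16],
    "left_leg": [23, 25, 27],   # hip, knee, ankle
    "right_leg": [24, 26, 28],
}


def frame_cost(a: Frame, b: Frame) -> float:
    """Mean Euclidean distance between corresponding landmarks."""
    return sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)


def dtw(ref: Sequence[Frame], user: Sequence[Frame]) -> Tuple[float, List[Tuple[int, int]]]:
    """Classic O(n*m) DTW. Returns the accumulated cost and the warping
    path as (ref_index, user_index) pairs from start to end."""
    n, m = len(ref), len(user)
    acc = [[math.inf] * (m + 1) for _ in range(n + 1)]
    acc[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step_cost = frame_cost(ref[i - 1], user[j - 1])
            acc[i][j] = step_cost + min(acc[i - 1][j - 1], acc[i - 1][j], acc[i][j - 1])

    # Backtrack from (n, m), preferring the diagonal move on ties.
    path: List[Tuple[int, int]] = []
    i, j = n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        _, move = min((acc[i - 1][j - 1], 0), (acc[i - 1][j], 1), (acc[i][j - 1], 2))
        if move == 0:
            i, j = i - 1, j - 1
        elif move == 1:
            i -= 1
        else:
            j -= 1
    path.reverse()
    return acc[n][m], path


def feedback(ref: Sequence[Frame], user: Sequence[Frame], path: List[Tuple[int, int]],
             parts: Dict[str, List[int]] = BODY_PARTS) -> Dict[str, float]:
    """Per-body-part mean landmark error over the aligned frame pairs, plus a
    simple timing metric: mean |ref_index - user_index| along the path."""
    report: Dict[str, float] = {}
    for name, idxs in parts.items():
        errs = [math.dist(ref[i][k], user[j][k]) for i, j in path for k in idxs]
        report[name] = sum(errs) / len(errs)
    report["timing_offset_frames"] = sum(abs(i - j) for i, j in path) / len(path)
    return report
```

Because DTW lets one user frame align with several reference frames (and vice versa), a dancer who is slightly late can still get a low alignment cost, while the timing metric reports the lag separately and the per-part errors point at which limbs are off.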

I also worked with Rex to begin incorporating the comparison logic into the Unity game. We met to catch each other up on our progress and to plan how we will integrate our parts. We needed to modify a few things, such as the JSON formatting, to make sure the two sides were compatible. For next week, a key goal is to integrate our codebases more fully so that we can have a successful interim demo the week after.
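As an illustration of the kind of JSON formatting that matters for the Unity handoff, here is a sketch of one possible shape for the comparison output. The class and field names (`ComparisonResult`, `PartScore`, `similar`, `dtw_cost`, `timing_offset_frames`, `parts`) are hypothetical and not the format we settled on. The constraint it illustrates is real: Unity's built-in `JsonUtility` does not deserialize a bare top-level array or a dictionary, so the results are wrapped in a single object whose list holds flat records.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class PartScore:
    part: str     # e.g. "left_arm" (hypothetical naming)
    error: float  # mean landmark error for that body part


@dataclass
class ComparisonResult:
    similar: bool
    dtw_cost: float
    timing_offset_frames: float
    parts: List[PartScore] = field(default_factory=list)


def to_unity_json(result: ComparisonResult) -> str:
    """Serialize as one top-level object (no bare arrays, no dict-valued
    fields), a shape JsonUtility can map onto serializable C# classes."""
    return json.dumps(asdict(result))  # asdict also converts nested PartScores
```

On the Unity side, such a string could be read with `JsonUtility.FromJson<ComparisonResult>(text)` into C# classes marked `[System.Serializable]` whose public field names match these keys.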