This week I focused on developing the comparison algorithm. Now that we have code to normalize the pose points across different camera angles, we can build a more fleshed-out comparison engine that determines whether two videos contain the same dance moves.

I spent most of my time this week writing a script that takes in two videos (a reference video and a user video) and checks whether they match using frame-to-frame comparisons. In the final project, the user video will be replaced with real-time video processing, but for testing I made it possible to upload two videos. I used two videos of my partner Danny performing the same dance moves from different camera angles and with some differences in timing.

For each video, I extracted the landmarks, got the pose data, and normalized it to account for any differences in camera pose. I then parsed the resulting JSONs and checked whether the poses at each pair of corresponding frames were similar enough. Finally, I built a side-by-side comparison UI that shows which frames are similar and which are different. The comparison works well for the most part, but I found some false positives, so I adjusted the thresholds, which improved the results.
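The per-frame check can be sketched roughly as follows. This is a minimal sketch, not our actual code: it assumes each frame's pose JSON has already been parsed into a dict mapping joint names (hypothetical keys such as `left_hip`) to 2D coordinates, and it substitutes a simple translation-and-scale normalization for our camera-angle normalization. The mean-joint-distance metric and the `threshold=0.15` default are also assumptions chosen only for illustration.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical per-frame layout: joint name -> (x, y), e.g. one frame of pose JSON.
Pose = Dict[str, Tuple[float, float]]


def normalize_pose(pose: Pose) -> Pose:
    """Move the hip midpoint to the origin and divide by torso length.

    Simplified stand-in: this removes position and body-size differences
    but does NOT undo rotation caused by a different camera angle.
    """
    hip_x = (pose["left_hip"][0] + pose["right_hip"][0]) / 2
    hip_y = (pose["left_hip"][1] + pose["right_hip"][1]) / 2
    sh_x = (pose["left_shoulder"][0] + pose["right_shoulder"][0]) / 2
    sh_y = (pose["left_shoulder"][1] + pose["right_shoulder"][1]) / 2
    torso = math.hypot(sh_x - hip_x, sh_y - hip_y)
    if torso == 0:
        raise ValueError("degenerate pose: zero torso length")
    return {
        name: ((x - hip_x) / torso, (y - hip_y) / torso)
        for name, (x, y) in pose.items()
    }


def pose_distance(a: Pose, b: Pose) -> float:
    """Mean Euclidean distance over joints present in both normalized poses."""
    shared = a.keys() & b.keys()
    if not shared:
        return math.inf
    return sum(math.dist(a[j], b[j]) for j in shared) / len(shared)


def compare_videos(ref: List[Pose], user: List[Pose], threshold: float = 0.15) -> List[bool]:
    """Per-frame match flags (True = match); zip() stops at the shorter video.

    The default threshold is hypothetical; it is the knob to tune against
    false positives.
    """
    return [
        pose_distance(normalize_pose(r), normalize_pose(u)) <= threshold
        for r, u in zip(ref, user)
    ]
```

Lowering `threshold` makes matching stricter, reducing false positives at the cost of more false negatives, which is the kind of threshold adjustment described above.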

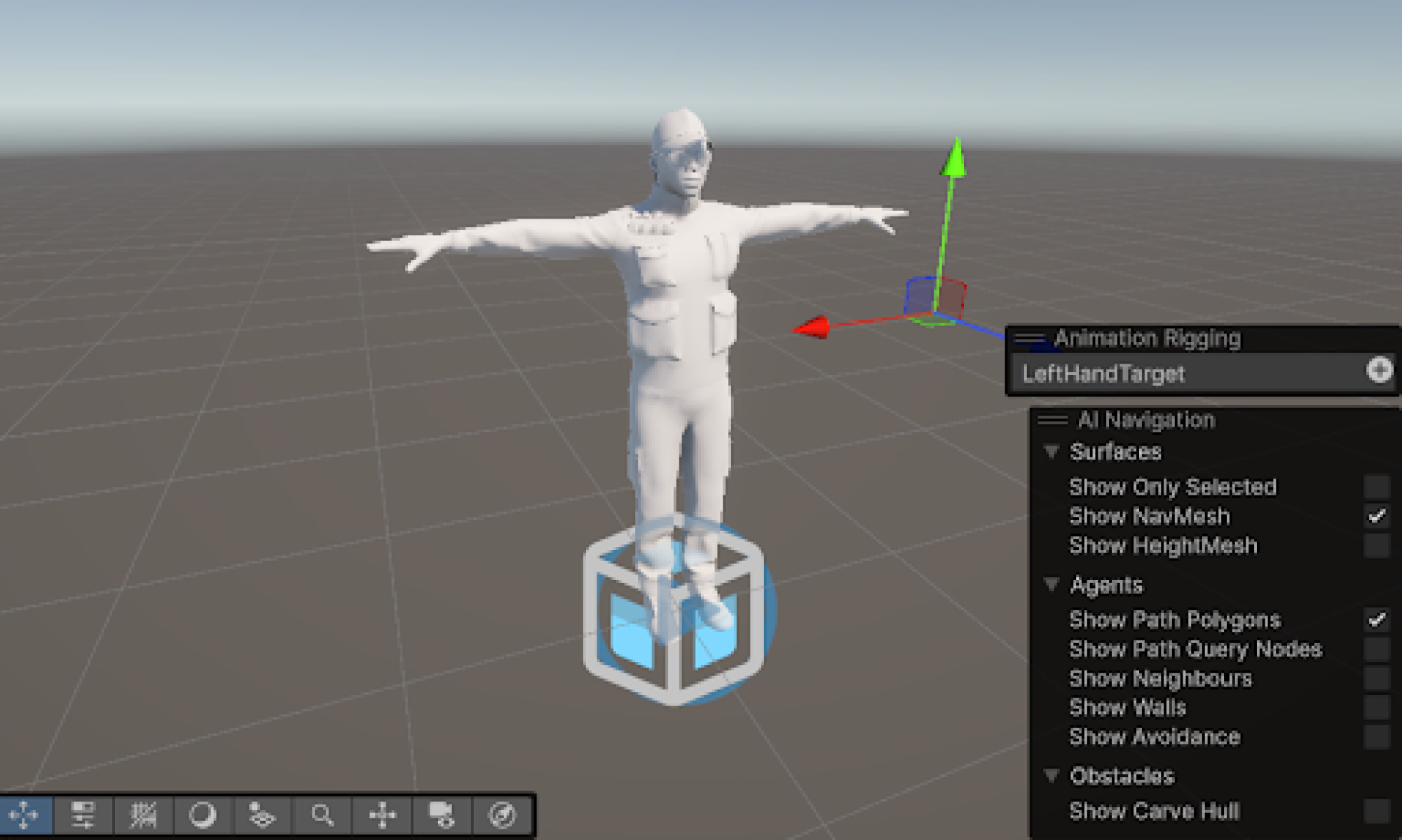

Overall, our progress is on schedule. The next step is integrating this logic into the Unity side rather than keeping it only in the server-side code. I will also need to change the input logic to take a webcam feed and a reference video instead of two uploaded videos, which should be straightforward. The biggest remaining task is testing the system more thoroughly with more data points and videos, so next week we will focus on that testing and begin working on our DTW post-video analysis engine.
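Since the DTW engine has not been built yet, the following is only a textbook dynamic time warping sketch, not our design. DTW aligns two sequences that perform the same motion at different speeds, which is exactly where strict frame-to-frame comparison struggles. The `dist` callback is an assumption: in practice it would be a per-frame distance on normalized pose data, but here it is left generic so the sketch is self-contained.

```python
import math
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def dtw_distance(a: Sequence[T], b: Sequence[T], dist: Callable[[T, T], float]) -> float:
    """Classic O(len(a) * len(b)) dynamic time warping cost between two sequences."""
    n, m = len(a), len(b)
    # cost[i][j] = cheapest alignment of the first i items of a with the first j of b
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = dist(a[i - 1], b[j - 1])
            cost[i][j] = d + min(
                cost[i - 1][j],      # a advances while b holds its frame
                cost[i][j - 1],      # b advances while a holds its frame
                cost[i - 1][j - 1],  # both advance together
            )
    return cost[n][m]


# Toy 1-D example: the "user" performs the same sequence more slowly.
ref = [0, 1, 2, 3]
slow_user = [0, 0, 1, 2, 2, 3]
print(dtw_distance(ref, slow_user, lambda x, y: abs(x - y)))  # 0.0
```

The quadratic cost table is fine for short clips; for full-length videos a windowed variant (for example, a Sakoe-Chiba band) would likely be needed to keep the post-video analysis fast.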

I couldn’t upload sharper pictures because of the maximum file upload size, so apologies for any blurriness in the following images.

Match

No Match