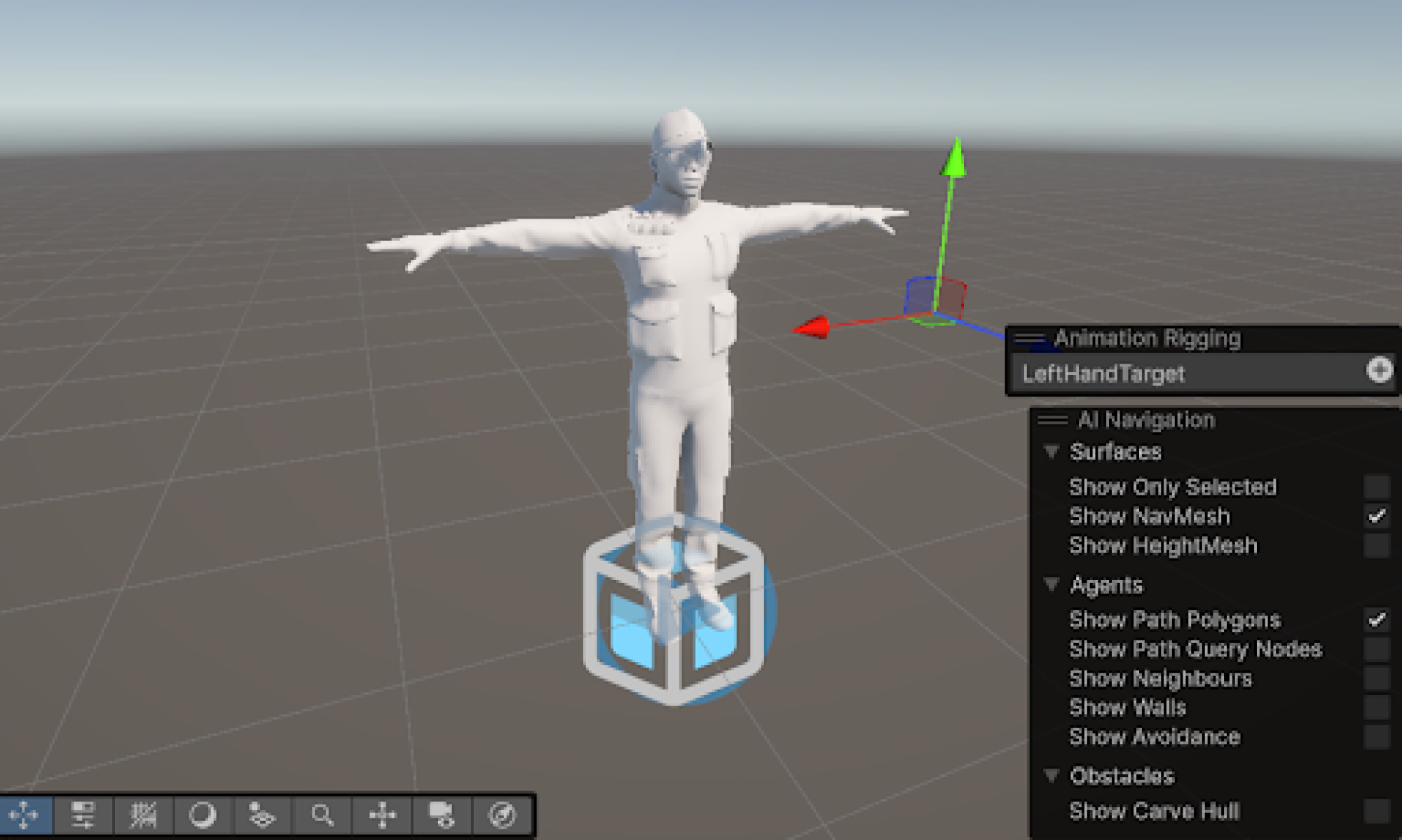

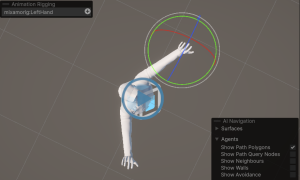

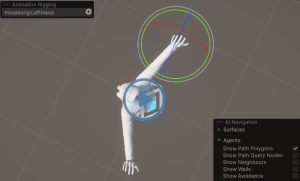

This week, my most important progress was getting both real-time and reference .mp4 video coordinates working with the avatar. I also spent time tuning the physics of joint movements and improving the accuracy with which OpenCV and MediaPipe's 2D coordinates are mapped onto the 3D avatar. This was a crucial step in making the motion tracking more precise and realistic, so that the avatar's movements correctly reflect the detected body positions. Additionally, I expanded the GUI functionality within Unity by implementing the ability to select a reference .mp4 video for analysis. This feature lets users load pre-recorded videos, which the system processes by extracting JSON-based pose data from the .mp4 file. As a result, the dance coach can now analyze both live webcam input and pre-recorded dance performances, which significantly improves its usability, as previously mentioned. I have attached a real-time demo video below to showcase the system's current capabilities in tracking and analyzing movements. Debugging and refining the motion tracking pipeline took considerable effort, but this milestone was essential to making the system's core functionality robust and scalable.
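To illustrate the kind of mapping and JSON extraction described above, here is a minimal Python sketch. It is not the project's actual code: the function names, the `scale` parameter, the JSON layout, and the depth sign convention are all assumptions made for illustration. It only assumes that input landmarks are normalized image coordinates, with x and y in [0, 1] and the origin at the top-left of the frame.

```python
import json


def landmark_to_avatar(x, y, z=0.0, scale=2.0):
    """Map one normalized landmark to a centered, y-up coordinate.

    Hypothetical helper: `scale` and the sign flip on z are assumptions,
    not values taken from the project.
    """
    return (
        (x - 0.5) * scale,  # center horizontally around 0
        (0.5 - y) * scale,  # image y grows downward, avatar y grows upward
        -z * scale,         # assumed depth convention
    )


def frames_to_json(frames, fps):
    """Serialize per-frame landmark lists into a JSON string with timestamps.

    `frames` is a list of frames; each frame is a list of (x, y, z) tuples.
    The "fps" / "frames" / "t" / "joints" keys are a hypothetical layout.
    """
    payload = {
        "fps": fps,
        "frames": [
            {
                "t": i / fps,  # timestamp of this frame in seconds
                "joints": [list(landmark_to_avatar(*lm)) for lm in frame],
            }
            for i, frame in enumerate(frames)
        ],
    }
    return json.dumps(payload)
```

Centering and flipping the y axis before export keeps the consumer side simple, since the avatar code can then apply the values without knowing anything about image coordinates.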

(THIS LINK TO DEMO VIDEO EXPIRES ON MONDAY 3/15, please let me know if new link needed)

I am on track with the project timeline, as this was a major development step that greatly improves the system's versatility. My main focus remains refining the coordinate transformations, adjusting physics-based joint movements for smoother tracking, and enhancing the UI experience. Debugging the .mp4 processing workflow and ensuring proper synchronization between the extracted JSON pose data and Unity's animation system were also key challenges that I successfully addressed. Looking ahead, my goal for the upcoming week is to refine the UI pipeline further so that the project becomes a polished, standalone application. This includes improving the user interface for seamless video selection, enhancing the visualization of movement analysis, and optimizing performance for smooth real-time feedback. With these improvements, the project is on a solid path toward completion, and I am confident in achieving the remaining milestones on schedule.
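One way to think about keeping pre-recorded pose data in step with playback is to look up the pose by elapsed time rather than by frame count, interpolating between the two nearest frames. The Python sketch below shows that idea under stated assumptions: `sample_pose` is a hypothetical helper, frames are assumed to be evenly spaced at `fps`, and this is not necessarily how the Unity side of the project handles synchronization.

```python
def sample_pose(frames, fps, t):
    """Return joint positions at time t (seconds), linearly interpolated.

    `frames` is a list of frames; each frame is a list of (x, y, z) tuples.
    Times outside the recording are clamped to the first or last frame.
    """
    if not frames:
        raise ValueError("no frames to sample")

    # Fractional frame index, clamped to the valid range.
    pos = min(max(t * fps, 0.0), len(frames) - 1)
    i = int(pos)                      # frame at or before t
    j = min(i + 1, len(frames) - 1)   # next frame (or the same one at the end)
    a = pos - i                       # blend weight between the two frames

    return [
        tuple(p + (q - p) * a for p, q in zip(joint_i, joint_j))
        for joint_i, joint_j in zip(frames[i], frames[j])
    ]
```

Sampling by time means the avatar stays aligned with the video even when the render rate differs from the rate at which the poses were extracted.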