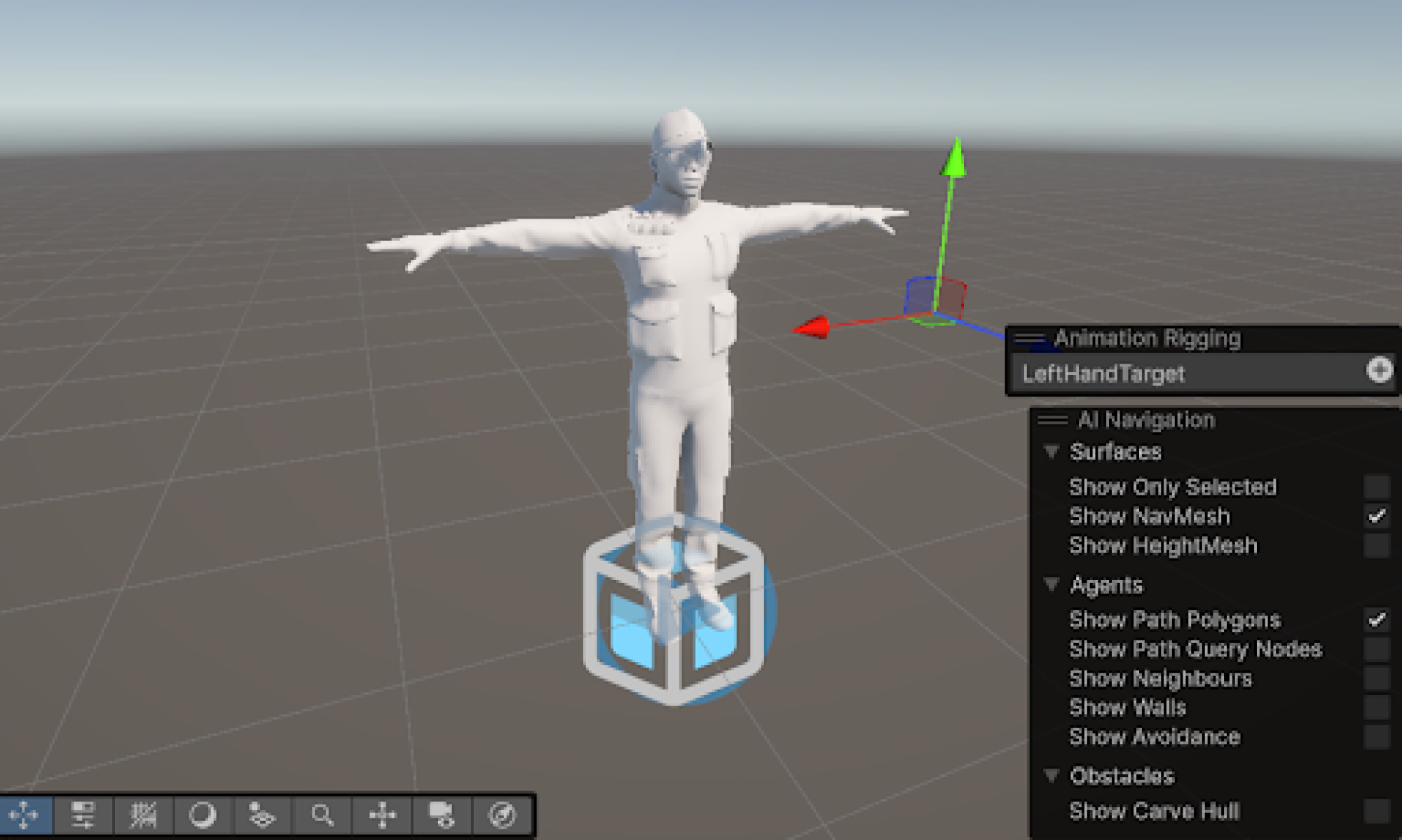

This week, I spent a lot of time refining the dance coach's joint rotations and movements in Unity so that they feel as natural and responsive as possible, which involves applying physics, physiology, and rotation math. One focus this week was adding logic to recolor the avatar's mesh based on movement accuracy, giving users clear visual feedback on which parts of their body need adjustment. I also worked with Danny and Akul on integrating the comparison algorithm, which evaluates the user's pose against the reference movements. A major challenge was keeping the frame rate high while ensuring that the physics and physiological equations accurately represent real-world motion. Finding the right balance between performance and accuracy took a lot of trial and error, but it's starting to come together. I worked closely with them to test and debug these changes and confirm that the system works correctly for basic movements.
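To illustrate how pose comparison and mesh recoloring can fit together, here is a minimal sketch. It assumes each joint is represented by a bone direction vector, that per-joint error is the angle between the user's and the reference bone directions, and that color blends linearly from green (accurate) to red (off by `max_error_deg` or more). The function names, joint names, and the 45° threshold are hypothetical and are not the team's actual comparison algorithm. Applying the resulting colors to the mesh in Unity (for example through a material) is not covered here.

```python
import math
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]
RGB = Tuple[float, float, float]


def angle_between(a: Vec3, b: Vec3) -> float:
    """Angle in degrees between two bone direction vectors (hypothetical error metric)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    if na == 0 or nb == 0:
        return 0.0  # degenerate bone: treat as no measurable error
    # Clamp to [-1, 1] so floating-point drift can't break acos.
    cos = max(-1.0, min(1.0, dot / (na * nb)))
    return math.degrees(math.acos(cos))


def error_to_color(error_deg: float, max_error_deg: float = 45.0) -> RGB:
    """Linearly blend green (0 error) to red (error >= max_error_deg). Threshold is an assumption."""
    t = min(error_deg / max_error_deg, 1.0)
    return (t, 1.0 - t, 0.0)


def color_joints(
    user: Dict[str, Vec3],
    reference: Dict[str, Vec3],
    max_error_deg: float = 45.0,
) -> Dict[str, RGB]:
    """Map each joint tracked in both poses to a feedback color."""
    colors: Dict[str, RGB] = {}
    for joint, ref_dir in reference.items():
        if joint not in user:
            continue  # joint not detected by CV this frame; leave its color unchanged
        err = angle_between(user[joint], ref_dir)
        colors[joint] = error_to_color(err, max_error_deg)
    return colors


if __name__ == "__main__":
    user_pose = {"left_forearm": (1.0, 0.0, 0.0), "neck": (0.0, 1.0, 0.0)}
    reference_pose = {"left_forearm": (0.0, 1.0, 0.0), "neck": (0.0, 1.0, 0.0)}
    print(color_joints(user_pose, reference_pose))
```

In this example the forearm is 90° off, so it is colored fully red, while the neck matches the reference and stays green. A linear blend keeps per-frame cost to a few arithmetic operations per joint, which matters when the frame rate is already a concern.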

Overall, I'd say progress is on schedule, but some of the optimization work took longer than expected. The biggest slowdown was making sure the calculations didn't introduce lag while still maintaining accurate movement tracking. I also think the rotations of some joints still need work, especially the neck, to model its movement more accurately. To stay on track, I plan to refine the physics model further and improve computational efficiency so the system runs smoothly even with more complex movements. Next week, I hope to finalize the avatar recoloring mechanism, refine movement accuracy detection, and test more extensively with a wider range of dance poses. The goal is to make the feedback system more intuitive and responsive before moving on to more advanced features.

Attached below are demo videos showing the current state of the dynamic CV-to-Unity avatar. The physics-based movement will need further tweaking for advanced movements. (Note: the playback speeds of the two GIFs are not the same.)