Risk Management:

Risk: Comparison algorithm not being able to handle depth data

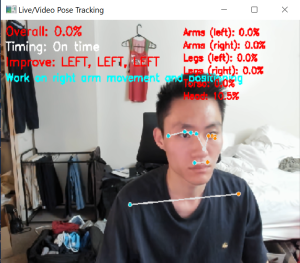

Mitigation Strategy/Contingency plan: We plan to normalize the test and reference videos so that both are expressed in absolute coordinates, which lets us use Euclidean distance in our comparison algorithms. If this does not work, we can fall back to discarding the relative, unreliable depth data from the CV and relying purely on the xy coordinates, which should still provide good-quality feedback for casual dancers.
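As a rough illustration of this plan, the sketch below normalizes two poses and compares them with Euclidean distance, with a flag for the xy-only fallback. It is not our actual implementation: the function names (`normalize_pose`, `pose_distance`), the `use_depth` flag, and the choice to normalize by centering on the centroid and scaling to unit size are all assumptions made for the example.

```python
import math

def normalize_pose(keypoints, use_depth=True):
    """Center a pose on its centroid and scale it to unit size.

    keypoints: list of (x, y, z) tuples (hypothetical input format).
    use_depth: when False, the z coordinate is dropped (xy-only fallback).
    """
    dims = 3 if use_depth else 2
    pts = [p[:dims] for p in keypoints]
    n = len(pts)
    centroid = [sum(p[d] for p in pts) / n for d in range(dims)]
    centered = [[p[d] - centroid[d] for d in range(dims)] for p in pts]
    # Scale by the farthest keypoint from the centroid; guard against a
    # degenerate pose where every keypoint coincides.
    scale = max(math.sqrt(sum(c * c for c in p)) for p in centered) or 1.0
    return [[c / scale for c in p] for p in centered]

def pose_distance(test_pose, ref_pose, use_depth=True):
    """Mean per-keypoint Euclidean distance between two normalized poses."""
    a = normalize_pose(test_pose, use_depth)
    b = normalize_pose(ref_pose, use_depth)
    return sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)
```

With this kind of normalization, a pose that is merely shifted or uniformly scaled relative to the reference scores a distance near zero, and calling `pose_distance(test, ref, use_depth=False)` ignores depth entirely.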

Risk: Comparison algorithm not matching up frame by frame – continued risk

Mitigation Strategy/Contingency plan: We will attempt to change our algorithm so that it accounts for a constant delay between the user and the reference video. If implemented correctly, this will let the user start at a slightly different time than the reference video and still receive accurate feedback. If we are unable to implement this solution, we will add warnings and counters so that users know exactly when to start dancing and their footage lines up with the reference video.
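One possible way to handle a constant delay is to try each candidate offset and keep the one with the lowest average frame distance. The sketch below assumes the user starts late (never early), and the names `estimate_delay`, `max_delay`, and `frame_distance` are hypothetical and chosen only for this example.

```python
def estimate_delay(test_frames, ref_frames, max_delay, frame_distance):
    """Return (delay, cost) for the constant delay that best aligns the user.

    test_frames, ref_frames: per-frame pose data in any form that the
        frame_distance callable accepts (assumed, not our real data format).
    max_delay: largest delay, in frames, to consider.
    frame_distance: callable returning a non-negative distance for two frames.
    """
    best_delay, best_cost = 0, float("inf")
    for delay in range(max_delay + 1):
        # Test frame i + delay is compared against reference frame i.
        overlap = min(len(test_frames) - delay, len(ref_frames))
        if overlap <= 0:
            break
        cost = sum(
            frame_distance(test_frames[i + delay], ref_frames[i])
            for i in range(overlap)
        ) / overlap
        if cost < best_cost:
            best_delay, best_cost = delay, cost
    return best_delay, best_cost
```

Averaging over the overlap keeps costs comparable across delays, but very short overlaps can score misleadingly well, so `max_delay` should stay small relative to the clip length.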

Design Changes:

There were no design changes this week. We have continued to execute our schedule.

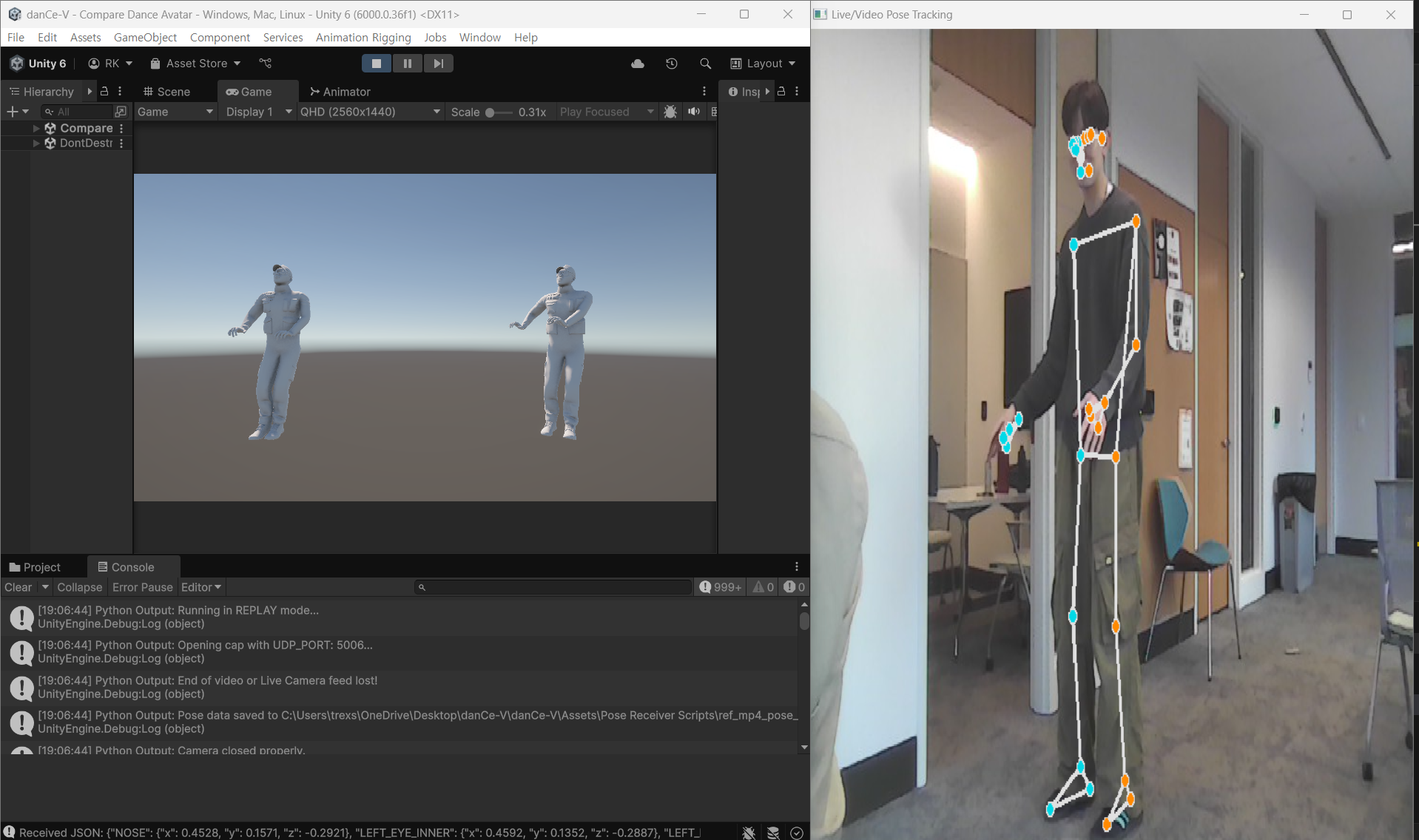

Progress on the project is generally on schedule. Optimizing the avatar processing to run in parallel took slightly longer than anticipated because of synchronization challenges, but integrating the DTW algorithm went reasonably well once we had established the data pipeline. If necessary, I will allocate additional hours next week to refine the comparison algorithm and improve the UI feedback for the player.

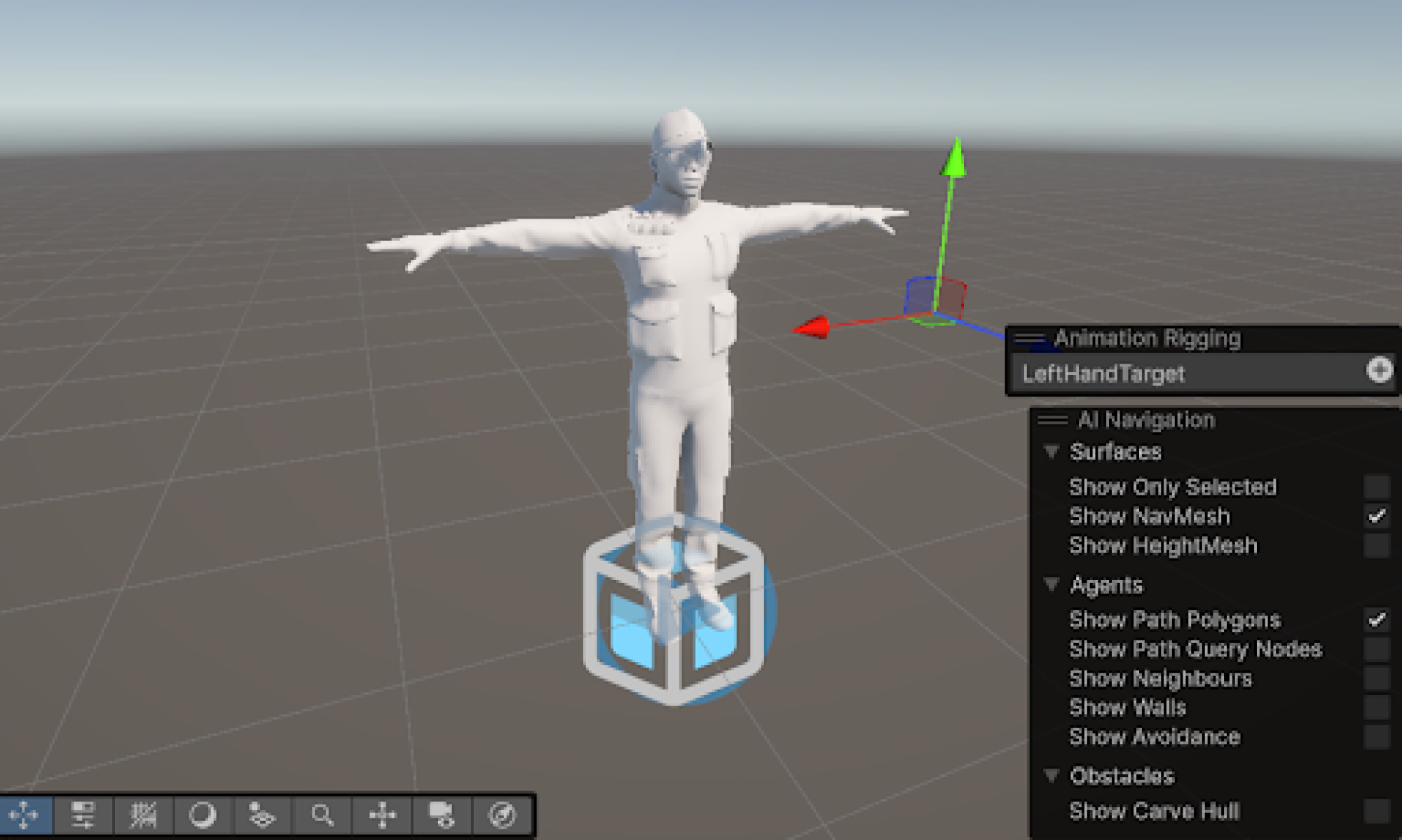

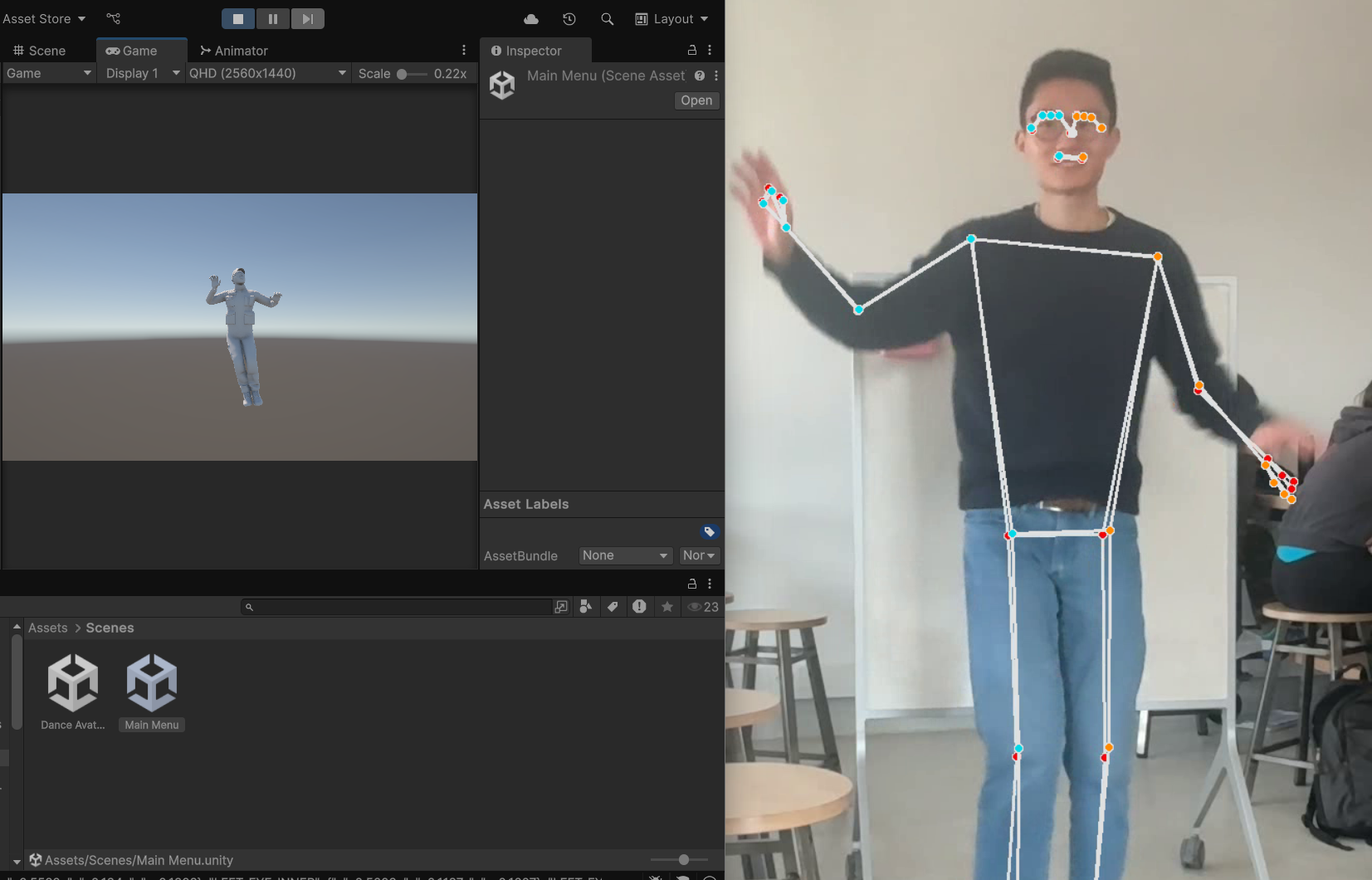

Additionally, I successfully optimized the avatar