This week, I spent much of my time planning and carrying out our pivot from the idea described in our abstract to our current direction: a Unity-based dance coach driven by computer vision (CV) input. Based on our professor’s feedback on the Project Proposal, I also had to plan how we would move away from my initial vision of a rhythm game, in which a music track comes with preset dance moves and the user’s input is compared against those moves. In our new approach, we start from a reference video of a dance sequence. Our CV pipeline interprets the moves and maps them onto a reference avatar in Unity. The user then practices the sequence, and we compare the user’s input against the reference avatar we created.
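
One common way to compare a user's pose to the reference avatar is to compare joint angles rather than raw positions, since angles do not depend on body size or distance from the camera. The sketch below shows that idea under stated assumptions: each pose is a dictionary of 3D joint positions, and the joint names, the `ANGLE_TRIPLETS` table, and the `tolerance_deg` parameter are all hypothetical. It is one possible scoring method, not the one our pipeline is committed to.

```python
import math
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical joint triplets (parent, joint, child); the angle is measured at the middle joint.
ANGLE_TRIPLETS = {
    "left_elbow": ("left_shoulder", "left_elbow", "left_wrist"),
    "right_elbow": ("right_shoulder", "right_elbow", "right_wrist"),
    "left_knee": ("left_hip", "left_knee", "left_ankle"),
    "right_knee": ("right_hip", "right_knee", "right_ankle"),
}


def joint_angle(a: Vec3, b: Vec3, c: Vec3) -> float:
    """Angle in degrees at point b, formed by the segments b->a and b->c."""
    v1 = [a[i] - b[i] for i in range(3)]
    v2 = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(v1, v2))
    n1 = math.sqrt(sum(x * x for x in v1))
    n2 = math.sqrt(sum(x * x for x in v2))
    if n1 == 0 or n2 == 0:
        return 0.0  # degenerate segment: treat as no measurable angle
    cos = max(-1.0, min(1.0, dot / (n1 * n2)))  # clamp against rounding error
    return math.degrees(math.acos(cos))


def pose_similarity(user: Dict[str, Vec3], reference: Dict[str, Vec3],
                    tolerance_deg: float = 90.0) -> float:
    """Score in [0, 1]; 1.0 means every compared joint angle matches exactly."""
    scores = []
    for p, j, c in ANGLE_TRIPLETS.values():
        if not all(k in user and k in reference for k in (p, j, c)):
            continue  # skip joints missing from either pose
        diff = abs(joint_angle(user[p], user[j], user[c])
                   - joint_angle(reference[p], reference[j], reference[c]))
        # Linear falloff: a difference of tolerance_deg or more scores 0.
        scores.append(max(0.0, 1.0 - diff / tolerance_deg))
    return sum(scores) / len(scores) if scores else 0.0
```

For example, a straight left arm in the reference (180° at the elbow) against a user's arm bent to 90° scores 0.0 with the default tolerance, while identical poses score 1.0. Averaging per-joint scores keeps the result easy to show as feedback, and per-joint scores could also highlight which limb is off.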

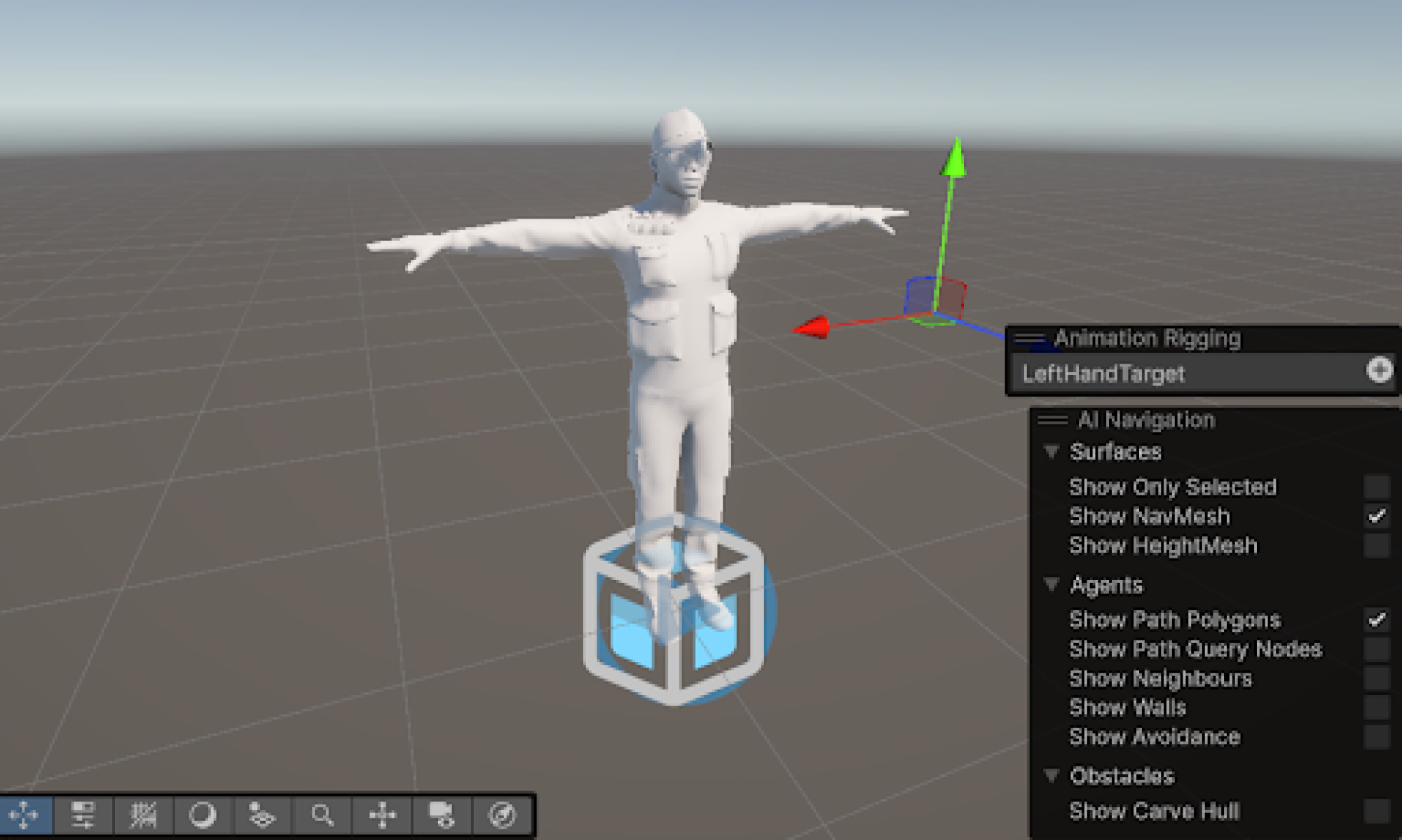

Because our revised project still overlaps substantially with the rhythm-game concept we had before our professor’s feedback, I focused on learning Unity’s workflow, particularly the role of GameObjects and how their components determine their behavior. I explored the hierarchical structure of objects and learned how transforms and parenting affect scene composition. I dedicated a significant portion of my time to character rigging and bone modeling to ensure the avatar is correctly structured for animation. Using a humanoid rig model, I mapped the skeleton and assigned the constraints needed for proper movement. I also wrote code to map the joints and to verify that each joint moves correctly. With the rig fully set up and all required bones assigned for movement, I believe I am on track with the schedule. Next week, I plan to finish input synchronization with Python so that the joint data produced by our Python CV pipeline drives the avatar’s motion. If time allows, I will also start designing a basic environment (background) for the game.
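
For the input synchronization planned for next week, one simple option is to have the Python side serialize each frame of joint positions as JSON and send it over UDP to a listener running inside Unity. The sketch below covers only the Python sender and assumes details that are not settled: the address `UNITY_ADDR`, the packet layout, the joint names, and the `to_unity_space` conversion (which assumes the CV output uses image-style coordinates with y pointing down, while Unity's y axis points up). All of these names are hypothetical.

```python
import json
import socket
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical address of a UDP listener running inside Unity.
UNITY_ADDR = ("127.0.0.1", 5065)


def to_unity_space(p: Vec3) -> Vec3:
    """Flip y, assuming image-style input (y grows downward); Unity's y axis points up."""
    x, y, z = p
    return (x, -y, z)


def encode_pose(frame_id: int, joints: Dict[str, Vec3]) -> bytes:
    """Serialize one frame of joint positions as a UTF-8 JSON packet (hypothetical format)."""
    payload = {
        "frame": frame_id,  # lets the receiver discard frames that arrive out of order
        "joints": {name: list(to_unity_space(p)) for name, p in joints.items()},
    }
    return json.dumps(payload).encode("utf-8")


def send_pose(sock: socket.socket, frame_id: int, joints: Dict[str, Vec3],
              addr: Tuple[str, int] = UNITY_ADDR) -> None:
    """Send one frame as a single UDP datagram."""
    sock.sendto(encode_pose(frame_id, joints), addr)


if __name__ == "__main__":
    # Usage: send a single example frame to the (assumed) Unity listener.
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as s:
        send_pose(s, 0, {"left_wrist": (0.5, 0.2, 0.0)})
```

UDP does not retransmit lost packets, so a dropped frame is simply superseded by the next one, which suits real-time avatar playback better than waiting on a retransmission. On the Unity side, a script would decode each packet and apply the positions to the mapped bones.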