Team Status Report

Risk Management:

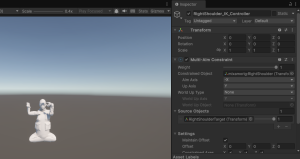

Risk: Movement details may be lost in the transition from MediaPipe output to Unity inputs. We noticed this while running initial experiments this week that pushed simple movements through MediaPipe into Unity.

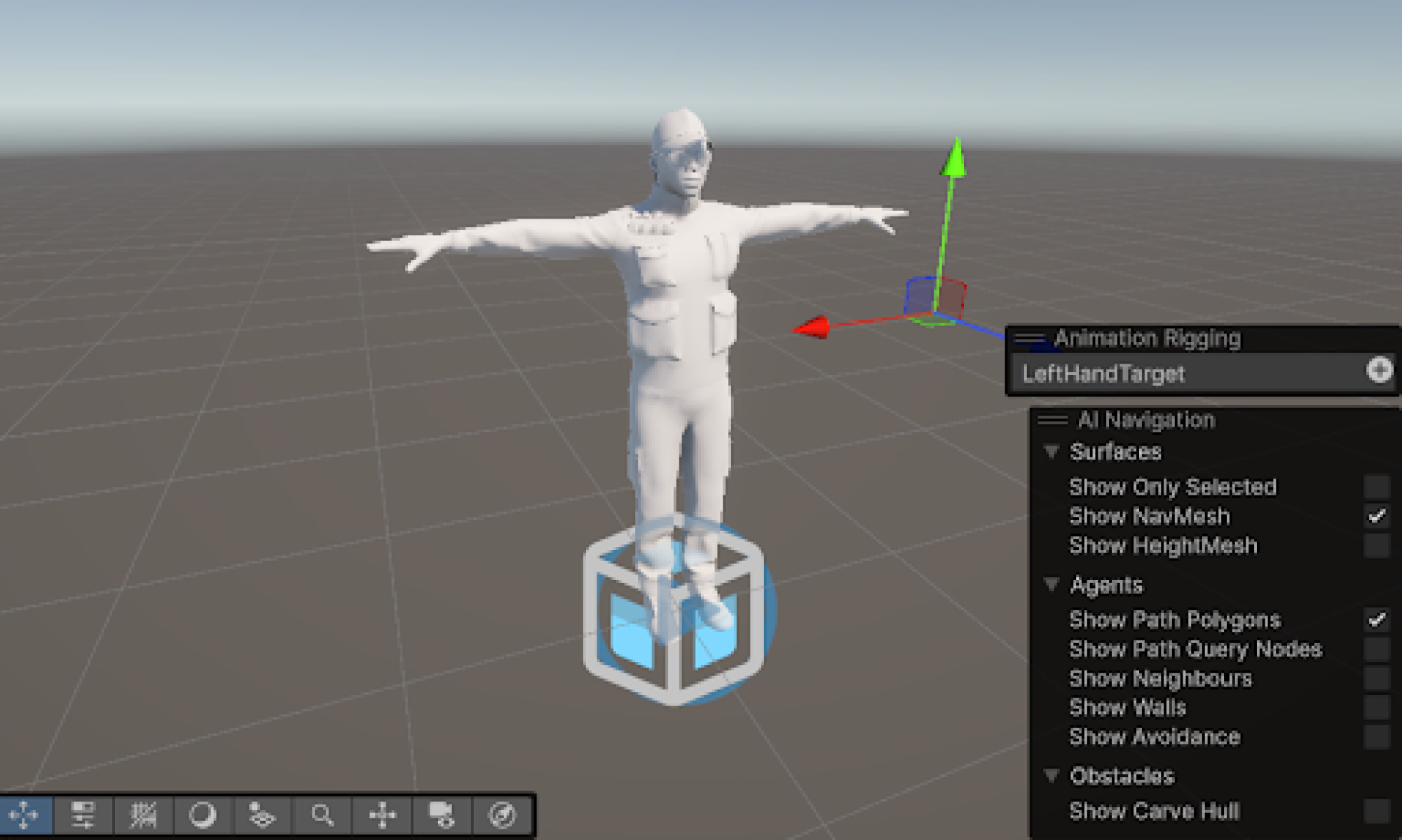

Mitigation Strategy/Contingency Plan: Unity offers several joint options (Two-Bone Inverse Kinematic Constraint, Multi-Aim Constraint, Damped Transform, and Rotation Constraint). We will test these four options to find which one looks the most natural and is most consistent with our MediaPipe data.
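One way to check where detail is being lost is to log exactly what leaves the MediaPipe side before Unity applies any constraint. The sketch below is only an illustration under stated assumptions: it assumes landmarks arrive as normalized `(x, y, z, visibility)` values with the image origin at the top-left and y growing downward, and it recenters them into a y-up frame. The function names `to_unity_coords` and `pack_frame` and the JSON layout are hypothetical, not part of MediaPipe or Unity.

```python
import json


def to_unity_coords(x, y, z):
    """Map a normalized image-space landmark to an origin-centered, y-up frame.

    Assumption: x and y are in [0, 1], origin at the top-left, y pointing down.
    z is passed through unchanged; its sign convention should be checked
    against the avatar rig before it is used.
    """
    return (x - 0.5, 0.5 - y, z)


def pack_frame(frame_index, landmarks):
    """Serialize one frame as JSON, keeping every joint and its visibility."""
    joints = []
    for name, (x, y, z, visibility) in landmarks.items():
        ux, uy, uz = to_unity_coords(x, y, z)
        joints.append(
            {"name": name, "x": ux, "y": uy, "z": uz, "visibility": visibility}
        )
    return json.dumps({"frame": frame_index, "joints": joints})


# Hypothetical single-joint frame used only to exercise the functions.
sample = {"left_wrist": (0.25, 0.75, -0.1, 0.98)}
message = pack_frame(0, sample)
```

Comparing these logged messages with the avatar's resulting pose under each joint option could help show whether detail is dropped in the data hand-off or in the constraint itself.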

Design Changes:

- Specific Design Updates:

- Change: Selecting MediaPipe as our library of choice as opposed to OpenPose

- Why: More detailed documentation, ease of use, and a better match for the level of detail we require

- Change: 3D Comparative Analysis Engine to be done in Unity

- Why: Unity’s detailed avatar rigging allows us to display the dance moves with accuracy and compare the webcam footage with the reference video with sufficient detail

- Cost Impact and Mitigation:

- No direct costs were incurred; these changes were part of the planned exploratory stage in our schedule
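The comparison between webcam footage and the reference video mentioned above could, for example, be based on joint angles. The following is a minimal sketch in Python rather than Unity code, and it is not our final comparison method: it assumes each joint is described by three 3D points (for an elbow: shoulder, elbow, wrist), and the names `joint_angle` and `angle_error` are hypothetical.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at point b between segments b->a and b->c (3D points)."""
    ba = [a[i] - b[i] for i in range(3)]
    bc = [c[i] - b[i] for i in range(3)]
    dot = sum(p * q for p, q in zip(ba, bc))
    norm = math.sqrt(sum(p * p for p in ba)) * math.sqrt(sum(q * q for q in bc))
    if norm == 0:
        return 0.0  # degenerate case: two of the points coincide
    cosine = max(-1.0, min(1.0, dot / norm))  # clamp against rounding error
    return math.degrees(math.acos(cosine))


def angle_error(user, reference):
    """Absolute angle difference in degrees for joints present in both poses."""
    return {
        name: abs(joint_angle(*user[name]) - joint_angle(*reference[name]))
        for name in reference
        if name in user
    }


# Hypothetical poses: the user's elbow is bent at a right angle,
# while the reference arm is fully extended.
user_pose = {"left_elbow": ((0, 1, 0), (0, 0, 0), (1, 0, 0))}
reference_pose = {"left_elbow": ((0, 1, 0), (0, 0, 0), (0, -1, 0))}
errors = angle_error(user_pose, reference_pose)
```

Angles are used here because they do not depend on where the dancer stands in the frame or on body size, which is one reason they may suit comparing two different people.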

Updated Schedule:

Part A was written by Danny Cui, Part B was written by Rex Kim, Part C was written by Akul Singh


It is possible, though probably unusual, that the answer to a particular question would be “does not apply.” In such a case, please describe what you have considered to ensure that it does not apply.

Please write a paragraph or two describing how the product solution you are designing will meet a specified need…

Part A: … with respect to considerations of public health, safety or welfare. Note: The term ‘health’ refers to a state of well-being of people in both a physiological and psychological sense. ‘Safety’ is the absence of hazards and/or physical harm to persons. The term ‘welfare’ relates to the provision of the basic needs of people.

- From a physical health perspective, the system promotes regular exercise through dance, which improves cardiovascular fitness, flexibility, coordination, and muscle strength. The feedback mechanism helps users maintain proper form and technique, reducing the risk of dance-related injuries that could occur from incorrect movements or posture. This is particularly valuable for individuals who may not have access to in-person dance instruction or cannot afford regular dance classes.

- From a psychological health and welfare standpoint, the system creates a safe, private environment for users to learn and practice dance without the anxiety or self-consciousness that might arise in group settings. Dance has been shown to reduce stress, improve mood, and boost self-esteem, benefits that become more accessible through this technology. The immediate feedback loop also provides a sense of accomplishment and progression, fostering motivation and sustained engagement in physical activity. Additionally, the system addresses safety concerns by allowing users to learn complex dance moves at their own pace in a controlled environment, with guidance that helps prevent overexertion or dangerous movements. This is especially important for beginners or those with physical limitations who need to build up their capabilities gradually.

Part B: … with consideration of social factors. Social factors relate to extended social groups having distinctive cultural, social, political, and/or economic organizations. They have importance to how people relate to each other and organize around social interests.

- Our computer vision-based dance-coaching game makes dance training more accessible and engaging. Traditional dance lessons can be hard to find, especially in remote areas, and the recurring cost of classes can be a barrier. Our game removes these barriers by letting users practice at home with just a camera and computer setup. Using MediaPipe and Unity, it analyzes an input video and compares the user’s movements to an ideal reference. Real-time feedback helps users improve without needing an in-person instructor. This makes dance education more available to people who may not have the resources or opportunities to attend formal classes.

- Beyond accessibility, our game also fosters cultural exchange and social engagement. Dance is deeply tied to cultural identity, and by incorporating a variety of dance styles from different traditions, the game can serve as an educational tool that promotes appreciation for diverse artistic expressions. Users can learn and practice traditional and contemporary dance forms, helping preserve cultural heritage while making it more interactive and engaging for younger generations. Additionally, the game can create virtual dance communities, encouraging users to share their performances, participate in challenges, and interact with others who share their interests.

Part C: … with consideration of economic factors. Economic factors are those relating to the system of production, distribution, and consumption of goods and services.

- Since our application relies only on a webcam and computer processing, its economic impact is primarily related to accessibility, affordability, and potential market reach. Unlike traditional dance classes, which require ongoing payments for instructors and studio rentals, our application offers a cost-effective alternative by enabling users to practice and improve their dance skills from home. This affordability makes dance education more accessible to individuals who may not have the financial means to attend in-person lessons, thus reducing economic barriers to learning a new skill.

- Additionally, our application aligns with current technological trends in society, where software-based fitness and entertainment solutions generate revenue through app sales, subscriptions, or advertisements. The fact that danCe-V only requires a computer webcam also reduces the financial burden on users, as they do not need specialized equipment beyond a standard webcam and computer. This makes it an economically sustainable option for both consumers and potential business models, allowing the platform to reach a broad audience while keeping costs low.

Images:

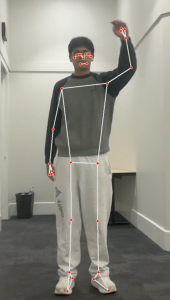

Testing from video input:

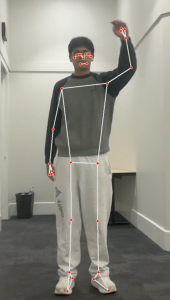

Testing from direct webcam footage:
