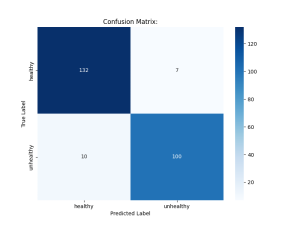

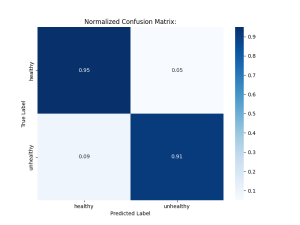

This week I got the mister working. I also finalized data collection (images and sensor readings) for the ML plant health detection model. In total, we now have 746 data points from 14 plants across 4 species. Based on testing results, I made some adjustments to the ML model, mainly fine-tuning the image and sensor models on our dataset before training the late fusion classifier. With these adjustments, I achieved the following results:

- True Positive Rate (TPR): 0.9091

- True Negative Rate (TNR): 0.9496

- False Positive Rate (FPR): 0.0504

- False Negative Rate (FNR): 0.0909
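For reference, these four rates come from the confusion-matrix counts of the test set: TPR and FNR are computed over the truly positive samples, and TNR and FPR over the truly negative ones, so TPR + FNR = 1 and TNR + FPR = 1 (which is why 0.9091 pairs with 0.0909 and 0.9496 with 0.0504). The minimal sketch below assumes "unhealthy" is the positive class; the counts in the example are hypothetical and are not our actual test split.

```python
def rates(tp: int, fn: int, tn: int, fp: int) -> dict:
    """Compute the four confusion-matrix rates for a binary classifier."""
    positives = tp + fn  # all truly positive samples (assumed: unhealthy plants)
    negatives = tn + fp  # all truly negative samples (assumed: healthy plants)
    return {
        "TPR": tp / positives,
        "FNR": fn / positives,
        "TNR": tn / negatives,
        "FPR": fp / negatives,
    }


# Hypothetical counts for illustration only.
r = rates(tp=19, fn=1, tn=38, fp=2)
# Check both error rates against a 10% limit.
meets_limit = r["FPR"] < 0.10 and r["FNR"] < 0.10
print(r, meets_limit)
```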

Although the FPR (5.04%) narrowly misses the requirement I initially set in the use case and design requirements (FPR < 5%), I believe the model performs reasonably well given our dataset and time constraints, so I will be revising the design requirements to FPR and FNR < 10%.
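To illustrate what a late fusion classifier does, the sketch below combines the outputs of two separately trained models rather than their raw inputs. It assumes that the fine-tuned image model and sensor model each output a probability that a plant is unhealthy, and that the fusion classifier is a logistic regression over those two probabilities, trained by batch gradient descent. This post does not specify the fusion classifier's architecture, so the logistic-regression choice, the function names, and the toy probabilities are all hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_fusion(features, labels, lr=0.5, epochs=2000):
    """Fit logistic-regression weights on [p_image, p_sensor] pairs.

    Assumption: each feature row holds the unhealthy-probabilities output
    by the (already fine-tuned) image and sensor models.
    """
    n_feat = len(features[0])
    n = len(features)
    w = [0.0] * n_feat
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_feat
        grad_b = 0.0
        for x, y in zip(features, labels):
            # Gradient of the log loss w.r.t. the logit is (prediction - label).
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for j in range(n_feat):
                grad_w[j] += err * x[j]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict_fusion(w, b, p_image, p_sensor, threshold=0.5):
    """Return (label, fused probability); label 1 means 'unhealthy' (assumed)."""
    p = sigmoid(w[0] * p_image + w[1] * p_sensor + b)
    return int(p >= threshold), p


# Hypothetical unimodal outputs and labels, for illustration only.
X = [[0.9, 0.8], [0.8, 0.7], [0.7, 0.9], [0.2, 0.1], [0.1, 0.3], [0.3, 0.2]]
y = [1, 1, 1, 0, 0, 0]
w, b = train_fusion(X, y)
print(predict_fusion(w, b, 0.85, 0.85), predict_fusion(w, b, 0.15, 0.15))
```

Training the fusion step separately after fine-tuning each unimodal model keeps the two feature extractors independent, so either one can be retrained without touching the other.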

Aside from that, Zara and I began testing the overall system on 3 African Violet plants. We placed the plants in the greenhouse, set the system to automatic mode, and have been monitoring them daily to confirm that it works as required (lights turn on according to schedule, heaters/misters/watering respond to PID control, etc.).
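As a rough picture of the PID control mentioned above, here is a minimal discrete PID controller sketch. It assumes each actuator (heater, mister, watering) gets its own controller instance driving a normalized output such as a duty cycle in [0, 1]; the class name, gains, setpoints, and output range are hypothetical placeholders, not the values used in our system.

```python
class PID:
    """Discrete PID controller (hypothetical sketch; gains and limits are placeholders)."""

    def __init__(self, kp, ki, kd, out_min=0.0, out_max=1.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = None  # no derivative term on the first update

    def update(self, setpoint, measurement, dt):
        error = setpoint - measurement
        self.integral += error * dt  # accumulate error over time
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        output = self.kp * error + self.ki * self.integral + self.kd * derivative
        # Clamp to the actuator's range, e.g. a heater duty cycle in [0, 1] (assumed).
        return max(self.out_min, min(self.out_max, output))


# Hypothetical usage: heater controller, target 24 °C, sampled once per second.
heater = PID(kp=0.5, ki=0.01, kd=0.1)
duty = heater.update(setpoint=24.0, measurement=22.5, dt=1.0)
print(duty)
```

Clamping the output keeps commands within what the hardware can do; a production controller would likely also add integral anti-windup so the integral term does not keep growing while the output is saturated.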

I am on schedule, having completed all my tasks. I am now focusing on finishing the final assignments: the poster, video, and report.

Next Week’s Deliverables:

- Complete poster

- Complete video

- Complete demo

- Complete report