Report

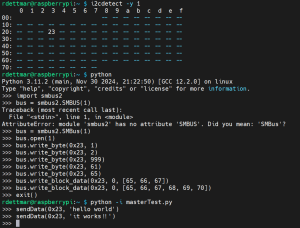

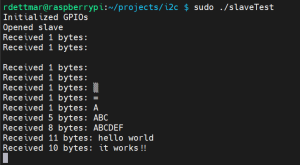

I soldered pins into the I2C GPIOs on the Rasppi boards to make them easier to access. With a steadier metal-to-metal connection I was able to test the Python version of the I2C library and got it working as well. That makes wrapping the core code on each board in the necessary communication harness much simpler, since everything is now in the same language. I also have an example of it working event-based instead of polling on every loop, so I don’t have to fight through figuring out event-based code in C++.

I measured the current draw of each of the Rasppis running their core code, so I know how large a battery I need to purchase, and it turned out to be a little less than I expected. 1200mAh should do it. I’ve put in an order for a 3.7V LiPo that size (I think? This week has been a little hazy in general. If not, I’ll do it tomorrow; either way it should get ordered early next week), and I have a 1200mAh LiPo on hand from a personal project that I can start wiring things to on a temporary basis before the new one arrives.
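As a rough sanity check on the battery sizing, the runtime estimate is just usable capacity divided by total current draw. The sketch below assumes a hypothetical helper `runtime_hours` and placeholder draw values; the per-board numbers are NOT my measured values, and the 0.8 usable fraction is an assumed derating. It also ignores regulator losses between the 3.7V cell and the boards.

```python
def runtime_hours(capacity_mah, draws_ma, usable_fraction=0.8):
    """Estimate runtime in hours for one battery feeding several loads.

    capacity_mah:    rated battery capacity in mAh
    draws_ma:        current draw of each load in mA
    usable_fraction: assumed share of rated capacity that is actually usable
    """
    total_ma = sum(draws_ma)
    if total_ma <= 0:
        raise ValueError("total current draw must be positive")
    return capacity_mah * usable_fraction / total_ma


# Placeholder per-board draws in mA (hypothetical, not measured values)
example_draws_ma = [200.0, 200.0]

hours = runtime_hours(1200.0, example_draws_ma)
print(f"Estimated runtime: {hours:.1f} h")
```

Swapping the real measurements in for `example_draws_ma` gives a quick check on whether 1200mAh covers the length of a demo.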

Also, the display arrived! As I understand it, it arrived on time (March 22), but I didn’t actually get the ticket from receiving until much later in the week, since the original one went to the wrong email (one attached to my name but on the wrong domain, and which I thought had been closed. Oops). But I have it now. It’s here! I feel so much less terrified of this thing now that it’s here! I still need to get my hands on a reflective surface. I will probably just order little mirror tiles and cut them to size, or a reflective tape that I can stick to the same sort of acrylic that I’m going to cut the lenses out of. Gonna see what’s cheaper/faster/etc. on Amazon.

I modified the draft of the CAD so I’ll be able to mount the Rasppis and the camera to it for the interim demo. I ran out of time to do anything else, because the remaining tasks are more complicated and the display came too late in the week for me to fight with it.

Progress Schedule

Things are getting done. I don’t know. It’s Carnival week and I am coming apart at the seams. I will reevaluate after next Sunday.

Next Week’s Deliverables

Interim demo. That’s it. Tomorrow I plan on printing the headset and attaching the boards I have, and then I’ll have the wire lengths to connect the I2C pins. It’s gonna get done.