Report

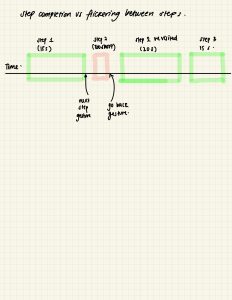

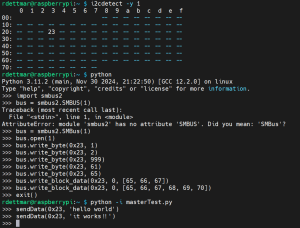

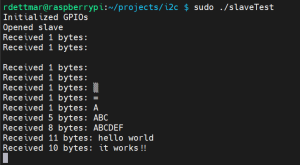

I corrected the soldering issue on the I2C pins as best I could. The connection is still inexplicably intermittent, but it’s consistent enough that I don’t think sinking more time into fixing it is worth the cost. Instead, the software will have to account for the fact that an I2C message may not go through on the first try, by retrying until it receives the correct acknowledgement. As the amount of data being sent is minimal, this seems to me like a fairly low-cost workaround.
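A minimal sketch of what that retry workaround could look like in Python. This is not the project's actual code: `send_with_retry`, `max_attempts`, and `delay_s` are hypothetical names, and `send` stands in for whatever function wraps the real I2C write and reports whether the expected acknowledgement came back. It assumes a failed transfer either returns `False` or raises `OSError`, which is how bus errors commonly surface in Python on Linux.

```python
import time
from typing import Callable


def send_with_retry(
    send: Callable[[bytes], bool],
    payload: bytes,
    max_attempts: int = 5,   # assumed limit, tune to the real link
    delay_s: float = 0.01,   # assumed pause between attempts
) -> int:
    """Call send(payload) until it succeeds; return the attempts used.

    `send` is a hypothetical wrapper around the real I2C write. It should
    return True once the correct acknowledgement is received.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            if send(payload):
                return attempt
        except OSError:
            # Treat a bus error (for example, a missing ACK) as a failed attempt.
            pass
        if attempt < max_attempts:
            time.sleep(delay_s)
    raise OSError(f"no acknowledgement after {max_attempts} attempts")
```

Capping the number of attempts keeps a fully broken connection from hanging the program, and returning the attempt count makes it easy to log how flaky the link is in practice.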

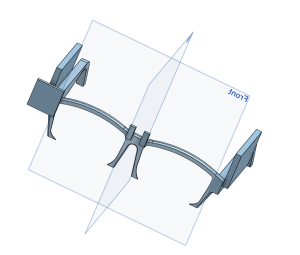

Additionally, since the first 3D print of the headset, I’ve reworked the CAD design into three parts (the front and the two sidebars) that all print flat. This is simpler and lower-cost, and it will not require cutting away so much support material after the fact, which was a major problem with the initial design. I plan to join them after printing with a small amount of short-work-time epoxy resin at the joints. Also, since the display came in the week of Carnival, I’m working on the mount for it and the battery, as well as the power management board. I worry that mounting the battery at the front of the headset will make the device very front-heavy, but I believe this is the best of the options I considered, which are as follows:

- Mounting to the front-middle, over the brow. Front-heavy, and also places the battery fairly close to the user’s face. I don’t expect the battery to get hotter than somewhat warm, so I don’t think this is a problem.

- Mounting to one of the sides, which would make the device side-heavy. I believe that side-heaviness, asymmetry across the human body’s axis of symmetry, is more difficult to deal with and more uncomfortable for the user than front-heaviness.

- Mounting at the rear of the head, from, for instance, an elastic that attaches to the headset’s sidebars. The battery is light enough that acquiring an elastic strong enough to support it would be possible. However, this demands that the power lines between the battery and the devices on the headset be very long and constantly flexing, which is a major risk. The only mitigation I could come up with would be making the stiff plastic go all the way around the head, but this severely constrains the usability.

So to the front of the frame it goes. If it proves more of a difficulty than I expect, I will reconsider this decision.

Progress Schedule

My remaining tasks, roughly in order of operation:

- Finish designing the mount for the display on the CAD.

- Print the CAD parts and epoxy them together.

- Solder the display wires to the corresponding Rasppi and run its AV output.

- I’ll test the AV before soldering the same way I tested the I2C, by holding the wires in place to ensure the Rasppi is outputting as I expect, and the display is displaying.

- This link has a diagram that shows the display wiring labels.

- This link has the Rasppi 02W’s test pad pinouts, with the composite TV.

- Purchase machine screws and nuts to attach the devices onto the frame.

- I need #3-48 (0.099″ diameter) screws that are either 3/8″ or 1/2″ long, and nuts of the same gauge and thread pitch.

- Purchase car HUD mirror material for the display setup.

- This.

- Cut clear acrylic to the shape of my lenses.

- Solder together the power supply (charging board & battery).

- Solder the Rasppis’ power inputs to the power supply.

- Mount the Rasppis, camera, display, and power supply to the headset.

Next Week’s Deliverables

Pretty much everything listed above has to get done this week, or at least as much of it as absolutely possible.

Also, the slides and preparation for the final presentation, which I’m going to be giving for my group.

New Tools & Knowledge

I’ve learned how to use a great many new tools this semester. I’d never worked with a Raspberry Pi of any sort before, only much more barebones Arduinos, so just about all of the libraries we used to write the software were also new to me. I learned about these through the official documentation, of course, but also through forum posts from the Raspberry Pi community, FAQs, and in a few cases previous 18500 groups’ writeups. I’ve used I2C before, but only through higher-level libraries than I could use this time, because I had to manually set up one of my Raspberry Pis as an I2C slave device.

Also, my previous knowledge of 3D printing was minimal, though I’ve worked with SolidWorks and Onshape before (I’m using Onshape for this project). I learned a lot about how printing works, how the tools work, the tenets of printable design, and so on, partly through trial and error but also from some of my mechanical engineering friends, who have to do a great deal of 3D printing for their coursework.