Report

I have learned that, despite supposedly being a very mainstream device, the Raspberry Pi is… remarkably unintuitive. I’m using Raspberry Pi OS Lite to run the Rasppi headless and ssh into it, though for an as-yet-unclear reason my computer can’t resolve the Rasppi’s hostname and I have to use the IP address directly. This has only worked for this week’s development because at home I have direct access to my router and its IP address assignments; I will have to resolve the issue immediately upon returning to campus. Figuring out how to get into the Rasppi took just far, far too long, because every single tutorial and answered question and Guide To Headless Rasppis that I could find online assumed that you could resolve the hostname, which is a very reasonable assumption, and simply bizarrely untrue in my case. I don’t know.
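For narrowing down the hostname problem from the laptop side, a small check like the one below can show which names (if any) resolve. This is only a sketch: the candidate names are assumptions (Raspberry Pi OS normally advertises itself over mDNS as `<hostname>.local`, with `raspberrypi` as the stock hostname), and `resolve_first` is a hypothetical helper, not something from any of the tutorials.

```python
import socket


def resolve_first(candidates, resolver=socket.gethostbyname):
    """Return (name, ip) for the first candidate that resolves, else None.

    `resolver` is injectable so the logic can be checked without a network.
    """
    for name in candidates:
        try:
            return name, resolver(name)
        except OSError:  # socket.gaierror is a subclass of OSError
            continue
    return None
```

Calling `resolve_first(["raspberrypi.local", "raspberrypi"])` on the laptop returns the first name that works along with its address, or `None` if neither resolves, which at least separates "mDNS is broken" from "ssh is broken".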

The Raspberry Pi OS Imager also doesn’t tell you what the name of the OS you’re using is, and even on the main website it’s just kind of… a throwaway inline parenthetical comment. Despite being the main thing the entire community uses to refer to the major versions of the OS. And so many things changing between them. It’s. This was a conscious decision. Why would you do it this way.

After figuring out the issue and getting into the board, getting it to talk to the camera was relatively simple (though I had the cable in upside down for a bit, which was deeply frustrating to discover after an hour and a half of debugging. So it goes). I’m using the native Raspberry Pi Camera Module, which is, you know, supposed to be the native camera and therefore straightforward to use, but you would just not believe the number of problems I have had because I’m using a native Pi camera instead of a USB camera.

First photograph captured from the Pi camera! It’s blurry and poorly exposed because I’ve left the protective plastic tab over the lens, since it still has to travel back to Pittsburgh. I expect the quality to be better once I take that off.

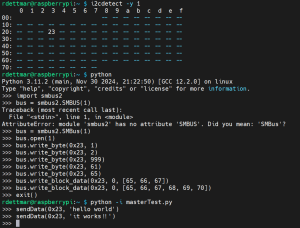

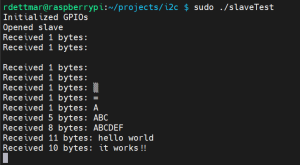

I also discovered that OpenCV’s primary image capture method VideoCapture(camera_id) is not compatible with libcamera, the regular Raspberry Pi camera library, because of course it isn’t. Surely nobody would ever want to use OpenCV straightforwardly on a minimal Raspberry Pi. Surely that couldn’t be an extremely common desire and mainstream goal. Can’t imagine.

However, Picamera2, the Bookworm-era Python wrapper for libcamera, is configurable enough to feed frames to MediaPipe more or less directly.

(As an aside: all of the libraries I used this week were available via pip, which also seems to be the simplest way to install MediaPipe. The exception was Picamera2, which was only available through apt; I set include-system-site-packages to true in my venv’s pyvenv.cfg so that the virtual environment could see it.)
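As a rough sketch of what that change amounts to, the helper below rewrites the text of a `pyvenv.cfg` so that the `include-system-site-packages` key is set to `true`. The function name is hypothetical; editing the file by hand does the same thing, as does creating the environment with `python3 -m venv --system-site-packages` in the first place.

```python
def enable_system_site_packages(cfg_text):
    """Return pyvenv.cfg text with include-system-site-packages set to true."""
    key = "include-system-site-packages"
    lines = cfg_text.splitlines()
    found = False
    for i, line in enumerate(lines):
        # pyvenv.cfg is a flat list of "key = value" lines.
        if line.split("=")[0].strip() == key:
            lines[i] = f"{key} = true"
            found = True
    if not found:
        lines.append(f"{key} = true")
    return "\n".join(lines) + "\n"
```

With this setting the venv still prefers its own pip-installed packages, but falls back to the system ones (such as the apt-installed Picamera2) when a module is not found locally.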

This is the MediaPipe on Raspberry Pi tutorial I started from. It doesn’t work on its own, because it relies on the OpenCV method that doesn’t work, but I used it and the associated tutorials linked to set up the Python environment (sigh. why did it have to be Python) and MediaPipe installation.

I found this document, which was exactly what I wanted to do, with the sole caveat that it’s ten years out of date. Picamera has been replaced by Picamera2, which has been significantly streamlined, so the translation isn’t 1:1, and I’m not familiar enough with either library to do a quality translation. Sigh.

I ended up being able to scavenge bits and pieces from this document and from the Picamera2 repo examples to make a trial script which captures images off the camera and displays them via OpenCV (in this case over my ssh tunnel, which was very slow, but I hope a good part of that is the ssh streaming and that it will speed up when I cut that).
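The shape of that trial script is roughly the following. This is a minimal sketch, not the actual script: `stream_frames` and `main` are names made up here, and the 640×480 size and window title are assumptions. The Picamera2 and OpenCV imports sit inside `main()` so the loop itself can be exercised on a machine without a camera.

```python
def stream_frames(capture, show, max_frames=None):
    """Call capture() repeatedly and pass each frame to show(frame).

    Stops when show() returns False or after max_frames frames.
    Returns the number of frames handled.
    """
    count = 0
    while max_frames is None or count < max_frames:
        frame = capture()
        count += 1
        if show(frame) is False:
            break
    return count


def main():
    # Imported here so stream_frames can be used without camera hardware.
    import cv2
    from picamera2 import Picamera2

    picam2 = Picamera2()
    # Picamera2's "RGB888" format is laid out in memory as B, G, R,
    # which is the channel order OpenCV expects.
    picam2.configure(picam2.create_preview_configuration(
        main={"size": (640, 480), "format": "RGB888"}))
    picam2.start()

    def show(frame):
        cv2.imshow("Pi camera", frame)
        # Returning False (when 'q' is pressed) ends the loop.
        return (cv2.waitKey(1) & 0xFF) != ord("q")

    try:
        stream_frames(picam2.capture_array, show)
    finally:
        picam2.stop()
        cv2.destroyAllWindows()
```

On the Pi, calling `main()` opens the preview window; `capture_array()` stands in for the `VideoCapture.read()` call that the OpenCV-only tutorials use.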

I was then able to graft the working Picamera2 image-capture script onto the MediaPipe script provided in the first tutorial. I’m just using a generic model right now, not our own custom gesture language, but it is proof that the software works on the hardware. If only just barely. At this point it ran extraordinarily slowly: there was a truly untenable amount of lag between my hand motions and what I saw on the screen, and even more between the motion of the frames on the screen and the MediaPipe overlay. Making it run faster became a critical priority.
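In outline, the graft looks something like the sketch below. It assumes MediaPipe's legacy `mp.solutions.hands` API, which may not be the one the tutorial script uses, and the function names and callback are hypothetical. `capture_bgr` is any callable returning a BGR frame, for example Picamera2's `capture_array` with the main stream in "RGB888" format.

```python
def landmark_to_pixel(x_norm, y_norm, width, height):
    """Convert normalized [0, 1] landmark coordinates to pixel coordinates,
    clamped so the result always lies inside the frame."""
    px = min(max(int(x_norm * width), 0), width - 1)
    py = min(max(int(y_norm * height), 0), height - 1)
    return px, py


def track_hands(capture_bgr, on_fingertip, max_frames=None):
    """Run hand tracking on frames from capture_bgr() and report the
    index fingertip position in pixels via on_fingertip((x, y))."""
    # Imported here so landmark_to_pixel works without these installed.
    import cv2
    import mediapipe as mp

    mp_hands = mp.solutions.hands
    count = 0
    with mp_hands.Hands(max_num_hands=1,
                        min_detection_confidence=0.5) as hands:
        while max_frames is None or count < max_frames:
            frame = capture_bgr()
            count += 1
            height, width = frame.shape[:2]
            # MediaPipe expects RGB; the camera frames here are BGR.
            results = hands.process(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB))
            if results.multi_hand_landmarks:
                tip = results.multi_hand_landmarks[0].landmark[
                    mp_hands.HandLandmark.INDEX_FINGER_TIP]
                on_fingertip(landmark_to_pixel(tip.x, tip.y, width, height))
```

Reporting a single landmark rather than drawing the full overlay is a deliberate simplification here; drawing onto every frame is extra per-frame work on an already struggling board.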

Image capture of the MediaPipe hand tracker running on the Raspberry Pi.

I modified the camera configuration to tell the software reading the camera both the resolution that I wanted out of it (which was already there) and the raw native resolution of the camera. This seemed to fix my zoom problems: the camera’s field of view was far smaller than I had expected or wanted, and it seemed to have just been cutting a 640×480 box out of the center of the FOV. With access to the native resolution, it appears to be binning the pixels down to the desired resolution much more cleanly. Additionally, I set a fixed framerate, which had previously just been at “whatever the software can handle”. Pinning it at 1.5fps sped up MediaPipe’s response time greatly, improved its accuracy, and made all of the lag functionally disappear (even while still streaming the output). It also kept the board from getting so dang hot as it was before; Raspberry Pis since the 3 underclock when they hit 60°C, and according to my temp gun that’s about where I was hanging before I capped the framerate, so that was probably also contributing to the lag.
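A configuration along these lines might look like the following sketch. It is an assumption about how the pieces fit together rather than the actual script: the helper names are hypothetical, while `main`, `raw`, and `controls` are keyword arguments of Picamera2's `create_preview_configuration()`, and `sensor_resolution` is the Picamera2 property reporting the sensor's native size.

```python
def camera_config_kwargs(output_size, sensor_size, fps):
    """Build keyword arguments for Picamera2.create_preview_configuration()."""
    return {
        # The downscaled stream handed to OpenCV / MediaPipe.
        "main": {"size": output_size, "format": "RGB888"},
        # Requesting a full-size raw stream steers libcamera toward a sensor
        # mode covering the whole field of view instead of a centre crop.
        "raw": {"size": sensor_size},
        # Pin the framerate rather than letting the camera run flat out.
        "controls": {"FrameRate": fps},
    }


def configure_camera(output_size=(640, 480), fps=1.5):
    """Open the camera with the settings above (needs real hardware)."""
    from picamera2 import Picamera2

    picam2 = Picamera2()
    kwargs = camera_config_kwargs(output_size, picam2.sensor_resolution, fps)
    picam2.configure(picam2.create_preview_configuration(**kwargs))
    picam2.start()
    return picam2
```

Keeping the dictionary in its own function makes it easy to try different output sizes and framerates without touching the capture loop.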

Image capture of the MediaPipe hand tracker working on the Raspberry Pi.

1.5fps is a little lower than I wanted it to be, though. I switched the framerate and recognition outputs to plain printed lines on the console and turned off the streaming, and was able to trivially double my framerate to 3fps. This hits the spec requirement!
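For the printed framerate, something as small as the sketch below is enough. `FpsMeter` is a hypothetical helper, not part of any library; it reports the average rate since the first frame, and the clock is injectable so the arithmetic can be checked off the Pi.

```python
import time


class FpsMeter:
    """Average frames per second since the first tick."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._start = None
        self._frames = 0

    def tick(self):
        """Record one processed frame and return the average FPS so far.

        The first call only starts the timer and returns 0.0.
        """
        now = self._clock()
        if self._start is None:
            self._start = now
            return 0.0
        self._frames += 1
        elapsed = now - self._start
        return self._frames / elapsed if elapsed > 0 else 0.0
```

In the main loop this becomes one line per frame, e.g. `print(f"{label} {meter.tick():.1f} fps")`, where `label` stands for whatever the recognizer returned.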

If possible, I’d like to try to pull OpenCV entirely out of the script (with the possible exception of its streaming feature for debugging purposes) since Picamera2 seems to have all of the functionality of OpenCV that I’m using, and in a much more lightweight, Raspberry Pi-native library. I believe this may help me improve the responsiveness of MediaPipe, and will certainly make the script cleaner, with fewer redundant, overkill tools. However, since it works just fine as is, this is not a high priority.

Progress Schedule

I’ve shuffled around my tasks slightly, accelerating the work on MediaPipe while pushing off the HDMI output slightly, so I’m ahead on one section while being behind on another. I’ve also had to put off measuring the power consumption of the Rasppi until I had the recognition model working- in retrospect, I don’t know why measuring the power consumption was placed ahead of getting the most power-hungry algorithm working. I’m not particularly worried about the lead time on the battery, so I’m fine with that getting estimated and selected a bit later than expected.

Next Week’s Deliverables

Originally next week was meant to be the MediaPipe recognition week, while this week was for the HDMI out, but this has been flipped; I plan on working on the code which will generate the display images next week. Additionally, I’ll have to figure out how to log into the Rasppi on the school’s internet connection when I don’t know its IP address directly, which may take a nontrivial amount of time.