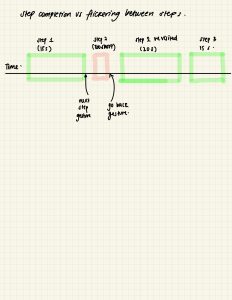

I am currently on track with the project schedule. The gesture recognition system is now fully functional on my computer display and recognizes all of the defined gestures.

This week I focused on building the recipe database. I scraped recipe data from Simply Recipes and structured it into JSON; each recipe entry includes fields for title, image, ingredients, detailed step-by-step instructions, author, and category. The scraping and debugging process was somewhat tedious, as I had to manually inspect the page’s HTML tags to accurately locate and extract the necessary data.

The scraped data still needs post-processing and cleanup. In our use case requirements, we specified that each step description should be under 20 words, but many of the scraped steps exceed that limit. In addition, some scraped content includes unnecessary footer text such as “Love the recipe? Leave us stars and a comment below!” and unrelated tags like “Dinners,” “Most Recent,” and “Comfort Food” that must be removed before display.
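The extraction step can be sketched with Python's standard-library `html.parser`. This is a minimal sketch, not the project's actual scraper: the class names in `FIELD_CLASSES` (`recipe-title`, `ingredient`, `step`) are hypothetical placeholders for whatever tags and classes turn up when inspecting the Simply Recipes HTML, and only the title, ingredients, and steps are collected. One detail it illustrates: the ingredient strings in the sample recipe entry run quantity, unit, and name together (e.g. “2tablespoonsunsalted butter”), which typically happens when the text of sibling tags is concatenated with no separator, so this parser joins the text fragments inside each element with single spaces.

```python
import json
from html.parser import HTMLParser

# Hypothetical class names; the real ones come from inspecting the site's HTML.
FIELD_CLASSES = {"recipe-title": "title", "ingredient": "ingredients", "step": "steps"}
# Tags that never get a closing tag, so they must not change the nesting depth.
VOID_TAGS = {"br", "img", "hr", "input", "meta", "link", "source", "wbr"}


class RecipeParser(HTMLParser):
    """Collects the text of elements whose class matches FIELD_CLASSES."""

    def __init__(self):
        super().__init__()
        self.recipe = {"title": None, "ingredients": [], "steps": []}
        self._field = None  # field currently being captured, if any
        self._depth = 0     # nesting depth inside the captured element
        self._parts = []    # text fragments seen inside the captured element

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self._field is not None:
            self._depth += 1
            return
        for cls in (dict(attrs).get("class") or "").split():
            if cls in FIELD_CLASSES:
                self._field, self._depth, self._parts = FIELD_CLASSES[cls], 1, []
                break

    def handle_endtag(self, tag):
        if self._field is None or tag in VOID_TAGS:
            return
        self._depth -= 1
        if self._depth > 0:
            return
        # Join fragments with spaces so "2", "tablespoons", "unsalted butter"
        # do not run together, then collapse repeated whitespace.
        text = " ".join(" ".join(self._parts).split())
        if self._field == "title":
            self.recipe["title"] = text
        elif self._field == "steps":
            self.recipe["steps"].append({"description": text, "image": None})
        else:
            self.recipe["ingredients"].append(text)
        self._field = None

    def handle_data(self, data):
        if self._field is not None and data.strip():
            self._parts.append(data.strip())


# Hypothetical markup shaped like one ingredient and one step.
html = """
<h1 class="recipe-title">One-Pot Mac and Cheese</h1>
<ul><li class="ingredient"><span>2</span><span>tablespoons</span><span>unsalted butter</span></li></ul>
<ol><li class="step"><p><strong>Prepare the topping (optional):</strong>Melt the butter.</p></li></ol>
"""
parser = RecipeParser()
parser.feed(html)
parser.close()
print(json.dumps(parser.recipe, indent=2))
```

The same separator idea also restores the missing space after step headings such as “Prepare the topping (optional):”, since the heading usually sits in its own tag.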

My current focus is integrating the recipe JSON database into our Django web app. I am also going to start working on generating recipe titles for display in Pygame on the Raspberry Pis. Next steps include completing the integration of the recipe data with the Django web app and refining the display logic for recipe titles on the Raspberry Pi setup.
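Long titles may not fit on one line of the Raspberry Pi display, so the title display logic will probably need word wrapping. A minimal sketch, assuming greedy word wrapping is acceptable: `wrap_title` is a hypothetical helper that takes a `measure` callable returning a string's rendered width, so on the Pi it could be passed `lambda s: font.size(s)[0]` for a `pygame.font.Font`, while the example uses `len` as a character-count stand-in so it runs without Pygame.

```python
from typing import Callable, List


def wrap_title(title: str, max_width: int, measure: Callable[[str], int]) -> List[str]:
    """Greedily pack words into lines whose measured width fits max_width.

    A single word wider than max_width is kept on its own line rather than split.
    """
    lines: List[str] = []
    current = ""
    for word in title.split():
        candidate = f"{current} {word}" if current else word
        if current and measure(candidate) > max_width:
            lines.append(current)  # current line is full; start a new one
            current = word
        else:
            current = candidate
    if current:
        lines.append(current)
    return lines


# Character count stands in for pixel width so this runs without Pygame.
print(wrap_title("One-Pot Mac and Cheese", 12, len))  # ['One-Pot Mac', 'and Cheese']
```

Each returned line could then be drawn with `font.render(line, True, color)` and blitted at successive y offsets spaced by `font.get_linesize()`. Measuring with the actual font rather than counting characters matters because proportional fonts render strings of equal length at different widths.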

Example scraped recipe entry (raw output, before cleanup):

```json
{
  "title": "One-Pot Mac and Cheese",
  "image": "images/Simply-Recipes-One-Pot-Mac-Cheese-LEAD-4-b54f2372ddcc49ab9ad09a193df66f20.jpg",
  "ingredients": [
    "2tablespoonsunsalted butter",
    "24 (76g)Ritz crackers, crushed (about 1 cup plus 2 tablespoons)",
    "1/8teaspoonfreshlyground black pepper",
    "Pinchkosher salt",
    "1tablespoonunsalted butter",
    "1/2teaspoonground mustard",
    "1/2teaspoonfreshlyground black pepper, plus more to taste",
    "Pinchcayenne(optional)",
    "4cupswater",
    "2cupshalf and half",
    "1teaspoonkosher salt, plus more to taste",
    "1poundelbow macaroni",
    "4ouncescream cheese, cubed and at room temperature",
    "8ouncessharp cheddar cheese, freshly grated (about 2 packed cups)",
    "4ouncesMonterey Jack cheese, freshly grated (about 1 packed cup)"
  ],
  "steps": [
    {
      "description": "Prepare the topping (optional):Melt the butter in a 10-inch Dutch oven or other heavy, deep pot over medium heat. Add the crushed crackers, black pepper, and kosher salt and stir to coat with the melted butter. Continue to toast over medium heat, stirring often, until golden brown, 2 to 4 minutes.Transfer the toasted cracker crumbs to a plate to cool and wipe the pot clean of any tiny crumbs.Simply Recipes / Ciara Kehoe",
      "image": null
    },
    {
      "description": "Begin preparing the mac and cheese:In the same pot, melt the butter over medium heat. Once melted, add the ground mustard, pepper, and cayenne (if using). Stir to combine with the butter and lightly toast until fragrant, 15 to 30 seconds. Take care to not let the spices or butter begin to brown.Add the water, half and half, and kosher salt to the butter mixture and stir to combine. Bring the mixture to a boil over high heat, uncovered.Simply Recipes / Ciara KehoeSimply Recipes / Ciara Kehoe",
      "image": null
    },
    {
      "description": "Cook the pasta:Once boiling, stir in the elbow macaroni, adjusting the heat as needed to maintain a rolling boil (but not boil over). Continue to cook uncovered, stirring every minute or so, until the pasta is tender and the liquid is reduced enough to reveal the top layer of elbows, 6 to 9 minutes. The liquid mixture should just be visible around the edges of the pot, but still with enough to pool when you drag a spatula through the pasta. Remove from the heat.Simple Tip!Because the liquid is bubbling up around the elbows, it may seem like it hasn\u2019t reduced enough. To check, pull the pot off the heat, give everything a stir, and see what it looks like once the liquid is settled (this should happen in seconds).Simply Recipes / Ciara KehoeSimply Recipes / Ciara Kehoe",
      "image": null
    },
    {
      "description": "Add the cheeses:Add the cream cheese to the pasta mixture and stir until almost completely melted. Add the shredded cheddar and Monterey Jack and stir until the cheeses are completely melted and saucy.Simply Recipes / Ciara KehoeSimply Recipes / Ciara KehoeSimply Recipes / Ciara Kehoe",
      "image": null
    },
    {
      "description": "Season and serve:Taste the mac and cheese. Season with more salt and pepper as needed. Serve immediately topped with the toasted Ritz topping, if using.Leftover mac and cheese can be stored in an airtight container in the refrigerator for up to 5 days.Love the recipe? Leave us stars and a comment below!Simply Recipes / Ciara KehoeSimply Recipes / Ciara Kehoe",
      "image": null
    },
    {
      "description": "Dinners",
      "image": null
    },
    {
      "description": "Most Recent",
      "image": null
    },
    {
      "description": "Recipes",
      "image": null
    },
    {
      "description": "Easy Recipes",
      "image": null
    },
    {
      "description": "Comfort Food",
      "image": null
    }
  ],
  "author": "Kayla Hoang",
  "category": "Dinners"
}
```
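The post-processing described above could be sketched as one pass over each scraped entry. This is a hedged sketch built from what the sample entry shows, not a finished pipeline: `SITE_TAGS` and `FOOTERS` are hypothetical hand-maintained lists, `CREDIT_RE` assumes photo credits always look like “Simply Recipes / First Last”, and over-long steps are split at sentence boundaries (an assumed strategy). A single sentence that is still 20 words or longer is returned for manual rewording rather than cut mid-sentence.

```python
import re

MAX_WORDS = 19  # use case requirement: each step description under 20 words
# Hypothetical, hand-maintained list of category tags that were scraped as steps.
SITE_TAGS = {"Dinners", "Most Recent", "Recipes", "Easy Recipes", "Comfort Food"}
FOOTERS = ["Love the recipe? Leave us stars and a comment below!"]
# Assumes photo credits look like "Simply Recipes / First Last".
CREDIT_RE = re.compile(r"Simply Recipes / [A-Z][a-z]+ [A-Z][a-z]+")


def clean_description(text):
    """Remove footers and photo credits, then restore missing spaces."""
    for footer in FOOTERS:
        text = text.replace(footer, " ")
    text = CREDIT_RE.sub(" ", text)
    # "(optional):Melt" -> "(optional): Melt", "minutes.Transfer" -> "minutes. Transfer"
    text = re.sub(r"([:.!?])(?=[A-Z])", r"\1 ", text)
    return " ".join(text.split())


def split_step(text, max_words=MAX_WORDS):
    """Greedily pack whole sentences into chunks of at most max_words words."""
    chunks, current = [], []
    for sentence in re.split(r"(?<=[.!?])\s+", text):
        words = sentence.split()
        if current and len(current) + len(words) > max_words:
            chunks.append(" ".join(current))
            current = []
        current.extend(words)
    if current:
        chunks.append(" ".join(current))
    return chunks


def clean_recipe(recipe):
    """Return a cleaned copy of a scraped recipe and any steps still over the limit."""
    steps, too_long = [], []
    for step in recipe["steps"]:
        text = clean_description(step["description"])
        if not text or text in SITE_TAGS:
            continue  # drop empty entries and scraped category tags
        for chunk in split_step(text):
            steps.append({"description": chunk, "image": step.get("image")})
            if len(chunk.split()) > MAX_WORDS:
                too_long.append(chunk)  # one long sentence; needs manual rewording
    return {**recipe, "steps": steps}, too_long


# Abridged "Season and serve" step and one scraped tag from the sample entry.
sample = {
    "title": "One-Pot Mac and Cheese",
    "steps": [
        {"description": "Season and serve:Taste the mac and cheese. Season with more "
                        "salt and pepper as needed.Love the recipe? Leave us stars and "
                        "a comment below!Simply Recipes / Ciara KehoeSimply Recipes / Ciara Kehoe",
         "image": None},
        {"description": "Comfort Food", "image": None},
    ],
}
cleaned, too_long = clean_recipe(sample)
print(cleaned["steps"])
print(too_long)
```

Splitting at sentence boundaries keeps each instruction intact, whereas truncating at 19 words would silently drop actions. Anything reported in `too_long` still needs a human edit; in the sample entry, for instance, the opening sentence of the topping step is exactly 20 words once its missing space is restored.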