I have worked on the following this week:

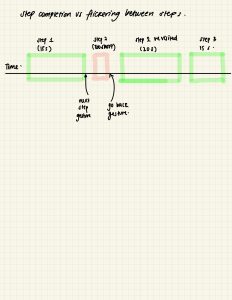

- I’ve been ironing out the design details for the post-cooking analytics feature, based on concerns raised during our last meeting, especially around how we detect when a step is completed and how we compute time per step. To reduce noise from accidental flicks, we already debounce each gesture using a timer: only gestures that persist for a minimum duration (e.g. more than 300 ms) are treated as intentional. If the user moves to the next step and then quickly goes back, it’s most likely a signal that they skipped ahead accidentally or were just reviewing the steps. In these cases, the step won’t be marked as completed unless they revisit it and spend a reasonable amount of time on it. I’ll implement logic that checks whether a user advanced and did not return within a short window, and treat that as a strong indicator that the step was read and completed. There are still edge cases to consider, for example:

- Time spent is low, but the user might still be genuinely done. To address this, I was thinking of tracking per-user average dwell time. If a user consistently spends less time but doesn’t flag confusion or go back to earlier steps, we mark them as ‘advanced’. If a user shows a gesture like thumbs up or never flags a step, we would treat it as implicit confidence even with a short duration.

- Frequent back-and-forth or double-checking. User behavior might seem erratic even though they are genuinely following instructions. For this, I was thinking I won’t log a step as completed until the user either a) proceeds linearly and spends the threshold time on it, or b) returns and spends more time on it. If a user elaborates on or flags a step before skipping it, we lower the confidence score but still log it as visited.

- The user pauses cooking mid-step, for example while something is in the oven, so a long time spent doesn’t always mean engagement. As we gather more data from a user, we plan to develop a more personalized model that combines gesture recognition, time metrics, and NLP analysis of flagged content.

- I’ve been working on integrating gesture recognition using the Pi camera and MediaPipe. The gesture classification pipeline runs entirely on the Pi. Each frame from the live video feed is passed through the MediaPipe model, which classifies gestures locally. Once a gesture is recognized, a debounce timer ensures it isn’t falsely triggered. Valid gestures are mapped to predefined byte signals, and I’m implementing the I2C communication so that this Pi (acting as the I2C master) writes the appropriate byte to the bus. The second Pi (the I2C slave) reads this signal and triggers the corresponding action, such as “show ingredients”, “next step”, or “previous step”. This was very new to me since I have never written I2C communication code before. It still has to be tested.

- I’m also helping Charvi debug the web app’s integration on the Pi. Currently, some images aren’t loading correctly and we are hitting a lot of git merge conflicts. I’ll primarily be helping with this tomorrow.
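
To make the step-completion idea from the analytics bullets more concrete, here is a rough sketch of how the "advanced and did not return within a short window" check might look. The `StepVisit` record, the function name, and both thresholds are placeholders I made up for illustration; none of the values are tuned, and this does not cover the per-user averaging or confidence scoring yet.

```python
from dataclasses import dataclass

# Illustrative thresholds only (assumptions, not tuned values).
RETURN_WINDOW_S = 5.0  # coming back within this window counts as a quick return
MIN_DWELL_S = 8.0      # minimum time on a step for it to count as read


@dataclass
class StepVisit:
    step: int          # recipe step index
    entered_at: float  # seconds since the session started
    left_at: float


def completed_steps(visits, return_window=RETURN_WINDOW_S, min_dwell=MIN_DWELL_S):
    """Return the set of step indices considered completed.

    A visit counts when the user spent at least `min_dwell` on the step and
    did not bounce back to it within `return_window` of leaving. A bounced
    step can still be completed by a later, longer visit.
    """
    done = set()
    for i, v in enumerate(visits):
        dwell = v.left_at - v.entered_at
        # The visit two positions later is where a "go forward, come back" lands.
        back = visits[i + 2] if i + 2 < len(visits) else None
        bounced = (
            back is not None
            and back.step == v.step
            and back.entered_at - v.left_at < return_window
        )
        if dwell >= min_dwell and not bounced:
            done.add(v.step)
    return done
```

With this version, a step the user skipped past and immediately came back to only counts once the return visit itself reaches the dwell threshold, which matches option b) above.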
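
For the gesture side, the debounce-then-send flow could look roughly like the sketch below. The byte codes, the slave address `0x08`, and the class and function names are all hypothetical; the real values aren't finalized. The bus write is passed in as a function so the logic can be tested without hardware. On the Pi, I'm assuming something like `smbus2.SMBus(1).write_byte` would be plugged in there, but that part is untested.

```python
# Hypothetical byte codes and slave address (placeholders, not the real mapping).
GESTURE_BYTES = {"next_step": 0x01, "previous_step": 0x02, "show_ingredients": 0x03}
SLAVE_ADDR = 0x08
HOLD_S = 0.3  # a gesture must persist this long (~300 ms) to count as intentional


class GestureDebouncer:
    """Emit a gesture once, after it has been held for `hold_s` seconds."""

    def __init__(self, hold_s=HOLD_S):
        self.hold_s = hold_s
        self.current = None  # label seen on the most recent frames
        self.since = 0.0     # timestamp when `current` first appeared
        self.fired = False   # whether `current` was already emitted

    def update(self, label, now):
        """Feed one per-frame classification; return a label to send, or None."""
        if label != self.current:
            # Gesture changed or disappeared: restart the timer.
            self.current, self.since, self.fired = label, now, False
            return None
        if label is not None and not self.fired and now - self.since >= self.hold_s:
            self.fired = True
            return label
        return None


def send_gesture(label, write_byte):
    """Map a gesture label to its byte and write it to the I2C bus."""
    code = GESTURE_BYTES.get(label)
    if code is not None:
        # write_byte(addr, value) stands in for the real bus call (assumption).
        write_byte(SLAVE_ADDR, code)
    return code
```

In the frame loop, each MediaPipe result would be passed to `update(label, time.monotonic())`, and any returned label would be handed to `send_gesture`. Holding a gesture fires it only once, so the second Pi shouldn't receive repeated "next step" bytes for a single gesture.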