What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

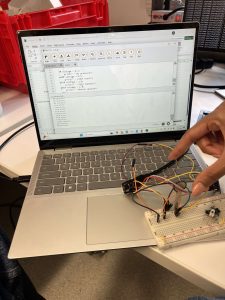

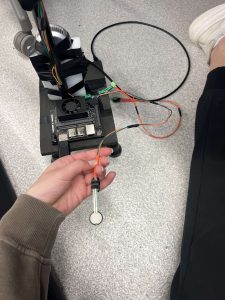

The most significant risk to the project is that the battery may not work with the Jetson Orin Nano and could possibly fry the board. We researched this extensively to make sure the battery will not damage the Jetson. In the unlikely event that it does, we have backed up all of our code on GitHub and have each individual component working separately.

• Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc.)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

• Provide an updated schedule if changes have occurred.

• This is also the place to put some photos of your progress or to brag about a component you got working.

There have been no changes to the existing design, and there have been no changes to the schedule.

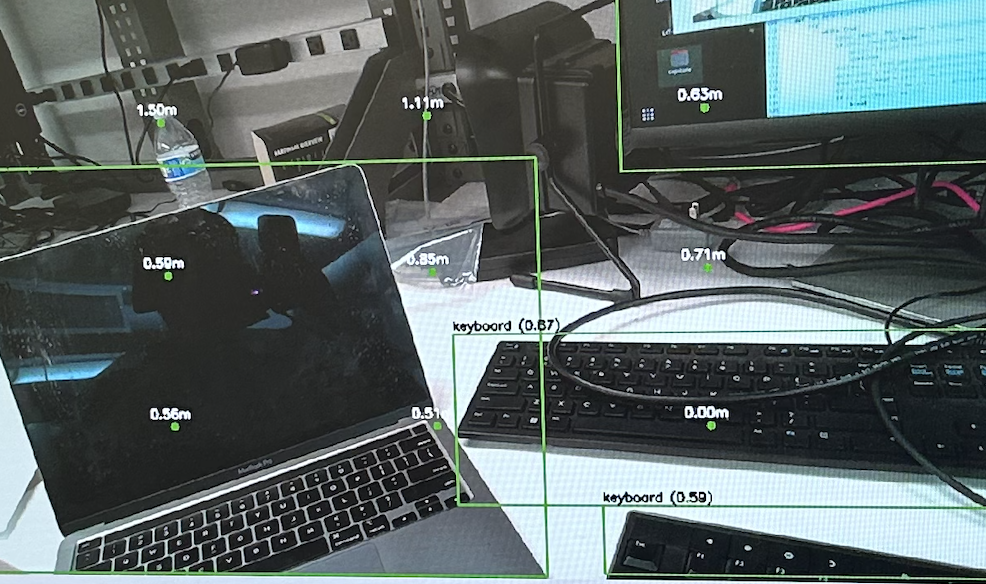

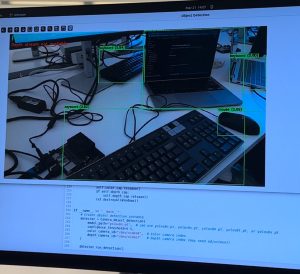

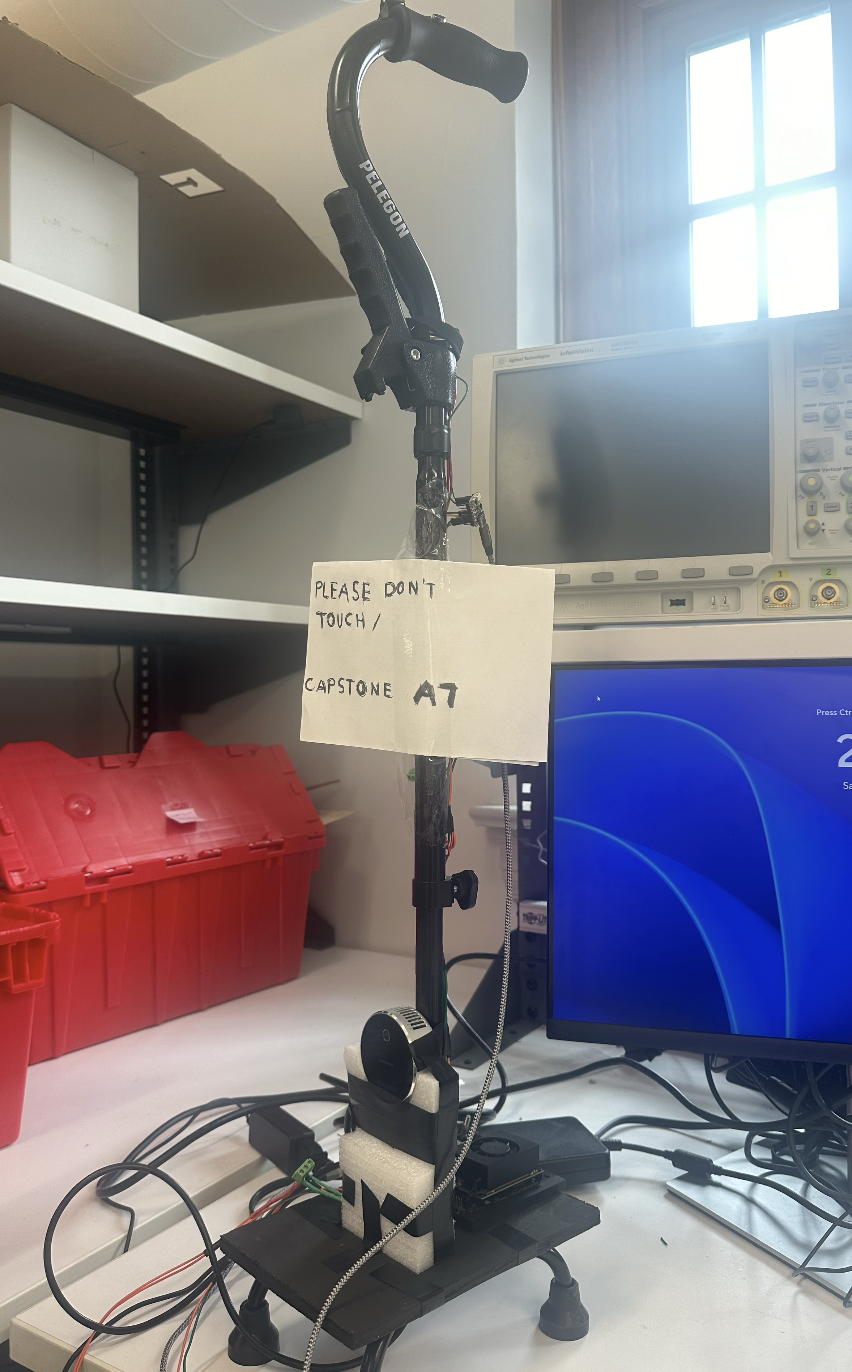

We have finished assembling our cane!

Now that you have some portions of your project built and are entering the verification and validation phase of your project, provide a comprehensive update on what tests you have run or are planning to run. In particular, how will you analyze the anticipated measured results to verify that your contribution to the project meets the engineering design requirements or the use case requirements?

Validation

We plan to test each main feature individually and then test the integrated system. Specifically, we will test object detection on 50 different objects, wall detection on 50 different walls, and the force-sensitive resistors (FSRs) on 25 different floor surfaces. For both the object and wall tests, the haptic feedback should trigger in at least 48 of the 50 trials (96%, which satisfies our ~95% target). For the 25 surfaces, we want the FSRs to detect the floor on at least 24 of them (96%).
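The sketch below shows how the pass thresholds above follow from a 95% target. It takes the smallest number of successful trials whose rate reaches the target, using integer arithmetic to avoid floating-point rounding. The function names and the `PLANNED_TESTS` table are hypothetical illustrations and are not part of our test code. The trial counts come from the plan above.

```python
def min_required_passes(n_trials: int, target_percent: int) -> int:
    """Smallest number of successful trials whose success rate is >= target_percent."""
    # Integer ceiling of n_trials * target_percent / 100 (avoids float rounding issues).
    return -(-n_trials * target_percent // 100)


def meets_requirement(passes: int, n_trials: int, target_percent: int = 95) -> bool:
    """True if the measured number of successful trials meets the target rate."""
    return passes >= min_required_passes(n_trials, target_percent)


# Hypothetical table of the planned tests: (feature name, number of trials).
PLANNED_TESTS = [
    ("object detection", 50),
    ("wall detection", 50),
    ("FSR floor detection", 25),
]

if __name__ == "__main__":
    for name, n in PLANNED_TESTS:
        need = min_required_passes(n, 95)
        print(f"{name}: need at least {need}/{n} successful trials ({need / n:.0%})")
```

With a 95% target, this yields the 48/50 and 24/25 thresholds stated above. After testing, each feature's measured pass count can be checked with `meets_requirement`.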

Additionally, we want the system's false-positive rate to be at most 5%.
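The report does not define how false positives are counted. The sketch below assumes one plausible definition: a false positive is a trial with no obstacle present in which the haptic feedback still fires, and the rate is taken over obstacle-free trials only. The `Trial` record and the function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Trial:
    """Hypothetical record of one test trial."""
    obstacle_present: bool  # ground truth set by the tester
    haptic_fired: bool      # whether the cane's haptic feedback triggered


def count_false_positives(trials: list[Trial]) -> tuple[int, int]:
    """Return (false positives, obstacle-free trials), assuming FP = fired with no obstacle."""
    negatives = [t for t in trials if not t.obstacle_present]
    false_positives = sum(1 for t in negatives if t.haptic_fired)
    return false_positives, len(negatives)


def meets_fp_requirement(trials: list[Trial], max_percent: int = 5) -> bool:
    """True if the false-positive rate is at most max_percent (integer comparison)."""
    fp, n = count_false_positives(trials)
    if n == 0:
        raise ValueError("need at least one obstacle-free trial")
    # fp / n <= max_percent / 100, rewritten to avoid floating-point division.
    return fp * 100 <= max_percent * n
```

Under this definition, 20 obstacle-free trials would allow at most one false positive. Trials with an obstacle present are excluded from the false-positive count.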