Risks:

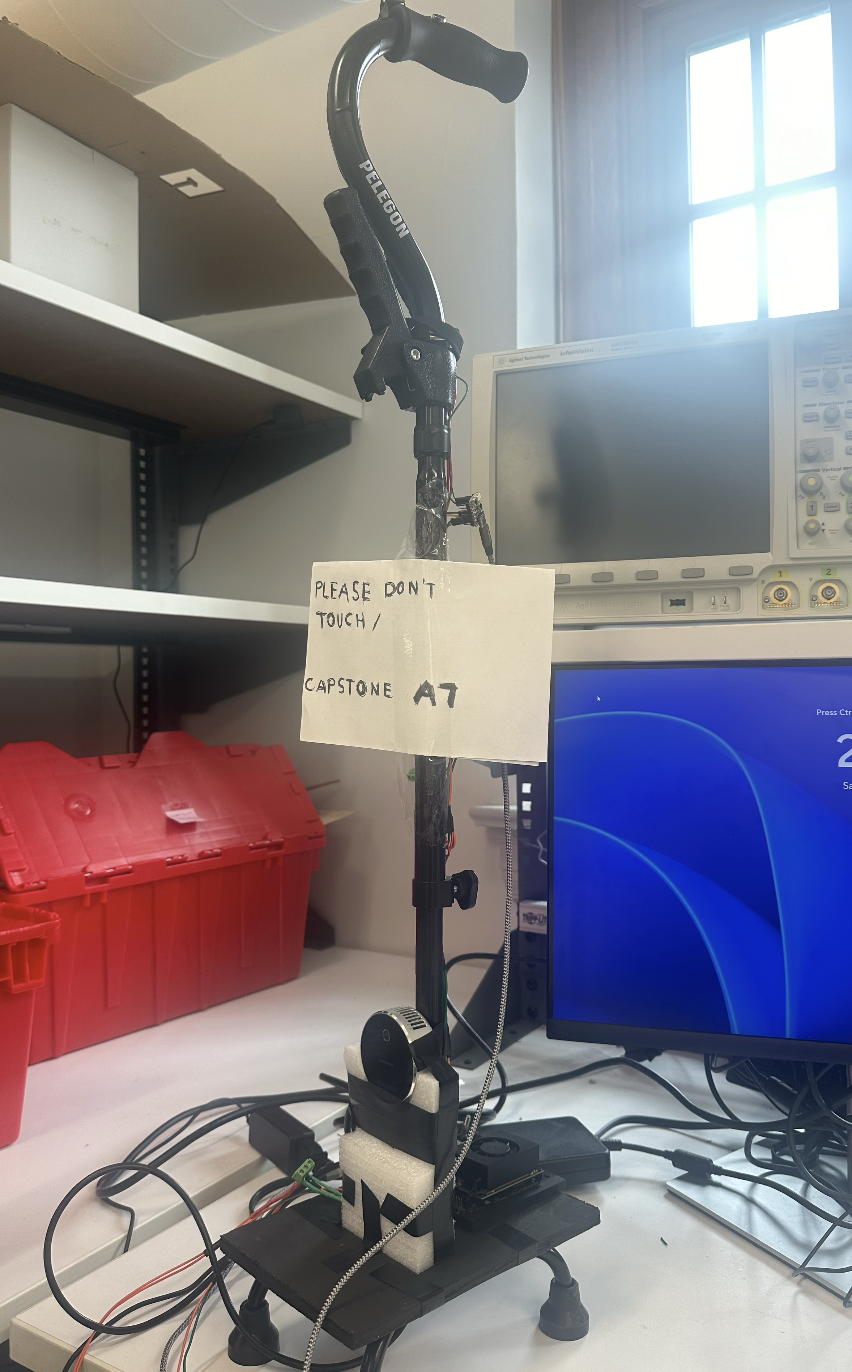

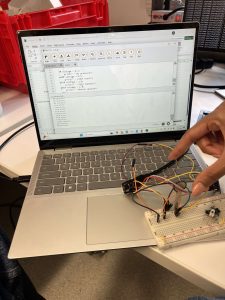

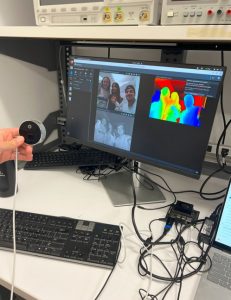

We have not yet attempted to connect the Jetson to the L515, so that integration remains a potential risk. We plan to attempt it this week so that we have ample time to troubleshoot if it does not work initially.

Changes:

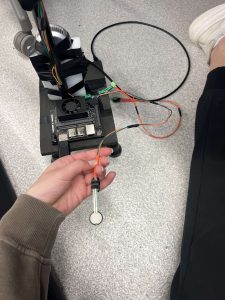

The only change we have made is a new power supply, prompted by our updated power calculations. We did not realize that our computer vision workload would require the Jetson to run in Super Mode, which draws an additional 10W beyond what we had originally planned for. We have since found a new power source that supplies the required 5V at 6A (30W).
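As a rough sanity check on the figures above, the supply's capacity follows from P = V × I, and the headroom is whatever remains after the planned load plus the extra 10W for Super Mode. The sketch below is only illustrative: the function names are our own, and `PLANNED_LOAD_W` is a hypothetical placeholder, since the original power budget is not stated in this report.

```python
def supply_power_w(voltage_v: float, current_a: float) -> float:
    """Maximum power a DC supply can deliver, from P = V * I."""
    return voltage_v * current_a


def headroom_w(supply_w: float, planned_load_w: float, extra_load_w: float) -> float:
    """Power left over after the planned load and any extra load are met."""
    return supply_w - (planned_load_w + extra_load_w)


# Values taken from the report: a 5 V, 6 A supply and +10 W for Super Mode.
SUPPLY_W = supply_power_w(5.0, 6.0)
SUPER_MODE_EXTRA_W = 10.0

# HYPOTHETICAL placeholder: the originally planned load is not given in the report.
PLANNED_LOAD_W = 15.0

if __name__ == "__main__":
    margin = headroom_w(SUPPLY_W, PLANNED_LOAD_W, SUPER_MODE_EXTRA_W)
    print(f"Supply capacity: {SUPPLY_W:.1f} W")
    print(f"Headroom under the assumed load: {margin:.1f} W")
```

A negative headroom would mean the supply is undersized for the assumed load; substituting the real planned load for the placeholder gives the actual margin.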

Part A was written by Maya, Part B by Kaya, and Part C by Cynthia.

Part A: Our cane addresses a global need for increased accessibility and independence for individuals with visual impairments. Around the world, millions of visually impaired people face mobility challenges that hinder their ability to safely navigate unfamiliar environments. The need for better mobility tools spans urban areas, rural villages, and developing regions, so it is not limited to any one country or region. Our design considers adaptability to different terrains and cultures so that the cane can be valuable in settings ranging from crowded malls to personal homes. By enhancing mobility and safety for people with visual impairments on a global scale, the product contributes to broader goals of accessibility, inclusivity, and equal opportunity.

Part B: Our cane accounts for the fact that different cultures have varying perceptions of disability, independence, and accessibility. In communities with strong traditions of communal living, a single technologically advanced cane draws less attention, which helps users integrate seamlessly while maintaining their independence. Additionally, the haptic feedback system produces no noise, further reducing the attention the device attracts. By considering these cultural factors, our solution aims for greater acceptance and integration into various societies.

Part C: We designed CurbAlert with environmental factors in mind, both how the device might disturb the environment around the user and how it interacts with that environment. Specifically, we chose haptic feedback so that the device notifies only the user, without creating extra noise or light or disturbing the surrounding environment or people. Our object detection algorithm is designed to detect hazards without physically interacting with the user’s environment, so the cane contacts nothing besides the ground and the user’s hand. Finally, our prototype will be robust and rechargeable, so it produces no additional waste and a user will need only one unit. By being considerate of the surrounding environment, CurbAlert is designed to be eco-friendly.