Accomplishments this week:

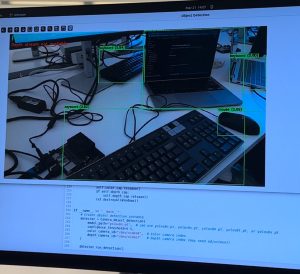

This week I spent most of my time debugging the fine-tuning code intended to make our system faster. The changes did not perform as expected, so further debugging is needed; the current model still works well but is slightly laggy. I also worked with Kaya to integrate wall detection with our code and send the correct response to the user.

Reflection on schedule:

We are on schedule, but because of the lag our project will likely have lower accuracy than our design requirement specifies.

Plans for next week:

Testing and verification, further debugging, and starting our final report if we have time.

Verification:

I will focus on hazard and stair detection testing.

I will test the model (after removing the frame display, which has been slowing the program down) by analyzing the distance and location accuracy of detected objects, whether hazards are consistently identified and non-hazards consistently ignored, and, with Maya, the overall latency of the system from detection to user response. I will perform the same analysis for the stairs hazard, and additionally measure how accurately the stairs class is classified. Note that I will not test the accuracy of specific object classifications, because the response to an object that poses a hazard depends on its overall position and size, not on what specific object it is.

For hazard detection, I will perform an equal number of tests on large indoor items (such as tables and chairs), smaller items that should be detected (such as a laptop), and insignificant objects (such as flooring changes) to ensure false positives are not occurring. I will record true positives, false positives, and false negatives (missed hazards), aiming for a true positive rate of at least 90% and a false positive rate of no more than 10% across these tests. I will also measure the latency from visual detection to haptic response with Maya, expecting a response time under 1 second for real-time feedback.
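As a rough sketch of how these tallies could be turned into pass/fail checks, the snippet below computes the two rates and compares them, together with the latency samples, against the targets above. The class and function names are hypothetical and not part of our codebase. It also assumes that true negatives (insignificant objects correctly ignored) are logged, since the false positive rate needs them in its denominator.

```python
from dataclasses import dataclass


@dataclass
class HazardTally:
    """Counts from one batch of hazard tests (hypothetical structure)."""
    tp: int  # hazards correctly flagged
    fp: int  # non-hazards incorrectly flagged
    fn: int  # hazards missed
    tn: int  # non-hazards correctly ignored (assumed to be logged too)

    def true_positive_rate(self) -> float:
        # Share of real hazards that were detected
        return self.tp / (self.tp + self.fn)

    def false_positive_rate(self) -> float:
        # Share of non-hazards that were wrongly flagged
        return self.fp / (self.fp + self.tn)


def meets_targets(tally, latencies_s, min_tpr=0.90, max_fpr=0.10, max_latency_s=1.0):
    """Return True if the rates and every latency sample meet the targets."""
    return (
        tally.true_positive_rate() >= min_tpr
        and tally.false_positive_rate() <= max_fpr
        and all(t < max_latency_s for t in latencies_s)
    )


# Made-up numbers, for illustration only (not real results)
tally = HazardTally(tp=18, fp=1, fn=2, tn=9)
passed = meets_targets(tally, latencies_s=[0.4, 0.8])
```

Requiring every latency sample to be under the limit is the strictest reading of the 1 second target; checking the mean or a percentile instead would be a looser alternative.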

For stair detection, I will test on different staircases, single-step elevation changes, and flat surfaces used as negative controls (to ensure stairs are not falsely detected). Each group will be tested under varied indoor lighting and angles. The stair classification model will be evaluated on binary detection (stair vs. not stair). I aim to achieve at least 90% stair detection accuracy and 84% accuracy in distinguishing stairs from walls and other obstacles.
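One simple way to score the binary stair results per test group is sketched below. The group names, the trial format, and the function name are assumptions made for illustration; the actual logging format has not been decided.

```python
from collections import defaultdict


def accuracy_by_group(trials):
    """Compute the fraction of correct stair/not-stair predictions per group.

    trials: iterable of (group, is_stair_truth, is_stair_predicted) tuples.
    """
    counts = defaultdict(lambda: [0, 0])  # group -> [correct, total]
    for group, truth, predicted in trials:
        counts[group][0] += int(truth == predicted)
        counts[group][1] += 1
    return {group: correct / total for group, (correct, total) in counts.items()}


# Made-up trial log, for illustration only (not real results)
trials = [
    ("staircase", True, True),
    ("staircase", True, False),
    ("flat", False, False),
    ("flat", False, False),
]
per_group = accuracy_by_group(trials)
```

Breaking accuracy out by group makes it easier to see whether misses come from the staircases themselves or from the negative controls.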