Accomplishments this week:

This week, I wrote the code for the FSRs (force-sensitive resistors) and soldered all of the connections together, which means our cane assembly is mostly complete. This included everything for the haptics, plus both FSRs and their connections to the QT Py. My code currently prints whether each pressure pad is pressed or released, and we are now working to integrate these readings with the computer vision.

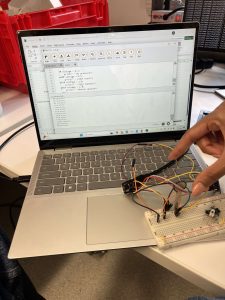

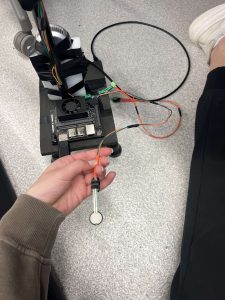

The left image shows the output we get when pressing and releasing the FSRs. The right image shows how all of our wiring is set up on the cane.

Reflection on schedule:

We made a lot of important progress this week, and everything other than our power supply has finally come together. According to our schedule, the entire cane should be complete by Wednesday, when we should begin usability testing. I think we are on track, as long as the barrel jack converter we ordered fixes our power issues.

Plans for next week:

Over the next week, I will be working on the power supply, and we will begin our usability testing. We will also begin working on our final presentation and report!

Verification:

FSRs:

- To verify the FSR system, I applied various pressures to the cane while logging voltage readings and observing whether the FSRs responded correctly to pressing and lifting.

- A threshold voltage of 1 V was chosen to distinguish between cane contact and non-contact, based on real-world walking pressure tests.

- When the voltage exceeds the threshold, the QT Py sends a serial signal “ON” to the Jetson to indicate ground contact and trigger the computer vision script.

- When the cane is lifted and pressure is removed, the QT Py sends “OFF”, and the Jetson pauses the object detection process to conserve resources.

- I will also be testing the accuracy and responsiveness of this signal transition by walking with the cane and confirming that the system correctly activates only when the cane is placed on the ground.
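The threshold logic described above can be sketched roughly as follows. This is not our actual QT Py firmware, just a hardware-free illustration in plain Python. The function name `contact_messages`, the class `DetectionGate`, and the sample voltages are made up for the example, and it assumes a message is sent only when the contact state changes and that the cane starts lifted.

```python
from typing import Iterable, Iterator

CONTACT_THRESHOLD_V = 1.0  # 1 V threshold chosen from walking pressure tests


def contact_messages(
    voltages: Iterable[float], threshold: float = CONTACT_THRESHOLD_V
) -> Iterator[str]:
    """Yield "ON" or "OFF" each time the contact state changes.

    Assumption: a message is emitted only on a transition, not on every
    reading, and the cane starts lifted (no contact).
    """
    in_contact = False
    for voltage in voltages:
        now_in_contact = voltage > threshold
        if now_in_contact != in_contact:
            in_contact = now_in_contact
            yield "ON" if now_in_contact else "OFF"


class DetectionGate:
    """Hypothetical stand-in for the Jetson side: tracks whether the
    object detection script should currently be running."""

    def __init__(self) -> None:
        self.running = False

    def handle(self, message: str) -> None:
        text = message.strip().upper()
        if text == "ON":
            self.running = True   # cane on the ground: run detection
        elif text == "OFF":
            self.running = False  # cane lifted: pause to save resources
        # Any other message is ignored.


if __name__ == "__main__":
    # Made-up voltage readings: lifted, pressed, lifted, pressed again.
    samples = [0.2, 0.4, 1.6, 2.1, 1.8, 0.3, 0.1, 1.2]
    gate = DetectionGate()
    for message in contact_messages(samples):
        gate.handle(message)
        print(message, "-> detection running:", gate.running)
```

Sending only on transitions keeps the serial traffic small, but a real implementation may also need debouncing around the threshold, which this sketch leaves out.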

HAPTICS:

- The haptics produce the correct feedback for each obstacle type sent to them. We verified this by manually creating each obstacle type and confirming that the correct response was output.
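A check like this could also be automated. The sketch below is only an illustration: the obstacle names come from our print statements (object, wall, stair), but the pattern values in `EXPECTED_PATTERNS` are placeholders, not our real vibration patterns, and `verify_haptics` and `fake_haptics` are hypothetical helpers.

```python
from typing import Callable, Dict, List

# Placeholder pulse durations in seconds; NOT the real patterns on the cane.
EXPECTED_PATTERNS: Dict[str, List[float]] = {
    "object": [0.2],
    "wall": [0.2, 0.2],
    "stair": [0.5],
}


def verify_haptics(
    respond: Callable[[str], List[float]],
    expected: Dict[str, List[float]],
) -> Dict[str, bool]:
    """Send each obstacle type to `respond` and record whether the
    returned pattern matches the expected one."""
    return {kind: respond(kind) == pattern for kind, pattern in expected.items()}


def fake_haptics(kind: str) -> List[float]:
    """Hypothetical stand-in for the haptic driver, used for the demo."""
    return EXPECTED_PATTERNS[kind]


if __name__ == "__main__":
    for kind, ok in verify_haptics(fake_haptics, EXPECTED_PATTERNS).items():
        print(kind, "PASS" if ok else "FAIL")
```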

OVERALL:

- We consider the QT Py subsystem verified because it correctly detects cane contact, communicates with the Jetson, and produces haptic feedback reliably. We judged it reliable because the feedback matches the on-screen print statements for object, wall, and stair locations.