Accomplishments this week:

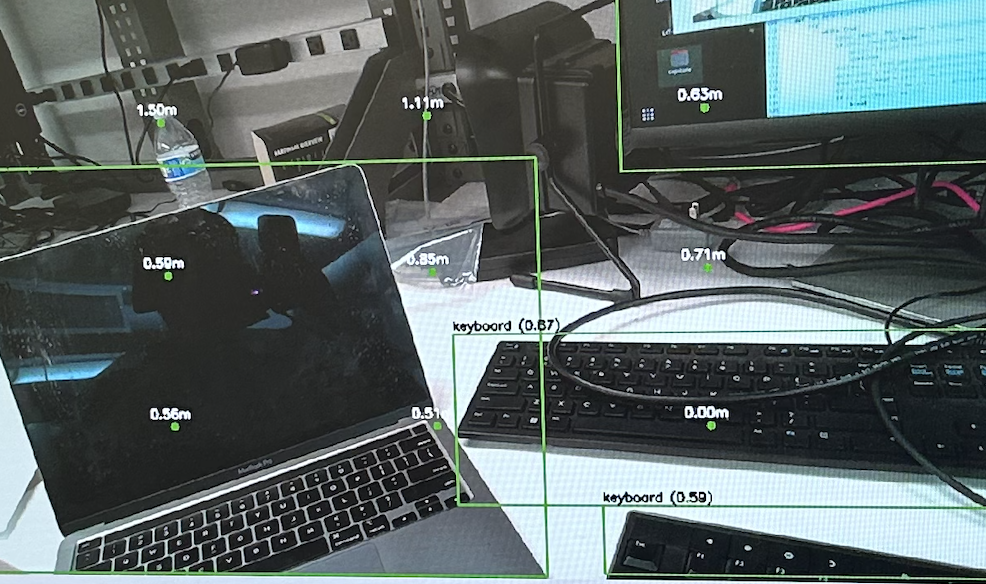

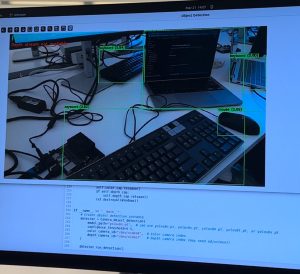

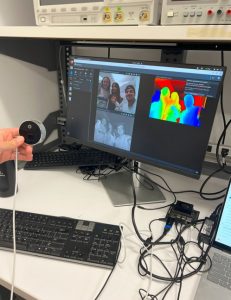

This week, I integrated the haptics with the Jetson, cut the wood and other materials for our cane, and helped Cynthia work out the specific cases in which objects should be detected and the proper haptic signal triggered.

Reflection on schedule:

We made a lot of progress as a group this week and completed several big steps. We are well prepared for the interim demo and on track with our schedule.

Plans for next week:

Over the next week, I will test the power and current draw of each component to make sure they are all safe before plugging them into the portable charger. If time allows, I will also set up the pressure pads, but that is a lower priority than measuring power consumption.