What did you personally accomplish this week on the project?

I completed the majority of the testing, and most performance metrics were met as outlined in the final presentation. We are currently finalizing the integration between the gesture recognition UI and my code. Because of build issues with OpenPose on Ubuntu 22.04, we decided to move development to a computer with better package compatibility. With the final deadline approaching, we agreed this was the more efficient path.

Is your progress on schedule or behind?

We are slightly behind schedule but very close to completion.

If you are behind, what actions will be taken to catch up to the project schedule?

We are prioritizing final integration tasks and adjusting development platforms to avoid technical delays. I am actively working on completing the integration to ensure we meet the project deadline.

What deliverables do you hope to complete in the next week?

- Final integration of the gesture recognition UI and my modules.
- Final polish and debugging to ensure a complete, working product.

Unit Tests and Overall System Tests Conducted:

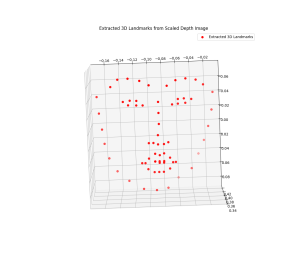

3D Face Model Generation Delay Test:

Objective: Measure the time taken to generate a sparse 3D facial model from detected 2D landmarks and depth input.

Method: After facial landmark detection, the 3D point cloud was reconstructed using depth information. Timing measurements were taken from the start of reconstruction to the output of the 3D model.
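A minimal sketch of the reconstruction step is shown below. It assumes a pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy` and a depth map aligned to the color image with depth in meters. The names `backproject_landmarks`, `timed_reconstruction`, and the `depth_at` lookup are hypothetical, and the real pipeline may sample or filter depth differently. The timer covers the same interval as the measurement above, from the start of reconstruction to the output of the model.

```python
import time
from typing import Callable, List, Optional, Tuple

Point2D = Tuple[float, float]
Point3D = Tuple[float, float, float]


def backproject_landmarks(
    landmarks: List[Point2D],
    depth_at: Callable[[int, int], float],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Optional[Point3D]]:
    """Lift 2D landmarks (pixels) to 3D camera coordinates with a pinhole model.

    Assumption: depth_at(u, v) returns depth in meters at integer pixel (u, v)
    and 0.0 where the sensor has no valid reading.
    """
    points: List[Optional[Point3D]] = []
    for u, v in landmarks:
        z = depth_at(int(round(u)), int(round(v)))
        if z <= 0.0:
            # Missing depth: keep a placeholder so indices still match the 2D landmarks.
            points.append(None)
            continue
        points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


def timed_reconstruction(landmarks, depth_at, intrinsics):
    """Return the sparse 3D model and the reconstruction time in milliseconds."""
    start = time.perf_counter()
    model = backproject_landmarks(landmarks, depth_at, *intrinsics)
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return model, elapsed_ms


if __name__ == "__main__":
    # Synthetic input: a flat surface 0.6 m from the camera and three landmarks.
    intrinsics = (600.0, 600.0, 320.0, 240.0)  # hypothetical fx, fy, cx, cy
    landmarks = [(320.0, 240.0), (380.0, 240.0), (320.0, 300.0)]
    model, ms = timed_reconstruction(landmarks, lambda u, v: 0.6, intrinsics)
    print(model)
    print(f"reconstruction: {ms:.3f} ms (threshold 50 ms)")
```

Returning `None` for landmarks without valid depth keeps the 3D list index-aligned with the 2D landmarks. This matters when the two lists are later paired as correspondences for pose estimation.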

Result: Approximately 20 milliseconds to generate a model from sparse landmarks.

Expectation: Less than or equal to 50 milliseconds for acceptable responsiveness.

Outcome: Passed — face model generation is fast and within real-time constraints.

6DoF Head Pose Estimation Test:

Objective: Validate the speed and accuracy of estimating head pose (rotation and translation) from 2D–3D correspondences.

Method: The solvePnP function was used with the reconstructed sparse 3D landmarks to estimate pose. Timing was measured per frame.
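Per-frame timing like this can be collected with a small harness that wraps any per-frame function and summarizes the results. This is a sketch: `measure_latency`, `summarize`, and `LatencySummary` are hypothetical helpers. In the actual pipeline, the wrapped function would be the per-frame call to OpenCV's `solvePnP` on the 2D-3D correspondences. Checking the worst frame as well as the mean helps confirm that the 150 ms limit holds on every frame.

```python
import math
import statistics
import time
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class LatencySummary:
    mean_ms: float
    p95_ms: float
    max_ms: float
    passed: bool  # True only if every measured frame is within the threshold


def measure_latency(fn: Callable[[object], object], frames: Iterable[object]) -> List[float]:
    """Call fn once per frame and record each call's wall-clock time in milliseconds."""
    timings_ms: List[float] = []
    for frame in frames:
        start = time.perf_counter()
        fn(frame)
        timings_ms.append((time.perf_counter() - start) * 1000.0)
    return timings_ms


def summarize(timings_ms: List[float], threshold_ms: float) -> LatencySummary:
    """Mean, nearest-rank 95th percentile, and worst case, checked against a threshold."""
    if not timings_ms:
        raise ValueError("no timings recorded")
    ordered = sorted(timings_ms)
    p95 = ordered[math.ceil(0.95 * len(ordered)) - 1]
    return LatencySummary(
        mean_ms=statistics.mean(ordered),
        p95_ms=p95,
        max_ms=ordered[-1],
        passed=ordered[-1] <= threshold_ms,
    )


if __name__ == "__main__":
    # Stand-in per-frame workload; in the real pipeline this would be the pose solve.
    timings = measure_latency(lambda frame: sum(i * i for i in range(1000)), range(200))
    print(summarize(timings, threshold_ms=150.0))
```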

Result: Approximately 2 milliseconds per pose estimation using 68 landmarks.

Expectation: Less than or equal to 150 milliseconds to ensure responsiveness.

Outcome: Passed — pose estimation is extremely fast, even with sparse or noisy data.

AR Filter Rendering Frame Rate Test:

Objective: Ensure that the AR rendering pipeline operates at a real-time frame rate.

Method: The rendering frame rate was measured during typical operation with overlays (e.g., glasses or makeup masks) being applied based on face tracking.
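One way to obtain a frame-rate figure is to record a timestamp whenever a frame finishes rendering, then divide the number of frame intervals by the elapsed time. The sketch below assumes timestamps in seconds from a monotonic clock such as `time.perf_counter()`. The helpers `fps_from_timestamps` and `worst_frame_fps` are hypothetical and not part of the project's code. Reporting the worst single frame alongside the average shows whether the 15 FPS floor holds throughout, not only on average.

```python
import time
from typing import List


def fps_from_timestamps(timestamps: List[float]) -> float:
    """Average frame rate: number of frame intervals divided by total elapsed seconds."""
    if len(timestamps) < 2:
        raise ValueError("need at least two timestamps")
    elapsed = timestamps[-1] - timestamps[0]
    if elapsed <= 0:
        raise ValueError("timestamps must be increasing")
    return (len(timestamps) - 1) / elapsed


def worst_frame_fps(timestamps: List[float]) -> float:
    """Worst-case instantaneous frame rate, from the longest gap between consecutive frames."""
    if len(timestamps) < 2:
        raise ValueError("need at least two timestamps")
    longest_gap = max(b - a for a, b in zip(timestamps, timestamps[1:]))
    if longest_gap <= 0:
        raise ValueError("timestamps must be increasing")
    return 1.0 / longest_gap


if __name__ == "__main__":
    stamps: List[float] = []
    for _ in range(120):
        time.sleep(0.001)  # placeholder for rendering one frame with the overlay applied
        stamps.append(time.perf_counter())
    print(f"average {fps_from_timestamps(stamps):.1f} FPS, "
          f"worst frame {worst_frame_fps(stamps):.1f} FPS (floor 15 FPS)")
```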

Result: Frame rate of about 160 FPS under standard conditions.

Expectation: Minimum 15 FPS for real-time interaction.

Outcome: Passed — rendering performance is well above the required threshold.

Movement Drift and Jitter Test:

Objective: Evaluate the stability of 3D tracking over natural head movements.

Method: Users were asked to move their heads smoothly across a reasonable range (side-to-side, up/down). The stability of the projected landmarks and overlays was recorded and analyzed.
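The report does not specify how pixel jitter was computed. One plausible definition, shown here as an assumption and not as the method actually used, smooths each landmark's projected 2D track with a centered moving average. It then measures each frame's Euclidean distance from that smoothed path. Intentional head motion is largely absorbed by the average, which leaves the frame-to-frame shake. The function `jitter_pixels` and its default window of 5 frames are hypothetical.

```python
import math
import random
from typing import List, Tuple

Point2D = Tuple[float, float]


def jitter_pixels(track: List[Point2D], window: int = 5) -> Tuple[float, float]:
    """Return (mean, max) pixel deviation of a landmark track from its moving average.

    Each interior frame is compared with the centered average of the `window`
    frames around it; frames too close to either end are skipped so the
    average is never lopsided.
    """
    if window < 3 or window % 2 == 0:
        raise ValueError("window must be an odd integer >= 3")
    if len(track) < window:
        raise ValueError("track is shorter than the smoothing window")
    half = window // 2
    deviations: List[float] = []
    for i in range(half, len(track) - half):
        neighborhood = track[i - half:i + half + 1]
        mean_x = sum(p[0] for p in neighborhood) / window
        mean_y = sum(p[1] for p in neighborhood) / window
        deviations.append(math.dist(track[i], (mean_x, mean_y)))
    return sum(deviations) / len(deviations), max(deviations)


if __name__ == "__main__":
    # Synthetic side-to-side sweep of one landmark with small random shake added.
    rng = random.Random(0)
    track = [(100.0 + 4.0 * i + rng.uniform(-2, 2), 240.0 + rng.uniform(-2, 2))
             for i in range(60)]
    mean_px, max_px = jitter_pixels(track)
    print(f"mean jitter {mean_px:.2f} px, max {max_px:.2f} px (limit 5 px)")
```

To evaluate a whole face, the function would run once per landmark, and the largest per-landmark maximum would be compared against the 5-pixel limit. A centered average assumes roughly smooth motion within the window, so very fast or sharply curving movements would register as slightly higher jitter.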

Result: Minor visible jitter, with deviations of approximately 3–5 pixels.

Expectation: Drift and jitter less than or equal to 5 pixels.

Outcome: Passed — slight but acceptable drift observed.

Pose Estimation Accuracy Test:

Objective: Measure the accuracy of 6DoF head pose estimation under varying head movements and angles.

Method: The estimated pose was compared against known or visually aligned reference poses. The pixel deviation of the reprojected landmarks was used as a proxy for pose error.
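Using landmark reprojection deviation as a proxy for pose error means projecting the 3D model points with the estimated rotation and translation, then measuring the pixel distance to the reference 2D landmarks. The sketch below assumes an undistorted pinhole camera and a rotation given as a 3×3 matrix. OpenCV's `solvePnP` returns a rotation vector, which `cv2.Rodrigues` converts to a matrix. The helper names `project` and `reprojection_error_px`, as well as the example pose in the usage block, are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]
Point3D = Tuple[float, float, float]
Point2D = Tuple[float, float]


def project(point: Point3D, R: Matrix3, t: Point3D,
            fx: float, fy: float, cx: float, cy: float) -> Point2D:
    """Project a 3D model point: camera coords = R @ X + t, then pinhole division (no distortion)."""
    xc = sum(R[0][j] * point[j] for j in range(3)) + t[0]
    yc = sum(R[1][j] * point[j] for j in range(3)) + t[1]
    zc = sum(R[2][j] * point[j] for j in range(3)) + t[2]
    if zc <= 0:
        raise ValueError("point lies behind the camera")
    return (fx * xc / zc + cx, fy * yc / zc + cy)


def reprojection_error_px(model: List[Point3D], reference: List[Point2D],
                          R: Matrix3, t: Point3D,
                          intrinsics: Tuple[float, float, float, float]) -> Tuple[float, float]:
    """Return (mean, max) pixel distance between reprojected and reference landmarks."""
    if not model or len(model) != len(reference):
        raise ValueError("model and reference must be non-empty and the same length")
    errors = [math.dist(project(X, R, t, *intrinsics), ref)
              for X, ref in zip(model, reference)]
    return sum(errors) / len(errors), max(errors)


if __name__ == "__main__":
    # Reference landmarks come from a "true" pose; the estimate is off by 2 degrees of yaw.
    K = (600.0, 600.0, 320.0, 240.0)
    t = (0.0, 0.0, 0.6)
    model = [(-0.03, -0.02, 0.0), (0.03, -0.02, 0.0), (0.0, 0.03, 0.01)]
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    reference = [project(X, identity, t, *K) for X in model]
    a = math.radians(2.0)
    yaw = [[math.cos(a), 0.0, math.sin(a)], [0.0, 1.0, 0.0], [-math.sin(a), 0.0, math.cos(a)]]
    mean_px, max_px = reprojection_error_px(model, reference, yaw, t, K)
    print(f"mean {mean_px:.2f} px, max {max_px:.2f} px (target <= 5 px)")
```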

Result: In some cases, pose errors reached approximately 10–15 pixels.

Expectation: Less than or equal to 5 pixels for optimal accuracy.

Outcome: Partially Passed; some instability was observed, especially at extreme head angles or under occlusion, which highlights an area for improvement.