Anna’s Status Report for Apr 26

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

I finished setting up the UI environment so that I can begin building the UI itself.

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I am a little behind. I will finish the UI this week.

What deliverables do you hope to complete in the next week?

I hope to finish my UI.

Team’s Status Report for 4/19

What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

One major risk is the unreliability of gesture recognition, as OpenPose struggles with noise and temporal consistency. To address this, the team pivoted to a location-based input model, where users interact with virtual buttons by holding their hands in place. This approach improves reliability and user feedback, with potential refinements such as additional smoothing filters if needed.
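To make the location-based input model concrete, here is a minimal Python sketch of a dwell-to-activate virtual button, assuming the hand position arrives as a 2D keypoint in screen pixels (for example, a wrist keypoint from OpenPose). It also shows one possible smoothing filter, an exponential moving average. The class names, the `alpha` weight, and the 800 ms dwell time are hypothetical illustration values, not our actual implementation.

```python
from dataclasses import dataclass


@dataclass
class Rect:
    """Axis-aligned virtual button region in screen pixels."""
    left: float
    top: float
    width: float
    height: float

    def contains(self, x: float, y: float) -> bool:
        return (self.left <= x <= self.left + self.width
                and self.top <= y <= self.top + self.height)


class DwellButton:
    """Fires once when the smoothed hand position stays inside the button
    for at least dwell_ms (hypothetical parameters, for illustration only)."""

    def __init__(self, area: Rect, alpha: float = 0.5, dwell_ms: float = 800.0):
        self.area = area
        self.alpha = alpha          # EMA weight on the newest sample
        self.dwell_ms = dwell_ms
        self.smoothed = None        # (x, y) after exponential smoothing
        self.held_ms = 0.0
        self.fired = False

    def update(self, x: float, y: float, dt_ms: float) -> bool:
        """Feed one hand sample; dt_ms is the time since the previous one.
        Returns True only on the frame where the dwell time is first reached."""
        if self.smoothed is None:
            self.smoothed = (x, y)
        else:
            sx, sy = self.smoothed
            a = self.alpha
            self.smoothed = (a * x + (1 - a) * sx, a * y + (1 - a) * sy)

        if not self.area.contains(*self.smoothed):
            # Hand left the button: reset so the next hold starts fresh.
            self.held_ms = 0.0
            self.fired = False
            return False

        self.held_ms += dt_ms
        if not self.fired and self.held_ms >= self.dwell_ms:
            self.fired = True       # fire once per continuous hold
            return True
        return False


button = DwellButton(Rect(100, 100, 80, 80), alpha=0.5, dwell_ms=800.0)
# Simulate a hand held still at (140, 140) at roughly 30 fps (33 ms per frame).
events = [button.update(140, 140, 33.0) for _ in range(40)]
print(events.index(True))  # frame on which the button fires
```

With the hand held still at about 30 fps, the button fires on frame index 24, once 825 ms have accumulated. A single-frame jitter just outside the button edge is absorbed by the smoothing instead of resetting the timer.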

Additionally, GPU performance issues could affect real-time AR overlays. Ongoing shader optimizations prioritize stability and responsiveness, with fallback rendering techniques as a contingency if improvements are insufficient.

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

No changes have been made.

Provide an updated schedule if changes have occurred.

This week, the team is doing full system integration, finalizing input event handling, and testing eye-tracking.

Anna’s Status Report for 4/19

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

I finished building the 2nd camera rig. I also finished setting up the UI so that I can now work on it.

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I am on track. I will finish the UI this week.

What deliverables do you hope to complete in the next week?

I hope to finish my UI.

Team’s Status Report for 4/12

What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

One major risk is the unreliability of gesture recognition, as OpenPose struggles with noise and temporal consistency. To address this, the team pivoted to a location-based input model, where users interact with virtual buttons by holding their hands in place. This approach improves reliability and user feedback, with potential refinements such as additional smoothing filters if needed.

System integration is also behind schedule due to incomplete subsystems. While slack time allows for adjustments, delays in dependent components remain a risk. To mitigate this, the team is refining individual modules and may use mock data for parallel development if necessary.

Finally, GPU performance issues could affect real-time AR overlays. Ongoing shader optimizations prioritize stability and responsiveness, with fallback rendering techniques as a contingency if improvements are insufficient.

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

Gesture-based input has been replaced with a location-based system due to unreliable pose recognition. While this requires UI redesign and new logic for button-based interactions, it improves usability and consistency. The team is expediting this transition to ensure thorough testing before integration.

Another key change is a focus on GPU optimization after identifying shader inefficiencies. This delays secondary features like dynamic resolution scaling but ensures smooth AR performance. Efforts will continue to balance visual quality and efficiency.

The PCB did not exactly match the electrical components, particularly the stepper motor driver being used. We had ordered a different type of stepper motor suited to our needs (running for long periods of time), but it required an alternative design, so we made new wire connections to be able to use it.

Provide an updated schedule if changes have occurred.

This week, the team is refining motion tracking, improving GPU performance, and finalizing the new input system. Next week, focus will shift to full system integration, finalizing input event handling, and testing eye-tracking once the camera rig is ready. While integration is slightly behind, a clear plan is in place to stay on track. We will begin integrating the camera rig that is ready while the second one is being built.

In particular, how will you analyze the anticipated measured results to verify your contribution to the project meets the engineering design requirements or the use case requirements?

We will use logs to check whether the latency between gesture recognition and camera movement exceeds 200 ms, and whether generating face models takes longer than 1 s.
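A minimal Python sketch of this log analysis, assuming a hypothetical log format of one `<timestamp_ms> <event>` pair per line and hypothetical event names (`gesture_recognized`, `camera_moved`, `face_model_start`, `face_model_done`). It pairs each end event with the most recent unmatched start event and reports the worst-case latency and the number of samples over each limit. The sample log is made up purely to exercise the code; it is not measured data.

```python
GESTURE_TO_CAMERA_LIMIT_MS = 200.0
FACE_MODEL_LIMIT_MS = 1000.0


def parse_log(lines):
    """Parse lines of the hypothetical form '<timestamp_ms> <event>'."""
    events = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        ts, name = line.split(maxsplit=1)
        events.append((float(ts), name))
    return events


def paired_latencies(events, start, end):
    """Pair each `end` event with the most recent unmatched `start` event
    and return the elapsed times in milliseconds."""
    latencies = []
    pending = None
    for ts, name in events:
        if name == start:
            pending = ts
        elif name == end and pending is not None:
            latencies.append(ts - pending)
            pending = None
    return latencies


def check(latencies, limit_ms):
    """Return (worst-case latency, number of samples over the limit)."""
    worst = max(latencies) if latencies else 0.0
    violations = sum(1 for lat in latencies if lat > limit_ms)
    return worst, violations


# Illustrative input only, not real measurements.
sample_log = """
0 gesture_recognized
150 camera_moved
1000 gesture_recognized
1230 camera_moved
2000 face_model_start
2850 face_model_done
""".splitlines()

events = parse_log(sample_log)
gesture = paired_latencies(events, "gesture_recognized", "camera_moved")
face = paired_latencies(events, "face_model_start", "face_model_done")
print(check(gesture, GESTURE_TO_CAMERA_LIMIT_MS))  # (230.0, 1)
print(check(face, FACE_MODEL_LIMIT_MS))            # (850.0, 0)
```

Reporting both the worst case and the violation count makes it easy to tell a single outlier apart from a systematic latency problem.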

Anna’s Status Report for 4/12

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

I found a way to mount the camera rig securely. I also got started on building the 2nd camera rig.

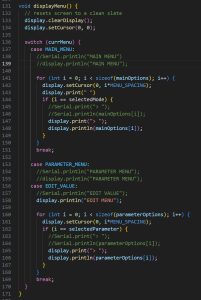

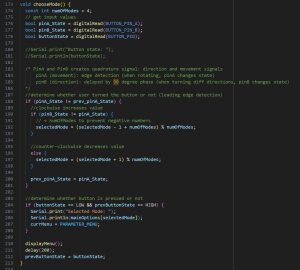

I also got all four stepper motors to work.

Steven and I also integrated gesture recognition with the camera rig for two stepper motors. Extending this to four stepper motors should not be a problem, since the command differs only slightly.

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I am on track. I will finish building the 2nd camera rig this week so that I can get started on the UI.

What deliverables do you hope to complete in the next week?

I hope to finish my 2nd camera rig and work on the UI.

How will you analyze the anticipated measured results to verify your contribution to the project meets the engineering design requirements or the use case requirements?

I will verify that my camera rig does not move linearly more than 11.8 inches (the width of the display) by including a stop feature that halts it when it reaches the distance limit. The same applies to the angle: a stop feature will make sure that it doesn’t turn beyond 90 degrees relative to the center. As for the 5 degree deviation requirement, the camera rig increments the distance or rotation little by little so that users control how far the camera moves, so we do not expect any deviation.
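The stop features can be sketched as a simple clamp on the commanded position and angle. The real logic would live in the Arduino code and count stepper steps; this Python sketch only mirrors the idea, assuming position 0 is one end of the 11.8 in track, angle 0 faces the center, and hypothetical increments of 0.25 in and 2 degrees per command. The class name is also hypothetical.

```python
MAX_TRAVEL_IN = 11.8      # display width: maximum linear travel
MAX_ANGLE_DEG = 90.0      # maximum rotation on either side of center


class CameraRigLimits:
    """Tracks the commanded position/angle and enforces stop limits
    (illustrative; the step sizes are assumptions)."""

    def __init__(self, step_in=0.25, step_deg=2.0):
        self.position_in = 0.0   # 0 = one end of the track
        self.angle_deg = 0.0     # 0 = facing the center
        self.step_in = step_in
        self.step_deg = step_deg

    def move(self, direction):
        """Advance one increment (+1 or -1), stopping at the travel limits.
        Returns True if the rig actually moved."""
        target = self.position_in + direction * self.step_in
        clamped = min(max(target, 0.0), MAX_TRAVEL_IN)
        moved = clamped != self.position_in
        self.position_in = clamped
        return moved

    def rotate(self, direction):
        """Rotate one increment (+1 or -1), stopping at +/-90 degrees.
        Returns True if the rig actually rotated."""
        target = self.angle_deg + direction * self.step_deg
        clamped = min(max(target, -MAX_ANGLE_DEG), MAX_ANGLE_DEG)
        moved = clamped != self.angle_deg
        self.angle_deg = clamped
        return moved


rig = CameraRigLimits()
moves = sum(rig.move(+1) for _ in range(100))
print(moves, rig.position_in)  # 48 11.8
```

From the starting end, 100 forward commands produce 47 full increments plus one partial increment that stops exactly at 11.8 in; every later command is rejected. Clamping, rather than rejecting a step that would overshoot, lets the rig reach the exact limit; rejecting the step instead would stop it at 11.75 in.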

Anna’s Status Report for Mar 29

- What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

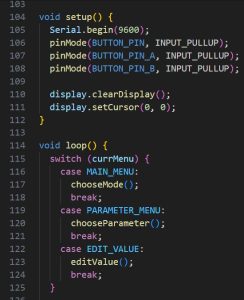

I wrote new code for my camera rig to test up-and-down motion, and I got both the up/down and left/right motions working. (I couldn’t upload videos, so I am providing screenshots instead.)

- Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I am mostly on track, though slightly behind. I still have to find a way to make the camera rig stand upright and write code to measure the rotation angle and travel distance.

- What deliverables do you hope to complete in the next week?

I hope to start creating my 2nd camera rig and work on the UI.

Team Status Report for Mar 22

- What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

A significant risk is that the two webcams may not work properly. These webcams are the core of our new multiple-viewpoint feature, and because this setup departs from the original design, a lot can go wrong. Currently, we are having trouble setting up the stepper motor that rotates the camera, since the camera rig will be mounted vertically instead of horizontally. We are managing this by using screws and other materials to secure the stepper motor to the mounting plate that moves up and down. As a contingency, we can purchase stepper motor mounts that hold the stepper motors perpendicularly in place.

The most significant risk currently is that system integration fails. So far, everyone has worked on their tasks fairly separately (software for gesture recognition/eye tracking, software for the AR overlay, and hardware for the camera rig + UI). Everyone has made significant progress and is close to testing their individual parts. However, little testing or design work has gone into how these subprojects will interface; we will address this in the coming weeks. We have also set aside time in the schedule for integration, which gives us ample time to make sure everything works.

Another risk is performance: the software’s compute requirements are high, and the Jetson may not be able to handle them. We noted this in our last team status report and are currently working on it.

- Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

Yes, the stepper motor had to be positioned facing upward instead of sideways so that the camera can rotate horizontally while moving up and down. This change was necessary to implement our new feature (multiple viewpoints) for users who want to view their face from different angles. The change risks failing, since there is no efficient way to secure the stepper motor in place, and given the camera setup and structure, the camera might have difficulty rotating. It requires extra effort to make the new modifications work and may incur additional costs (though Anna is trying to work around this). If the stepper motors can’t be secured with screws and other materials, we will purchase stepper motor mounts.

- Provide an updated schedule if changes have occurred.

No major changes have occurred yet.

Anna’s Status Report for Mar 22

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

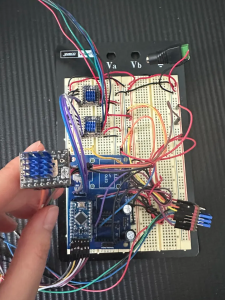

This week, I assembled the whole camera rig and identified the parts I would need to officially start testing my code. Currently, I am modifying the camera design from the original one, since ours will be placed vertically instead of horizontally. If I followed the original design, the camera wouldn’t be able to rotate horizontally, so I need to make sure that the stepper motor is positioned perpendicularly to the camera rig. I also identified that I need a battery connector to connect the battery to the PCB.

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I am a little behind given that I was supposed to start testing my code this week. This week, I will purchase the battery connector and start testing my code on the stepper motors even if I don’t get the cameras in position. I will focus on assembling the camera rig itself and testing the code separately for functionality.

What deliverables do you hope to complete in the next week?

I hope to test my code and fix any bugs I find, as well as fully build the camera rig.

Anna’s Status Report for Mar 15

- What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

I got the UI set up and ready so that I can start working on it. However, I encountered a problem where ImGui is not showing up. This didn’t happen before, so I will have to debug it with Steven.

I also assembled my PCB so that I can use it to test the camera rig.

I also got all the parts for the camera rig so that I can start to assemble the camera rig and connect the stepper motors on the camera rig to the PCB.

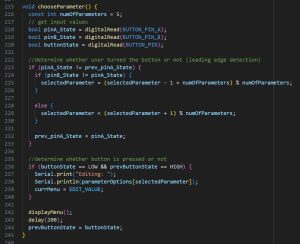

I also finished writing my Arduino code. I will upload it to the Arduino and have it hooked up with the PCB and stepper motors to test if my code works.

- Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I am on schedule. This week, I will finish assembling the camera rig now that I have all the parts, and I will test whether my Arduino code works. I will also investigate why ImGui isn’t showing up even though the project builds and runs successfully.

- What deliverables do you hope to complete in the next week?

I hope to assemble the camera rig and make progress on debugging my code by testing it. If possible, I hope to start working on the UI.