What did you personally accomplish this week on the project?

This week, I implemented a pipeline that generates a 3D face model from RGB and depth images. For each frame, the input is an RGB image and a depth image. I use dlib to detect facial landmarks in the RGB image and project them onto the depth image. I then convert the corresponding depth pixels to 3D world coordinates to extract the 3D face landmarks.
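The landmark-to-3D step can be sketched with the standard pinhole back-projection X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy. This is a minimal sketch, not the project's actual code, and it rests on several assumptions:

- The depth image is already aligned to the RGB frame.
- Raw depth is stored in millimeters (the hypothetical `depth_scale=0.001`).
- The intrinsics `fx`, `fy`, `cx`, `cy` come from the camera's calibration.
- Landmarks arrive as (u, v) pixel pairs, e.g. `[(p.x, p.y) for p in shape.parts()]` from a dlib shape predictor.

The names `Intrinsics` and `landmarks_to_3d` are hypothetical. The resulting points are in the camera's coordinate frame.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole camera intrinsics (hypothetical container; values come from calibration)."""
    fx: float
    fy: float
    cx: float
    cy: float


def deproject_pixel(u, v, z, k):
    """Back-project pixel (u, v) with depth z (meters) to a 3D camera-space point."""
    x = (u - k.cx) * z / k.fx
    y = (v - k.cy) * z / k.fy
    return (x, y, z)


def landmarks_to_3d(landmarks, depth, k, depth_scale=0.001):
    """Convert 2D landmarks to 3D points.

    landmarks:   list of (u, v) pixel coordinates, e.g. from a dlib shape predictor.
    depth:       2D list of raw depth values, assumed aligned to the RGB frame.
    depth_scale: raw-unit-to-meter factor (0.001 assumes millimeter depth).
    Returns one (x, y, z) tuple per landmark, or None where depth is missing.
    """
    h, w = len(depth), len(depth[0])
    points = []
    for u, v in landmarks:
        col, row = int(round(u)), int(round(v))
        # Landmarks that fall outside the depth image get no 3D point.
        if not (0 <= row < h and 0 <= col < w):
            points.append(None)
            continue
        raw = depth[row][col]
        # A raw value of 0 typically means the sensor measured no depth here.
        if raw <= 0:
            points.append(None)
            continue
        points.append(deproject_pixel(u, v, raw * depth_scale, k))
    return points
```

Looking up one depth pixel per landmark is cheap, so the per-frame cost of this step scales with the number of landmarks rather than with the image size.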

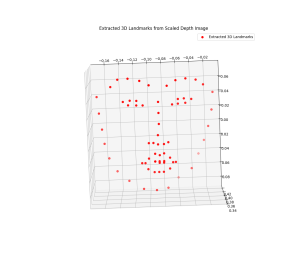

In addition, I can transform the entire depth image into a full point cloud (this takes somewhat longer to process). I can then combine it with the 3D face landmarks to form a smooth surface for the 3D face model, in case more landmarks need to be mapped.
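Converting the whole depth image applies the same back-projection to every valid pixel instead of a handful of landmarks. This is why it is slower: the work grows with image width times height. Below is a minimal sketch under the same assumptions as before (aligned depth, millimeter units, calibrated intrinsics). The function name and the `stride` parameter are hypothetical; `stride` skips pixels to trade density for speed. The smoothing and surface-construction step is not shown.

```python
def depth_to_point_cloud(depth, fx, fy, cx, cy, depth_scale=0.001, stride=1):
    """Back-project every valid depth pixel into a 3D camera-space point.

    depth:       2D list of raw depth values (0 = no measurement).
    depth_scale: raw-unit-to-meter factor (0.001 assumes millimeter depth).
    stride:      sample every `stride`-th row and column to reduce cost.
    """
    points = []
    for row in range(0, len(depth), stride):
        for col in range(0, len(depth[row]), stride):
            raw = depth[row][col]
            if raw <= 0:  # skip pixels without a depth reading
                continue
            z = raw * depth_scale
            points.append(((col - cx) * z / fx, (row - cy) * z / fy, z))
    return points
```

The 3D landmarks live in the same camera frame as this cloud, so they can be overlaid on it or appended to it directly before any surface fitting.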

The figure shows the reconstructed 3D face point cloud together with the detected landmarks in 3D.

In terms of compute, extracting only a constant number of landmarks (14 in this case) takes around 1-2 seconds. This can be accelerated by using lower-resolution RGB and depth images. Once the rendering section is implemented, I plan to study how much accuracy is lost at lower resolutions. I have also been experimenting with SIMD to accelerate this computation, and I will continue that work next week.
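One way to use lower-resolution inputs without breaking the 3D geometry is to keep the coordinates and intrinsics consistent across resolutions. The sketch below assumes simple decimation, where every `factor`-th row and column is kept, so new pixel i corresponds to old pixel i * factor. Decimation avoids averaging depth across face/background edges. A resize with area averaging would add a half-pixel offset that this sketch ignores.

Under decimation, the intrinsics scale as fx/factor, fy/factor, cx/factor, cy/factor. Landmarks found on a decimated RGB image can also be mapped back to full resolution to read the full-resolution depth. All function names here are hypothetical.

```python
def decimate(image, factor):
    """Keep every `factor`-th row and column (no averaging).

    Works for a 2D depth map or an RGB image stored as rows of pixels.
    """
    return [row[::factor] for row in image[::factor]]


def scale_intrinsics(fx, fy, cx, cy, factor):
    """Intrinsics of a decimated image, where new pixel i is old pixel i * factor."""
    return fx / factor, fy / factor, cx / factor, cy / factor


def upscale_landmarks(landmarks, factor):
    """Map (u, v) landmarks found on a decimated image back to full-resolution pixels."""
    return [(u * factor, v * factor) for u, v in landmarks]
```

For example, a detector could run on `decimate(rgb, 2)`. Its landmarks would then either be upscaled to index the full-resolution depth, or used directly with `decimate(depth, 2)` and the scaled intrinsics. The second option is faster but coarser, which is the accuracy trade-off the planned study would measure.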

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I am on schedule, having completed the 3D modeling component on time. Before leaving for spring break, I also integrated my work with the Jetson and prepared for the next phase.

After returning from spring break, I will start working on wrapping a texture map onto the 3D face model using the captured landmarks.
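The texture-mapping work is still planned, but one common approach fits this pipeline. The 3D points were back-projected from pixels of an RGB frame aligned with the depth image. Each vertex's texture coordinate can therefore be found by projecting it back into that RGB frame and normalizing by the image size.

This is a minimal sketch of that idea, not the project's chosen method. It assumes:

- Vertices are in the RGB camera's frame.
- Pixel centers lie at integer coordinates.
- The renderer expects OpenGL-style UVs with the origin at the bottom-left (`flip_v=True`).

The function names are hypothetical.

```python
def project_point(p, fx, fy, cx, cy):
    """Project a 3D camera-space point to pixel coordinates (pinhole model)."""
    x, y, z = p
    return fx * x / z + cx, fy * y / z + cy


def compute_uvs(vertices, fx, fy, cx, cy, width, height, flip_v=True):
    """Texture coordinates in [0, 1] for each vertex, or None if behind the camera.

    Pixel u maps to texture coordinate (u + 0.5) / width, so pixel centers
    line up with texel centers of a width x height texture.
    """
    uvs = []
    for p in vertices:
        if p[2] <= 0:  # cannot project points at or behind the camera
            uvs.append(None)
            continue
        u, v = project_point(p, fx, fy, cx, cy)
        s = (u + 0.5) / width
        t = (v + 0.5) / height
        if flip_v:  # image rows grow downward; OpenGL-style t grows upward
            t = 1.0 - t
        uvs.append((s, t))
    return uvs
```

The RGB frame itself then serves as the texture image. Vertices that land outside [0, 1] were not visible in that frame and would need another view or a fallback color.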

What deliverables do you hope to complete in the next week?

Continue testing and refining the pipeline for better visualization and performance.

By the next milestone, I aim to have a functional prototype with texture mapping implemented.