What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

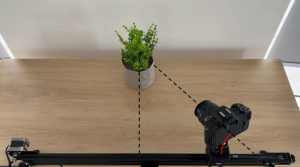

One of the most significant risks is camera jitter. Jitter would ruin the user experience, because users would not be able to see their side profiles and other parts of their face clearly. To mitigate this, we are implementing a PID control loop to keep motor movement smooth and reduce vibration. We are also testing different mounting and damping mechanisms to isolate vibrations from the motor assembly.
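The report does not specify the controller structure, gains, or whether the loop commands position or speed, so the following is only a minimal sketch under stated assumptions. It assumes a discrete PID that converts the camera's angle error into a commanded pan speed, with hypothetical gains, a hypothetical 60 deg/s speed limit, and a toy plant in which the motor follows the commanded speed exactly. Two common smoothing choices are shown: the derivative is taken on the measurement rather than the error (so a sudden change in target angle does not cause a speed spike), and simple clamping anti-windup stops the integral from growing while the output is saturated. It is written in Python for readability; on the real rig the loop would presumably live in the Arduino firmware.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PID:
    """Discrete PID controller for a camera pan motor (illustrative sketch)."""
    kp: float
    ki: float
    kd: float
    output_limit: float  # max commanded speed in deg/s (assumed value)
    _integral: float = field(default=0.0, init=False)
    _prev_measurement: Optional[float] = field(default=None, init=False)

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        error = setpoint - measurement
        # Derivative on measurement (not on error) avoids a spike when the
        # target angle jumps, e.g. when a user turns to show a side profile.
        if self._prev_measurement is None:
            derivative = 0.0
        else:
            derivative = -(measurement - self._prev_measurement) / dt
        self._prev_measurement = measurement

        candidate_integral = self._integral + error * dt
        unclamped = self.kp * error + self.ki * candidate_integral + self.kd * derivative
        # Simple clamping anti-windup: keep the new integral only when unsaturated.
        if abs(unclamped) <= self.output_limit:
            self._integral = candidate_integral
        return max(-self.output_limit, min(self.output_limit, unclamped))


def simulate(target_deg: float = 30.0, steps: int = 1000, dt: float = 0.01) -> List[float]:
    """Toy plant (assumption): the motor tracks the commanded speed exactly."""
    pid = PID(kp=4.0, ki=3.5, kd=0.1, output_limit=60.0)  # hypothetical gains
    angle = 0.0
    history = []
    for _ in range(steps):
        speed = pid.update(target_deg, angle, dt)
        angle += speed * dt  # integrate speed into camera angle
        history.append(angle)
    return history


if __name__ == "__main__":
    trace = simulate()
    print(f"final angle after {len(trace)} steps: {trace[-1]:.3f} deg")
```

In this toy model the camera settles at the 30° target within the simulated 10 seconds, and the speed limit bounds how far the camera can move per step. The integral term can still cause a small overshoot, which is one reason the gains would need to be tuned on the actual mount rather than taken from this sketch.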

Contingency plans include having a backup stepper motor with finer resolution and smoother torque, as well as a manual override mode for emergency situations.

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc.)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

The design of the camera control system has changed, and the new design is cheaper. We changed it because of the cost and complexity of the previous design. The new design requires fewer 3D-printed parts, which cuts our cost in half, and it will integrate well with the display. The change also simplifies assembly and reduces the overall weight of the system, improving portability.

The cost incurred is minimal, consisting mainly of redesigning and reprinting certain parts. To mitigate these costs, we are using readily available components and skipping parts we don't need to print (such as the stepper motor case).

Provide an updated schedule if changes have occurred.

We are behind schedule because we haven't received the materials and equipment yet. Once they arrive, we plan to catch up by allocating more time to assembly and testing. We've also added buffer periods for unforeseen delays and assigned team members specific tasks so that work can proceed in parallel.

A: Public Health, Safety, or Welfare Considerations (written by Shengxi)

Our system prioritizes user well-being by incorporating touch-free interaction, eliminating the need for physical contact and reducing the spread of germs, particularly in shared or public spaces. By maintaining proper eye-level alignment, the system helps minimize eye strain and fatigue, preventing neck discomfort caused by prolonged unnatural viewing angles. Additionally, real-time AR makeup previews contribute to psychological well-being by boosting user confidence and reducing anxiety related to cosmetic choices. The ergonomic design further enhances comfort by accommodating various heights and seating positions, ensuring safe, strain-free interactions for all users.

B: Social Factor Considerations (written by Steven)

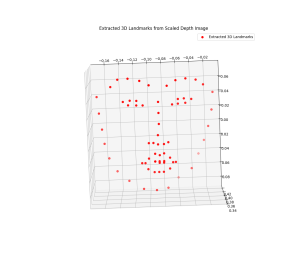

Because our system is a display with mirror-like capabilities, we aim to pay close attention to how it affects body image and self-perception. We plan to keep the perspective transforms accurate and the image filters reasonable, so that we don't unintentionally reinforce unrealistic beauty norms or contribute to negative self-perception. We will verify this through user testing and accuracy testing of our reconstruction algorithms. Also, one of the goals of this project is to keep the cost lower than competitors' (enforced by our limited budget of ~$600) so that lower-income communities have access to this technology.

C: Economic Factors (written by Anna)

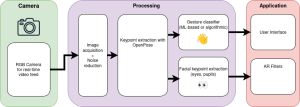

The UsAR mirror is a cost-efficient and scalable solution, since it costs no more than $600. Production costs fall into three areas: hardware, software, and maintenance/updates. On the hardware side, it uses affordable yet high-quality cameras such as the RealSense depth camera and webcams. The RealSense depth camera lets filters be properly aligned to a 3D reconstruction of the user's face, maximizing the experience while minimizing cost. The camera control system has an efficient, simple design that requires few materials and incurs little cost. The software adds no cost: it uses free, open-source software libraries such as OpenCV, Open3D, OpenGL, and OpenPose, and the Arduino code that controls the side-mounted webcams is also developed at no cost.

For distribution, the mirror is lightweight and easy to handle and install. Because it is built around a display of only 23.8 inches, it is easy to carry and use, as well as easy to package and ship. For consumption, we expect the UsAR mirror to be used heavily by retailers, who can save money on sample products and reduce the time customers spend trying on all kinds of glasses. Moreover, because customers can try on makeup and glasses efficiently, they are less likely to come back to return products, which makes shopping more convenient for customers and business more convenient for retailers. Customers increasingly want a more personalized and convenient way to shop, and the UsAR mirror addresses this demand.