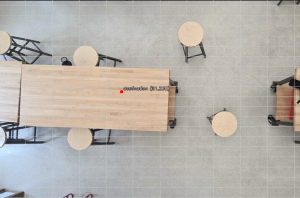

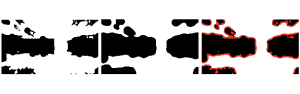

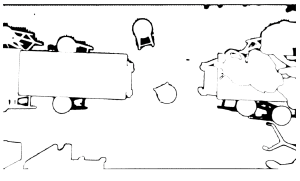

The most significant risk is that our final demonstration will take place in a new room. Because our system is room-specific, it must handle this last-minute change of environment. We are managing this risk by testing thoroughly in new environments and identifying ways to mitigate any problems we find. We have tested our segmentation and localization in multiple locations and made minor adjustments, so we are confident our system can adapt to the demo room.

No major changes have occurred.

Some final work remains: we are building the structure that will hold our overhead camera and UWB anchors, because the demo room will not have the same power outlets and mounting structures as the locations where we typically tested.

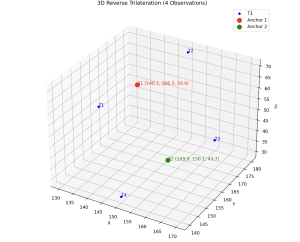

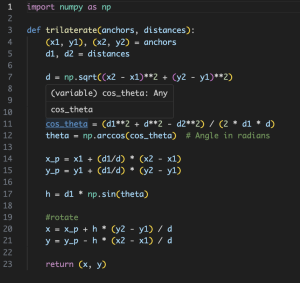

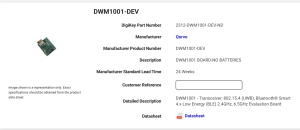

For testing, we conducted both unit tests and overall system tests. Unit tests included measuring the accuracy of the UWB localization (actual vs. predicted position) and the rate at which our segmentation model detects obstacles. At the system level, we measured overall navigation success rate as the percentage of navigation attempts that succeeded. As mentioned earlier, we tested in diverse environments to prepare for the new demo room.
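The metrics described above reduce to a few simple calculations. The sketch below is a hypothetical illustration, not our actual test harness: it assumes positions are 2D `(x, y)` coordinates in a common unit, that localization error is the Euclidean distance between actual and predicted positions, and that detection and navigation results are tallied as success counts. The function names `localization_errors`, `summarize_errors`, and `success_rate` are illustrative.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Hypothetical representation: a 2D position (x, y) in a shared unit (e.g. meters).
Point = Tuple[float, float]


def localization_errors(actual: Sequence[Point], predicted: Sequence[Point]) -> List[float]:
    """Euclidean distance between each actual and UWB-predicted position."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    return [math.dist(a, p) for a, p in zip(actual, predicted)]


def summarize_errors(errors: Sequence[float]) -> Dict[str, float]:
    """Mean, root-mean-square, and maximum localization error."""
    if not errors:
        raise ValueError("errors must be non-empty")
    n = len(errors)
    return {
        "mean": sum(errors) / n,
        "rmse": math.sqrt(sum(e * e for e in errors) / n),
        "max": max(errors),
    }


def success_rate(successes: int, trials: int) -> float:
    """Percentage of trials that succeeded.

    Usable both for obstacle detection (obstacles detected / obstacles presented)
    and for navigation (successful runs / attempted runs).
    """
    if trials <= 0:
        raise ValueError("trials must be positive")
    if not 0 <= successes <= trials:
        raise ValueError("successes must be between 0 and trials")
    return 100.0 * successes / trials
```

Reporting RMSE alongside the mean is one common choice because it weights large localization misses more heavily, which matters more for navigation than many small errors.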