Our most significant risk is that many of the parts we want to use are unfamiliar to us and have few examples online. For example, the UWB boards seem tricky because we will have to work through the documentation on our own. We tried to mitigate this by selecting parts that appear to have more documentation and similar examples, but some learning and trial-and-error will be inevitable. We also selected parts that are more general purpose, which gives us fallback options. For example, if we can’t find an effective solution using the Bluetooth feature of the UWB board, we can still wire the board directly to the belt’s Raspberry Pi, which should have Bluetooth capabilities of its own.

One change we made was to add a magnetometer. This was necessary because we need the user’s rotational orientation to navigate them properly. The cost is the additional hardware component plus the time needed to learn its interface, but we plan on keeping this portion of the design simple. We also introduced a Raspberry Pi on the wearable belt. This was necessary because we realized that much of the communication and processing originates from the wearable, but we plan on selecting a lightweight and efficient Pi to minimize weight and power consumption.
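As a rough illustration of why the magnetometer matters, the sketch below shows one common way to turn horizontal magnetometer readings into a heading, and then into the turn a user would need to face a target direction. This is only a sketch under assumptions and not our final implementation: the function names `heading_degrees` and `turn_angle` are hypothetical, the axis convention depends on how the sensor ends up mounted on the belt, and it assumes the sensor is held level and already calibrated (no tilt compensation or hard/soft-iron correction).

```python
import math


def heading_degrees(mx: float, my: float) -> float:
    """Angle of the horizontal magnetic field vector, in degrees within [0, 360).

    mx, my are hypothetical horizontal magnetometer components. Which axis
    maps to "forward" depends on sensor mounting, so treat this as a convention.
    """
    return math.degrees(math.atan2(my, mx)) % 360.0


def turn_angle(current: float, target: float) -> float:
    """Smallest signed rotation from current to target heading, in [-180, 180)."""
    return (target - current + 180.0) % 360.0 - 180.0


if __name__ == "__main__":
    current = heading_degrees(1.0, 1.0)
    print(f"current heading: {current:.1f} degrees")
    print(f"turn needed to face 90 degrees: {turn_angle(current, 90.0):.1f}")
```

The wrap-around handling in `turn_angle` is the part that is easy to get wrong: going from 350 degrees to 10 degrees should be a 20 degree turn, not a 340 degree turn in the other direction.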

Otherwise, our schedule remains mostly the same.

Kevin did part A. Charles did part B. Talay did part C.

Part A

Our project’s initial motivation was to improve accessibility, which I believe strongly addresses safety and welfare.

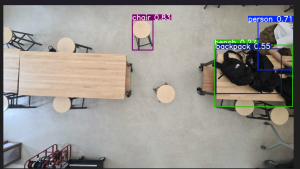

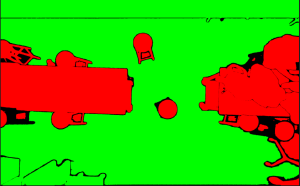

Providing navigation for the visually impaired can add a level of physical safety by guiding users around obstacles to avoid collisions and hazards. Traditional tools, such as a white cane, have limitations. For example, white canes are not reliable for detecting obstacles that aren’t at ground level, and may not provide the granularity needed to navigate an unfamiliar environment. Using a stereo camera with an overhead view, our device should be able to detect the vast majority of obstacles in the indoor space and safely navigate the user around them without the user having to approach them.

Furthermore, public welfare is addressed, as accessibility enables users to navigate and enjoy public spaces. We anticipate this project being applicable in settings such as office spaces and schools. Take Hamerschlag Hall as an example: a visually impaired person visiting the school, or perhaps frequenting a work space there, would struggle to navigate to their destination. With lab chairs frequently disorganized and hallways leading from classrooms to potentially hazardous staircases, this building would be difficult to move around without external guidance. This goes hand in hand with public health as well: providing independence and the confidence to explore unfamiliar environments would improve the quality of life for our target users.

Part B

For blind individuals, our product will provide more freedom. Right now, many public spaces aren’t designed with their needs in mind, which can make everyday activities stressful or even isolating. Our project aims to make spaces like airports, malls, and office buildings more accessible and welcoming, so that blind individuals can navigate these places on their own terms without always needing to rely on others for help. This independence opens up opportunities for them to participate more fully in social events, explore new places, or simply move through their daily routines with less stress. This could have a large social impact for the visually impaired by allowing them to engage more fully in social spaces.

Part C

BlindAssist will help enhance the independence and mobility of blind people in indoor spaces such as offices, universities, or hospitals. This reduces the need for external assistance in public institutions such as universities and public offices, which could help reduce the cost of hiring a caregiver or making expensive adaptations to buildings. BlindAssist offers an adaptable, scalable system that many institutions could rapidly adopt. With a one-time setup cost, the environment could become “blind-friendly” and accommodate many blind people at once. With economies of scale, the technology to support this infrastructure becomes cheaper to produce, allowing more places to adopt it and further reducing accessibility costs. A possible concern is the reduction in jobs for caregivers. However, these caregivers could spend their time caring for other people who do not yet have the technical infrastructure to support them autonomously.