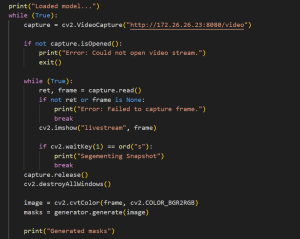

This week I spent more time on the obstacle detection pipeline with Talay. Talay got a proof of concept (PoC) working in which his phone communicates back with our laptops, so we tried adding his phone to the pipeline. To do this, we captured a single frame from his phone camera's live feed and ran our obstacle detection pipeline on that image. I didn't take a picture of the process or what it looks like, but this snippet of code should give a good idea of what is being done.

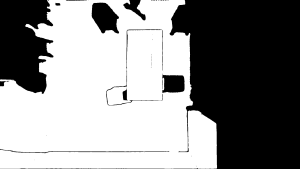

Once this was working, we realized that some of the images coming out of the obstacle detection looked off. This is an example of a problematic result:

White in this picture marks floor, while black marks obstacles. You can clearly see that the table and the chair are mislabeled as floor. We figured out that this was just a processing mistake, and Talay's post has the corrected processing. To track the issue down, however, we had to step back to a stage of the pipeline before any floor/obstacle labelling, so we could see exactly what the SAM model was producing.

We can see here that the SAM model segments everything in the room correctly, so the problem was in our labelling step; it ultimately came down to a bug in the processing code.
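As a rough illustration of the two stages discussed above (raw SAM segments versus floor/obstacle labels), here is a minimal Python/NumPy sketch. It is not our actual processing code. It assumes the masks arrive in the list-of-dicts format returned by `segment_anything`'s `SamAutomaticMaskGenerator.generate()`, where each dict carries a boolean `"segmentation"` array. The helper names `colorize_segments` and `label_floor`, and the "segment touching the bottom of the frame is the floor" heuristic, are hypothetical.

```python
import numpy as np

# Assumption: each mask is a dict with a boolean "segmentation" array of shape
# (H, W), like the output of segment_anything's SamAutomaticMaskGenerator.


def colorize_segments(masks, height, width, seed=0):
    """Debug view (hypothetical helper): paint every SAM segment a random color,
    with no floor/obstacle labelling, so the raw segmentation can be inspected."""
    rng = np.random.default_rng(seed)
    canvas = np.zeros((height, width, 3), dtype=np.uint8)
    # Paint larger segments first so small ones (e.g. chair legs) stay visible on top.
    for m in sorted(masks, key=lambda s: int(s["segmentation"].sum()), reverse=True):
        color = rng.integers(0, 256, size=3).astype(np.uint8)
        canvas[m["segmentation"]] = color
    return canvas


def label_floor(masks, height, width, strip_frac=0.1):
    """Labelling step (hypothetical helper): 255 (white) = floor, 0 (black) = obstacle.

    Assumed heuristic: the single segment overlapping most with the bottom
    strip of the frame is the floor; every other pixel is an obstacle.
    """
    labels = np.zeros((height, width), dtype=np.uint8)  # default: obstacle
    if not masks:
        return labels
    strip_rows = max(1, int(round(height * strip_frac)))
    strip_start = height - strip_rows
    # Number of pixels of each segment that fall inside the bottom strip.
    overlaps = [int(m["segmentation"][strip_start:, :].sum()) for m in masks]
    best = int(np.argmax(overlaps))
    if overlaps[best] > 0:
        labels[masks[best]["segmentation"]] = 255
    return labels


if __name__ == "__main__":
    H, W = 10, 10
    floor = np.zeros((H, W), dtype=bool)
    floor[6:, :] = True          # bottom four rows: floor
    table = np.zeros((H, W), dtype=bool)
    table[2:5, 2:5] = True       # block in the middle: table
    masks = [{"segmentation": table}, {"segmentation": floor}]

    print(colorize_segments(masks, H, W).shape)  # (10, 10, 3)
    labels = label_floor(masks, H, W)
    print(labels[9, 0], labels[3, 3])            # 255 0
```

Keeping the debug view separate from the labelling step is what makes it possible to tell a segmentation problem apart from a labelling bug: if the colored segments look right but the black-and-white map does not, the fault is in the labelling code rather than in SAM.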

Next week, I am going to talk with the rest of my group about a very important open question in the project: how the user will select destinations. There are a variety of ways to do this, most of which require at least some sort of UI. We also need to prepare for the demo coming up next week.