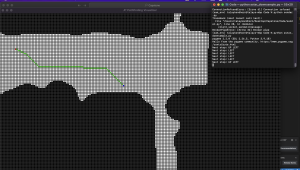

This week, I continued working on the path-finding algorithm and tuning it for our use case. I switched from D* to A* so that the planner does not store any state from one iteration to the next. Once the planner is integrated with the UWB sensors, we expect the user’s reported position to occasionally jump, or "warp", by several cells between readings. D* reuses results from earlier iterations and recalculates only certain parts of the path, which relies on the user moving between adjacent cells. Because the user might warp a few cells, A* is more suitable: it calculates the path from scratch on every iteration. I simulated the user’s position on the grid with mouse clicks and saw minimal latency, so we are going to proceed with A*.
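To illustrate what planning from scratch on every iteration looks like, here is a minimal A* sketch on an occupancy grid. It is not our project code: the function name `astar`, the 8-connected moves, the octile-distance heuristic, and the convention that `1` marks an obstacle are all assumptions made for the example.

```python
import heapq
import math


def astar(grid, start, goal):
    """Plan from scratch on a 2D grid; grid[r][c] == 1 is an obstacle (assumed convention).

    Returns a list of (row, col) cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    # 8-connected moves so a step can be diagonal (e.g. "top left").
    # Note: this sketch does not forbid cutting across obstacle corners.
    moves = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]

    def heuristic(cell):
        # Octile distance: admissible when diagonal steps cost sqrt(2).
        dr, dc = abs(cell[0] - goal[0]), abs(cell[1] - goal[1])
        return max(dr, dc) + (math.sqrt(2) - 1) * min(dr, dc)

    open_heap = [(heuristic(start), 0.0, start)]
    best_cost = {start: 0.0}
    parent = {}

    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = [cell]
            while cell in parent:
                cell = parent[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry, a cheaper route was already found
        for dr, dc in moves:
            nxt = (cell[0] + dr, cell[1] + dc)
            if not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols):
                continue
            if grid[nxt[0]][nxt[1]] == 1:
                continue
            new_cost = cost + math.hypot(dr, dc)
            if new_cost < best_cost.get(nxt, math.inf):
                best_cost[nxt] = new_cost
                parent[nxt] = cell
                heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None
```

Because nothing is kept between calls, a warped position is handled by calling `astar` again with the new start cell; the second element of the returned path is the next step for the user.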

Next, I tested the segmentation model on multiple rooms in Hammerschlag Hall. The segmentation itself was quite robust, but our post-processing, which further splits the segmented image into obstacles and free space, was not. I revisited this logic so that it now selects the largest segmented region as free space and treats everything else as obstacles. We now have a fine-grained occupancy matrix that needs to be downsampled.
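The "largest region is free space" rule can be sketched as follows. This assumes the segmentation output is available as a 2D grid of integer segment IDs, one per pixel; that format and the name `occupancy_from_segments` are hypothetical and chosen only for illustration.

```python
from collections import Counter


def occupancy_from_segments(segment_ids):
    """Mark the most common segment ID as free space (0) and the rest as obstacles (1).

    segment_ids is assumed to be a 2D list of integer labels, one per pixel.
    """
    counts = Counter(sid for row in segment_ids for sid in row)
    free_id, _ = counts.most_common(1)[0]  # segment covering the most pixels
    return [[0 if sid == free_id else 1 for sid in row] for row in segment_ids]
```

Counting pixels per ID treats each segment as one region; if the model can assign the same ID to disconnected areas, a connected-component pass would be needed first.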

I worked on the downsampling algorithm with Kevin. We decided to downsample by splitting the matrix into chunks of a fixed size (n x n) and labeling each chunk as an obstacle or free space (a 1 x 1 cell on the downsampled grid) depending on how many cells in the chunk were labeled as obstacles. We wanted a conservative estimate of obstacles, with a larger boundary around each one, so that the blind user wouldn’t walk into an obstacle. We did this by choosing a low voting threshold within each chunk. For example, if 20% or more of the cells within a chunk are labeled as obstacles, the downsampled cell is labeled as an obstacle. After downsampling the original occupancy matrix generated by the segmentation model, we saw that the resolution was still good enough and that we got a conservative bound on obstacles.
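The chunk-voting scheme described above can be sketched as below. The function name `downsample`, the `1` = obstacle convention, and the handling of partial chunks at the edges (they vote over the cells they actually contain) are assumptions for this example; only the 20% threshold comes from the description above.

```python
def downsample(occupancy, n, threshold=0.2):
    """Collapse each n x n chunk into one cell by obstacle vote.

    occupancy is a 2D list where 1 = obstacle and 0 = free space (assumed convention).
    A chunk becomes an obstacle when at least `threshold` of its cells are obstacles.
    """
    rows, cols = len(occupancy), len(occupancy[0])
    coarse = []
    for r0 in range(0, rows, n):
        coarse_row = []
        for c0 in range(0, cols, n):
            # Gather the chunk; edge chunks may be smaller than n x n.
            chunk = [
                occupancy[r][c]
                for r in range(r0, min(r0 + n, rows))
                for c in range(c0, min(c0 + n, cols))
            ]
            obstacle_fraction = sum(chunk) / len(chunk)
            coarse_row.append(1 if obstacle_fraction >= threshold else 0)
        coarse.append(coarse_row)
    return coarse
```

A low threshold makes a chunk with only a few obstacle cells count as an obstacle, which is what grows the boundary around each obstacle; raising the threshold toward 0.5 turns this into a plain majority vote.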

This is the livestream capture from the phone camera mounted up top.

This is the segmented image.

Here, we process the segmented image to label the black parts (ground) as free space and the white parts as obstacles.

Here is the A* path-finding algorithm running on the downsampled occupancy grid. The red cell is the target and the blue cell is the user. The program outputs that the next step is to the top left.

I believe that our progress is on schedule. Next week, I hope to work with the team to create a user interface where the user can select a destination. The user should be able to preconfigure the destinations they would like to save in our program so that they can request navigation to any of them with a button press. I would also like to run the entire software stack on the Jetson to see whether it can process everything with reasonable latency.