Progress This Week

Our team made meaningful progress across hardware, cloud integration, and software components in preparation for the final project deadline.

- Computer Vision & Cloud Integration: The YOLOv5 model has been finalized and benchmarked for accuracy and inference speed on the Raspberry Pi, and it is deployed through the cloud pipeline.

-

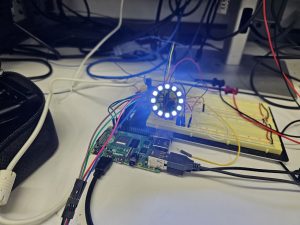

Hardware & Imaging: The motorized camera slider with stepper control and ring light is operational and is linked with the camera system for synchronized scanning.

- Mobile Application & Recommendation System: The recipe recommendation system is functional and integrated into the app’s UI. It uses a query-based system to return the top recommendations.

Current Status - Our project is on schedule, with all major components nearing full integration. Our efforts are now focused on fine-tuning the interaction between components in preparation for the final demo.

Goals for Next Week

- Finalize camera and cloud transmission pipeline

- Complete backend-mobile app communication for fridge scans

- End-to-end testing across modules

- Complete final deliverables