1. Overview

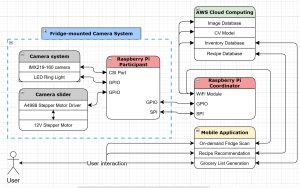

Our project remains on track, with significant progress across hardware, computer vision (CV), and the mobile application. This week, we focused on expanding the dataset, optimizing our model, finalizing the design report, and improving the mobile app’s UI and backend integration. Some tasks, such as the camera data transmission pipeline, are still in progress. Next week, we will focus on fine-tuning our model, optimizing inference, and implementing key hardware and software components to seamlessly integrate Fridge Genie’s features.

2. Key Achievements

Hardware and Embedded Systems

- Formally documented use cases, requirements and numerical specifications for our camera system

- Derived minimum field-of-view calculations to ensure full fridge coverage
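
As an illustration of the field-of-view derivation, the minimal sketch below computes the smallest angle a centered camera needs in order to span a given extent at a given distance, using the pinhole relation FOV = 2 * atan(extent / (2 * distance)). The function name and the shelf dimensions are hypothetical placeholders, not our measured fridge values.

```python
import math


def min_fov_degrees(extent_m: float, distance_m: float) -> float:
    """Smallest field of view (degrees) that spans `extent_m` when the
    camera is `distance_m` away and centered on the target."""
    if extent_m <= 0 or distance_m <= 0:
        raise ValueError("extent and distance must be positive")
    return math.degrees(2 * math.atan(extent_m / (2 * distance_m)))


# Hypothetical shelf (assumption): 0.60 m wide, 0.40 m tall,
# with the camera mounted 0.30 m from the shelf plane.
h_fov = min_fov_degrees(0.60, 0.30)
v_fov = min_fov_degrees(0.40, 0.30)
print(f"horizontal: {h_fov:.1f} deg, vertical: {v_fov:.1f} deg")
```

With these placeholder numbers, the horizontal requirement works out to 90 degrees, since the shelf half-width equals the mounting distance. Moving the camera farther back lowers the required angle, which is the trade-off a minimum field-of-view calculation captures.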

Computer Vision

- Collected and integrated new annotated fridge datasets to improve model performance

- Applied data augmentation techniques to improve model robustness

- Researched and analyzed different YOLOv5 models to determine which best meets our requirements
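
To make the augmentation step concrete, here is a minimal sketch of one common technique, a horizontal flip, applied to an image and its bounding boxes. It assumes labels in the YOLO text format (class, x_center, y_center, width, height, all normalized to [0, 1]). The specific augmentations we applied are not listed in this report, so the flip and the helper names below are illustrative assumptions only.

```python
from typing import List, Tuple

# (class_id, x_center, y_center, width, height), normalized to [0, 1]
Box = Tuple[int, float, float, float, float]


def hflip_image(rows: List[List[int]]) -> List[List[int]]:
    """Mirror an image, stored as rows of pixel values, left to right."""
    return [list(reversed(row)) for row in rows]


def hflip_labels(boxes: List[Box]) -> List[Box]:
    """Mirror YOLO-format boxes: only the x center changes."""
    return [(cls, 1.0 - x, y, w, h) for cls, x, y, w, h in boxes]


# Toy 2x3 "image" and a single hypothetical box in its left half.
image = [[1, 2, 3],
         [4, 5, 6]]
labels = [(0, 0.25, 0.5, 0.2, 0.4)]

flipped_image = hflip_image(image)
flipped_labels = hflip_labels(labels)
```

The point of the sketch is that the labels must be transformed together with the pixels. A flipped image paired with unflipped boxes would teach the model incorrect object locations.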

Mobile App Development

- Improved navigation and layout for smoother user experience

- Cleaned up existing codebase and resolved some minor bugs for enhanced stability

- Explored libraries and APIs for integrating computer vision into the mobile app

3. Next Steps

Hardware and Embedded Systems

- Complete data transmission pipeline between camera and Raspberry Pi

- Begin constructing the motorized slider for improved scanning, if the hardware arrives

Computer Vision

- Train and test YOLOv5x model with hyperparameter tuning to reach >90% detection accuracy

- Explore model quantization and optimizations for Raspberry Pi to reduce inference time

- Finalize model comparisons and select optimal YOLOv5 model
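
For context on the quantization item, the sketch below shows the basic idea behind int8 weight quantization: floating-point weights are mapped to 8-bit integers through a shared scale factor, trading a small rounding error for a smaller, faster model. It assumes a simple symmetric, per-tensor scheme and hypothetical function names. It is not necessarily the scheme or toolchain we will adopt.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map floats to ints in [-127, 127]."""
    if not weights:
        return [], 1.0
    max_abs = max(abs(w) for w in weights)
    # An all-zero tensor would give a zero scale, so fall back to 1.0.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the int8 values."""
    return [q * scale for q in quantized]


# Hypothetical weights, for illustration only.
weights = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

The reconstruction error per weight is bounded by half the scale factor, so tensors with a few large outlier weights lose more precision. That is one reason accuracy should be re-measured after quantizing.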

Mobile App Development

- Continue backend optimizations for inventory management and data synchronization

- Begin integrating CV into the app

- Optimize backend data storage and retrieval efficiency

4. Outlook

Our team is making good progress, with advancements in CV model training, hardware design and mobile app development. Our key challenges will include minimizing inference latency and finalizing hardware integration. For the next week, we will focus on fine-tuning our ML model, optimizing our inference pipeline and improving backend connectivity for data transfer between the mobile app and our model.

Part A: Global Factors (Will)

Our project addresses the global problem of food waste, which is estimated to cost the global economy $1 trillion per year. By implementing automated inventory tracking and expiration date alerts, our solution helps households reduce waste, which leads to greater financial savings and food security. This benefit extends beyond developed nations, as the system can be scaled for deployment in less-developed regions where food preservation is critical. Furthermore, the project provides global accessibility through its mobile-first design, which enables users in different countries to easily integrate it into their grocery management habits. Future iterations of our project could support multiple languages and localization to adapt to different markets. Finally, our project directly supports environmental sustainability by reducing food waste, which accounts for around 10% of global greenhouse gas emissions.

Part B: Cultural Factors (Steven)

When developing our detection model and recipe recommendations, we took into account regional dietary habits and cultural food preferences. Different cultures have different staple foods, packaging, and consumption patterns, so the model must recognize diverse food types. For instance, a refrigerator in an East Asian household might contain more fermented and soy-based foods such as kimchi and tofu, while a Western household might have more dairy products and processed foods.

While our initial product will focus on American groceries and dietary habits, future iterations will aim to support culturally relevant recipes. Users will be able to receive cooking suggestions that align with their dietary traditions and preferences. The user interface will also be designed to accommodate individuals who are less technologically literate, making the app accessible across different demographics.

Part C: Environmental Considerations (Jun Wei)

Our project directly supports environmental sustainability by reducing food waste, which accounts for around 10% of global greenhouse gas emissions. By providing users with real-time grocery tracking and expiration notifications, we help reduce unnecessary grocery purchases and food disposal.

Furthermore, for our hardware, we selected low-power devices such as the Raspberry Pi Zero to minimize the system’s carbon footprint. Unlike traditional high-energy smart fridges, we offer an energy-efficient, cost-effective alternative that extends the life of existing fridges instead of requiring consumers to purchase expensive IoT appliances.

In the long term, we could consider modifying our design so that it can be retrofitted to most fridges that consumers already own. This would make our solution more accessible and help reduce waste at a larger scale, in addition to sparing consumers from replacing their existing fridges (which would, in turn, add to greenhouse gas emissions). Working with industry stakeholders would also help expand the reach of our solution, benefiting not only individual consumers but also grocery stores, food banks, and restaurants.