This week, I continued refining the training pipeline for the object detection model to maximize our performance for the demo. I expanded our dataset with the Open Images database, filtering it down to the food-related classes relevant to our use case, which significantly increased the amount of training data. I am also looking to experiment with the YOLOv10 model to further increase accuracy.
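
As a rough illustration of the class filtering described above, here is a minimal sketch that keeps only the bounding boxes whose class is in a chosen food list. It assumes the Open Images box annotations are CSV files with `ImageID`, `LabelName`, `XMin`, `XMax`, `YMin`, and `YMax` columns, and that a headerless class-description CSV maps label IDs to names. The `FOOD_CLASSES` set and both function names are hypothetical and are not the actual list or code used in the project.

```python
import csv
import io

# Hypothetical subset of food classes; the real list used in the project is not given.
FOOD_CLASSES = {"Apple", "Banana", "Milk"}


def load_label_map(class_csv_text):
    """Map label IDs to human-readable class names (headerless 'id,name' rows)."""
    reader = csv.reader(io.StringIO(class_csv_text))
    return {row[0]: row[1] for row in reader if len(row) >= 2}


def filter_food_boxes(annotation_csv_text, label_map, wanted=FOOD_CLASSES):
    """Return (image_id, class_name, xmin, xmax, ymin, ymax) for wanted classes only."""
    reader = csv.DictReader(io.StringIO(annotation_csv_text))
    kept = []
    for row in reader:
        name = label_map.get(row["LabelName"])
        if name in wanted:
            kept.append((
                row["ImageID"],
                name,
                float(row["XMin"]),
                float(row["XMax"]),
                float(row["YMin"]),
                float(row["YMax"]),
            ))
    return kept
```

The kept rows can then be converted to whatever label format the training pipeline expects. Working with the CSV text directly keeps the sketch free of third-party dependencies.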

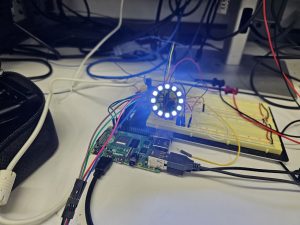

I am currently on track with our milestones. Preliminary training has been completed, and we are working to maximize our accuracy and finalize our model. I am also working on deploying our model onto the Raspberry Pi.

Next week, we aim to proceed with our demo, during which I plan to show our model at the best accuracy we have achieved. I will continue integrating our model with the tentative pipeline for fridge item detection, expanding our training dataset, and tuning our parameters to optimize accuracy.