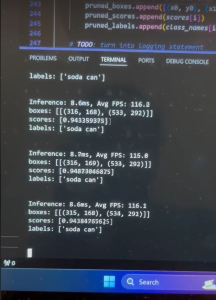

This week, I successfully generated bounding boxes using the optimized TensorRT (TRT) model. As seen in the screenshot below, inference runs at an average of 115 FPS (~8.7 ms per frame) on a live camera feed when jetson_clocks is enabled.

Because the camera supports a maximum of 118 FPS, we are bound by the camera's frame rate, so the ML subsystem should not cause any bottlenecks. The code to run a demo of this process can be found in trt_demo.py.

I also wrote send_bboxes.py (and related utility functions in the linked repo) and recv_bboxes.py (in the motor control git repo, which is currently private), and verified that these files communicate correctly between the Jetson Orin Nano and the RPi over Ethernet. They use the publish-subscribe (pub/sub) model: bounding boxes (bboxes), their confidence scores, and their labels are published by the Orin and then pulled by the RPi’s subscription node. Since a new set of bboxes can be detected every ~8.7 ms, I publish to the connection every ~10 ms. The RPi is then free to read from the connection to get the latest bboxes whenever it wants. A video of this working can be found in our team’s status report.
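To make the "publish every ~10 ms, read the latest whenever you want" pattern concrete, here is a minimal sketch. It is not the code in send_bboxes.py or recv_bboxes.py: the message layout, the `Detection` fields, the `"bottle"` label, and the in-memory `LatestValueChannel` (which stands in for the Ethernet link) are all assumptions made for illustration.

```python
import json
import time
from dataclasses import dataclass, asdict
from typing import Callable, List, Optional, Tuple


@dataclass
class Detection:
    bbox: List[float]  # assumed layout: [x1, y1, x2, y2] in pixels
    score: float       # confidence score for this box
    label: str         # class label (hypothetical names in this sketch)


def encode_message(detections: List[Detection], timestamp: float) -> bytes:
    """Serialize one batch of detections to UTF-8 JSON bytes."""
    payload = {"t": timestamp, "detections": [asdict(d) for d in detections]}
    return json.dumps(payload).encode("utf-8")


def decode_message(raw: bytes) -> Tuple[float, List[Detection]]:
    """Inverse of encode_message: returns (timestamp, detections)."""
    payload = json.loads(raw.decode("utf-8"))
    return payload["t"], [Detection(**d) for d in payload["detections"]]


class LatestValueChannel:
    """In-memory stand-in for the network link: keeps only the newest message."""

    def __init__(self) -> None:
        self._latest: Optional[bytes] = None

    def publish(self, raw: bytes) -> None:
        self._latest = raw  # older messages are simply overwritten

    def read_latest(self) -> Optional[bytes]:
        return self._latest


def publish_loop(channel: LatestValueChannel,
                 get_detections: Callable[[], List[Detection]],
                 period_s: float = 0.010,
                 iterations: int = 5) -> None:
    """Publish the current detections once per period (10 ms by default)."""
    next_deadline = time.monotonic()
    for _ in range(iterations):
        channel.publish(encode_message(get_detections(), time.time()))
        next_deadline += period_s
        # Sleep until the next deadline so timing error does not accumulate.
        time.sleep(max(0.0, next_deadline - time.monotonic()))


if __name__ == "__main__":
    channel = LatestValueChannel()
    sample = [Detection([10.0, 20.0, 110.0, 220.0], 0.91, "bottle")]
    publish_loop(channel, lambda: sample)
    print(decode_message(channel.read_latest()))
```

Keeping only the newest message means a slow reader never works through a backlog of stale boxes, which matches the "latest bbox whenever it wants" behavior described above.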

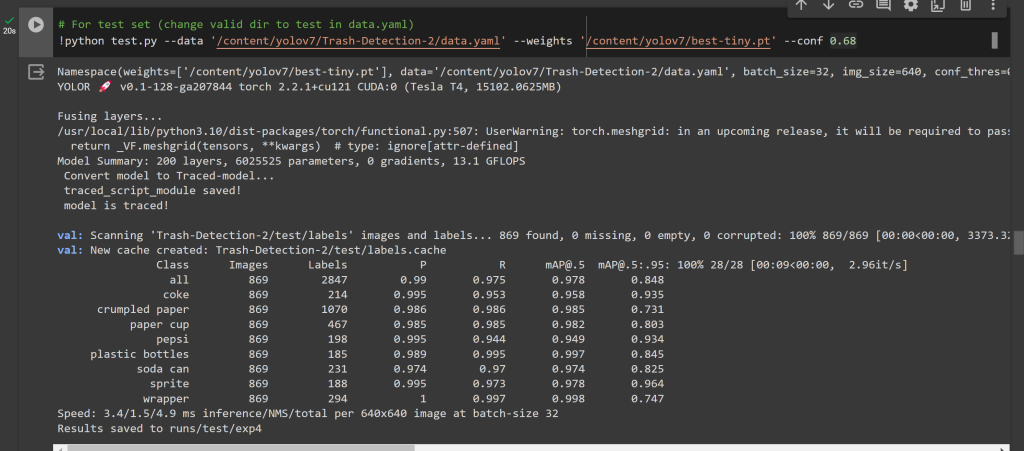

In terms of testing, we have a couple of user requirements and design requirements related to the ML subsystem. As per our original user requirements, the robot should be able to identify trash objects with at least 95% mean average precision (mAP) for YOLOv7 and 80% for YOLOv7-tiny. Originally, we were also targeting 100% recall. After running the YOLOv7-tiny model on our test dataset, which comprises roughly 10% of our overall dataset, we achieved 97.8% mAP and 97.5% recall over all classes. With numbers this high, it no longer made sense to use the full YOLOv7 model in our pipeline, and ~98% for both mAP and recall meant there was no longer a need to consider tradeoffs between the two. Empirically, the model also works best under consistent lighting conditions and against plain backdrops/floors. The comprehensive results of the test can be found below.

As per our design requirements, we originally wanted our inference pipeline to support a camera frame rate of at least 15 frames per second (FPS) and return bounding boxes around trash objects at a confidence threshold of 0.68. While testing, however, we noticed that lowering the confidence threshold to 0.5 resulted in the best detections, and this is the threshold the above screenshot uses to determine the mAP and recall rates. As mentioned earlier in this status report, we verified that inference runs at 115 FPS by logging the time right before inference begins and right after it completes, which is well above our original target. We also verified that bboxes can be sent over Ethernet to the RPi every 10 ms using the same logging technique.
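The timing measurement and the confidence cutoff described above can be sketched roughly as follows. This is an illustration rather than the code in trt_demo.py: `infer` is a placeholder for whatever function runs the TRT engine, and the `(bbox, score, label)` tuple layout is an assumption.

```python
import time
from statistics import mean
from typing import Any, Callable, Dict, Iterable, List, Tuple


def measure_latency(infer: Callable[[Any], Any],
                    frames: Iterable[Any]) -> List[float]:
    """Time each infer() call, reading the clock right before and right after."""
    latencies = []
    for frame in frames:
        start = time.perf_counter()
        infer(frame)  # placeholder for the real inference call
        latencies.append(time.perf_counter() - start)
    return latencies


def summarize(latencies: List[float]) -> Dict[str, float]:
    """Convert per-frame latencies (seconds) to mean milliseconds and FPS."""
    avg = mean(latencies)
    return {"mean_ms": avg * 1e3, "fps": 1.0 / avg}


def filter_by_confidence(detections: List[Tuple[list, float, str]],
                         threshold: float = 0.5) -> List[Tuple[list, float, str]]:
    """Keep (bbox, score, label) tuples whose score meets the threshold."""
    return [d for d in detections if d[1] >= threshold]


if __name__ == "__main__":
    # Dummy workload standing in for inference on ten frames.
    stats = summarize(measure_latency(lambda f: sum(range(1000)), range(10)))
    print(stats)
```

An average latency of 8.7 ms corresponds to 1 / 0.0087 ≈ 115 FPS, which is how the two figures quoted in this report relate to each other.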

In other words, testing of the ML subsystem is complete and our metrics have been verified (within reason).

My individual progress is on schedule.

Next week, I hope to help my teammates finalize integration so that we can test the rest of our project.
