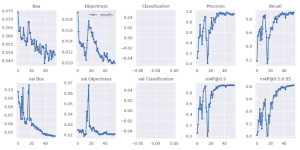

This past week, I spent most of my time setting up Google Colab and training the YOLOv7 and YOLOv7-tiny models. As expected, the YOLOv7 model has been tuning well, but the metrics for the YOLOv7-tiny model are not as strong. Since I’m ahead of schedule, I’ve been focusing on finding ways to improve the recall metric for both models instead of running many tuning trials, so that I don’t run out of compute units too early. I’ve started with the soda cans dataset. Below are images showing the current best-performing tunings for both models and the overall tuning graphs:

YOLOv7

YOLOv7-tiny


For the tiny model, I got the tuning to this point by increasing the learning rate. I plan to run another test with an increased learning rate and more epochs, since the last run doesn’t seem to have trained long enough to converge. I may also decrease the IoU threshold.
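As a rough illustration of why a lower IoU threshold can raise recall, here is a minimal Python sketch. It assumes the threshold in question is the one used to decide whether a predicted box counts as a match for a ground-truth box during evaluation. The boxes and the `iou` and `recall` helpers are made up for illustration and are not taken from the YOLOv7 code.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def recall(preds, truths, iou_thr):
    """Fraction of ground-truth boxes matched by an unused prediction
    whose IoU with that box is at least iou_thr (greedy matching)."""
    used = set()
    hits = 0
    for t in truths:
        best_i, best_iou = None, 0.0
        for i, p in enumerate(preds):
            if i in used:
                continue
            v = iou(p, t)
            if v > best_iou:
                best_i, best_iou = i, v
        if best_i is not None and best_iou >= iou_thr:
            used.add(best_i)
            hits += 1
    return hits / len(truths) if truths else 0.0


# Hypothetical boxes: the second prediction is offset from its target,
# giving an IoU of 64 / 136 (about 0.47).
truths = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0, 0, 10, 10), (22, 22, 32, 32)]

print(recall(preds, truths, 0.5))  # 0.5: the offset box is rejected
print(recall(preds, truths, 0.4))  # 1.0: the offset box now counts
```

The trade-off is that a looser threshold also accepts less precisely localized boxes, so the recall gain should be weighed against how tight the boxes need to be.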

Like my teammates, I also worked on the design presentation slides, incorporating feedback from our previous presentation, and acted as a sanity checker for the overall design.

I’m currently on schedule based on the Gantt chart, but tuning models is always unpredictable. Next week, I plan to spend most of my time finishing training for both models and, if the microSD card arrives in time, running inference on the Jetson Orin Nano.
