Main Accomplishments for This Week

- Design presentation

- Initial integration of the ML and CV components for basic ASL alphabet testing

- Confirmation of inventory items for purchase

Risks & Risk Management

- There are currently no significant risks at the team level, so no mitigation is needed at this time. Individual team members have concerns within their respective roles, but none serious enough to jeopardize the entire project.

Design Changes

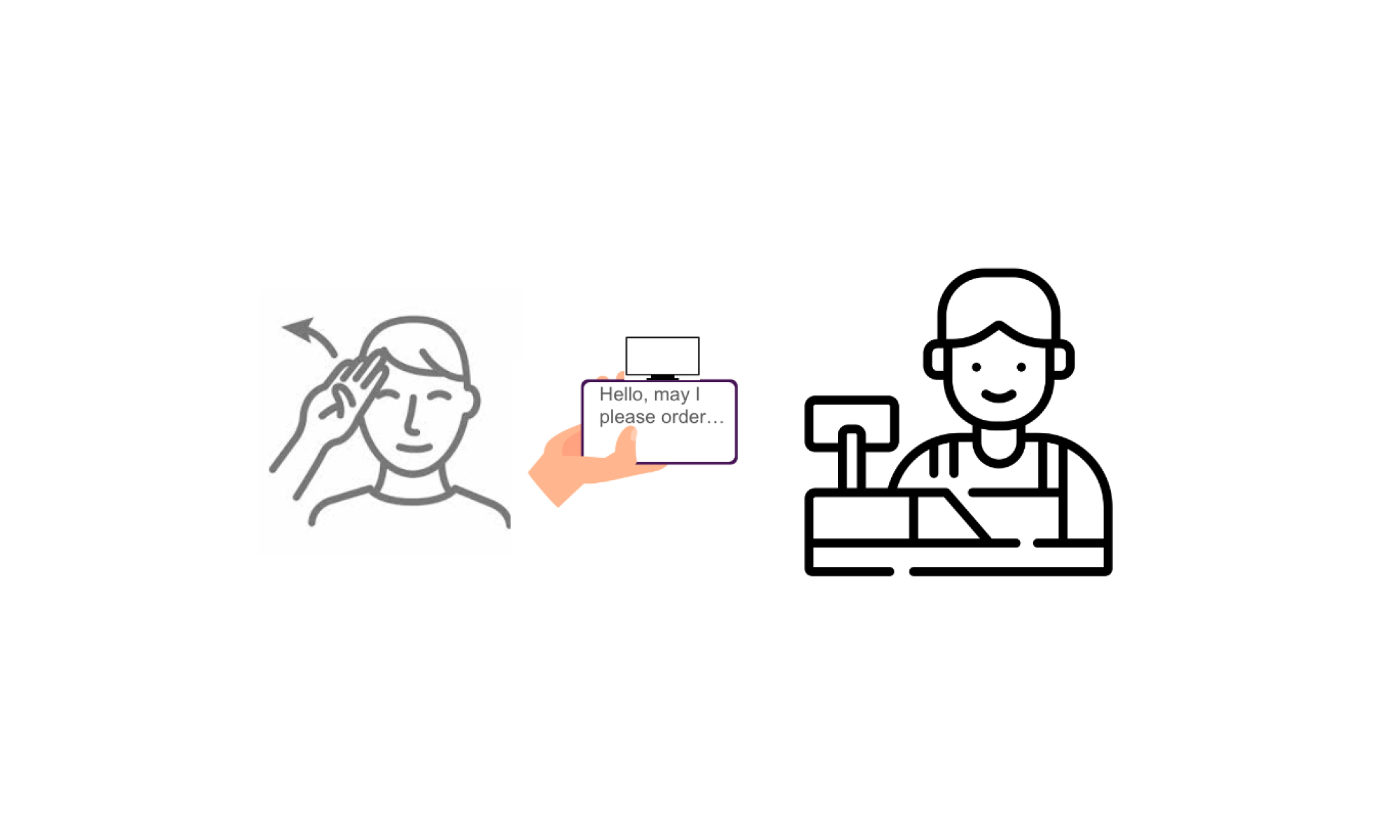

- Natural language processing (NLP) has been added to the software design. Because sign language does not translate directly into full, syntactically complete sentences, we realized we need a machine learning algorithm for grammar correction to produce proper translations. We intend to use open-source code once we understand the underlying NLP computation, and we plan to implement it in a later stage, specifically after the ASL ML algorithm and the CV programming are complete. Although this expands the software scope somewhat, all team members have agreed to contribute to this part together to minimize its impact on the overall timeline.

- We have confirmed three reach goals for after the MVP is complete: (1) speech-to-text, (2) an end-of-sentence signal from the ASL user (a flash of light or an audio notification), and (3) facial recognition to enhance the ASL translations. All three aim to make conversation between the ASL user and the receiver smoother and more fluid.

Schedule Changes

- 1 more week added for Leia & Sejal to implement and train the NLP model

- 2 more weeks added (starting in the same week we plan to reach MVP) for Ran to add the speech-to-text feature

- Updated schedule: https://docs.google.com/spreadsheets/d/1eBY0F7bD37ePsoAr9xaq0xKkDXdvM-4sQ33pZXoQqZg/edit#gid=0

Additional – Status Report 2

Part A was written by Ran, Part B by Sejal, and Part C by Leia.

Part A: By its nature, our project supports public health and welfare by enabling effective communication for sign language users. In a health context, both obtaining and expressing accurate information about material requirements, medical procedures, and preventive measures are vital. Our project facilitates this communication, contributing to users' physical well-being. More importantly, we aim to improve the psychological well-being of sign language users by giving them a sense of inclusion and fairness in daily social interactions. In terms of welfare, the project's high portability and wide range of use cases give users efficient access to basic needs such as education, employment, community services, and healthcare. Moreover, we are making every effort to ensure that the mechanical and electronic components are safe to use: the plastic frame of our phone attachment will be 3D printed with rounded corners, and the supporting battery will operate at a low, human-safe voltage.

Part B: Our project prioritizes cultural sensitivity, inclusivity, and accessibility to meet the diverse needs of sign language users in various social settings. Through image processing, the system aims for clear and accurate gesture recognition across different environments. The product will promote mutual understanding and respect among users from different cultural backgrounds, uniting them through effective communication. Additionally, recognizing the importance of ethics in technology development, the product will prioritize privacy and data security, for example by implementing data protection measures and keeping data practices transparent throughout the user journey. By building trust and transparency, the product will foster positive social relationships and user confidence in the technology. Ultimately, the product aims to break down communication barriers and promote social inclusion by enabling seamless interaction through sign language translation, meeting the needs of diverse social groups and supporting inclusive communication in social settings.

Part C: Our product is meant to be manufactured and distributed at very low cost. The complete package is a free mobile application and a phone attachment, which will be 3D printed and requires no screws, glue, or even assembly. The user can simply put the attachment on or take it off at any time, even when the phone has a case. The product’s most expensive component is the Arduino, at about $30, and we expect the total hardware cost to be less than $100. Beyond keeping production costs minimal, because the product’s purpose is to promote equity and diversity, it will not be distributed exclusively. Purchasing it should be comparable to buying any other useful everyday item. If it enters the market, it should not necessarily affect the existing goods and services for the deaf and hard-of-hearing communities. However, our product and software are optimized for the Apple ecosystem. Because all of our team members use Apple products, the project has potential for cross-platform support but will not be tested on other platforms. This may impose a cost on users who do not use Apple operating systems. Still, since Apple products are popular and widely used, we believe our product remains economically reasonable overall.