Progress

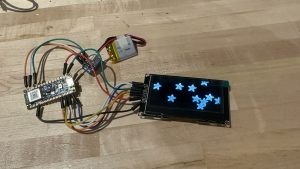

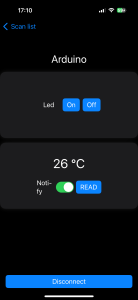

The components for our product have been ordered through the purchasing form: an Arduino Nano 33 BLE, an OLED 2.42” display module, an E-Ink 2.7” display, a 3.7V 2000mAh lithium polymer battery, and a breadboard and jumper wire kit. So far, the two screens and the battery have arrived, and hopefully the rest of the parts will arrive this coming week. In the meantime, I’ve continued planning how I’ll connect everything and learning sign language on YouTube.

I’ve also been practicing the Swift language and the Xcode environment through the Apple Developer tutorials. Specifically, there are three features I’m learning to integrate into the mobile app for our MVP, which also serve as a backup in case our future attempts at integration across the app, Arduino, machine learning, and computer vision go awry: (1) retrieving data from the internet, such as from URLs, so we can port a web app into a mobile app, (2) recognizing multi-touch screen gestures like taps, drags, and touch and hold, and (3) recognizing hand gestures from the phone camera with machine learning.

With Ran, I am also trying to figure out how to install our app on our phones for testing. She pointed out that the iPhone simulator in Xcode has no camera support, so we are working on getting the app onto our own phones.

Next Steps

The third feature mentioned in the Progress section needs further analysis and communication with team members. Its performance is still uncertain, and it is unclear how it would integrate with our ASL-adapted computer vision and machine learning. For now, its primary use is to get our app to use the phone’s camera.

My plan is to get a working mobile app with functional buttons that lead to the settings page and the ASL page, where a small window in the corner shows what the phone’s front-facing camera sees. This will be broken down into further steps. Additionally, once I obtain the rest of the purchased components, I will connect the Arduino to the app over BLE. I’ll attach an LED to the Arduino and see if the mobile app can control it. After that, I’ll hook a screen up to the Arduino, control the screen from the Arduino, and then control the screen from the app. I realized that it’s still too early to try to use CAD, so my priorities have shifted to working on the mobile app and operating the hardware.
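
As a rough sketch of the LED step, the Arduino side could treat each byte the app writes over BLE as a command. The command values, `DeviceState`, and `applyCommand` below are hypothetical names for illustration, not a settled protocol. The code is plain C++ so the logic can be checked on a computer before the board arrives; on the Arduino, the byte would come from a BLE characteristic write and the resulting state would drive the LED pin.

```cpp
#include <cstdint>

// Hypothetical one-byte commands the app would write over BLE (assumed values).
constexpr std::uint8_t kCmdLedOff = 0x00;
constexpr std::uint8_t kCmdLedOn  = 0x01;

// Minimal model of what the Arduino tracks; a screen field could be added later.
struct DeviceState {
    bool ledOn = false;
};

// Applies one received command byte to the state.
// Returns true if the byte was a known command, false if it was ignored.
bool applyCommand(DeviceState& state, std::uint8_t commandByte) {
    switch (commandByte) {
        case kCmdLedOff:
            state.ledOn = false;
            return true;
        case kCmdLedOn:
            state.ledOn = true;
            return true;
        default:
            // Unknown bytes leave the state unchanged.
            return false;
    }
}
```

Keeping the command handling separate from the BLE and pin code means the same function could later grow extra commands for the screen without changing how the app sends bytes.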