Main Accomplishments for This Week

- 3D printing of phone attachment completed

- Fully functional web application (laptop-based)

- Progress on displaying text via Bluetooth

- Final presentation

Risks & Risk Management

The biggest risk now is that getUserMedia, the browser function we rely on for video input, can only be used in secure contexts (HTTPS or localhost). However, to let phones open the webpage, we are currently deploying the web application on AWS over HTTP, so the webcam does not work when we open the web app on a phone. We are looking for ways to solve this issue, but if we cannot fix it, we will fall back to a laptop-based implementation.
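The restriction can be detected at page load, so the app can show a clear message instead of a webcam that silently fails. Below is a minimal TypeScript sketch, not our deployed code: the helper name `cameraSupport` and its return labels are hypothetical, while `window.isSecureContext` and `navigator.mediaDevices.getUserMedia` are the standard browser properties it would be fed from.

```typescript
// Hypothetical helper (illustration only): classifies why camera capture
// may be unavailable, given two facts read from the browser.
type CameraSupport = "ok" | "insecure-context" | "no-getusermedia";

function cameraSupport(
  isSecureContext: boolean,
  hasGetUserMedia: boolean
): CameraSupport {
  // Browsers only allow camera capture on HTTPS pages and on localhost.
  if (!isSecureContext) return "insecure-context";
  // The page is secure, but the browser does not provide the API.
  if (!hasGetUserMedia) return "no-getusermedia";
  return "ok";
}

// In the browser, the two inputs would come from:
//   window.isSecureContext
//   typeof navigator.mediaDevices?.getUserMedia === "function"
```

With the current HTTP deployment, a phone would hit the "insecure-context" branch, which matches the behavior we observed.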

Design Changes

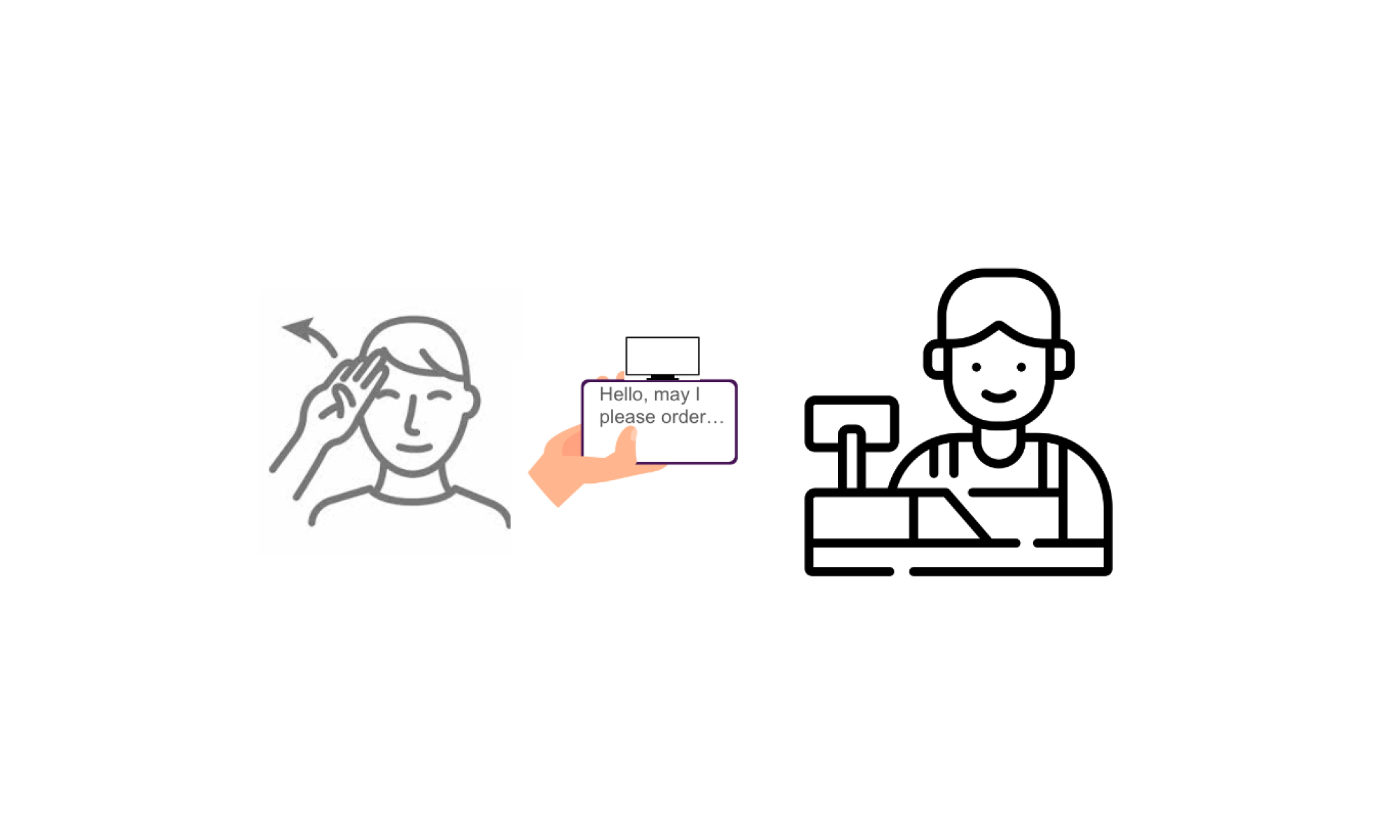

We decided to switch from a mobile app to a web app due to the lack of MediaPipe support on iOS. An accurate prediction requires pose landmarks, for which iOS support is limited. With a web app, we can incorporate the necessary landmarks in a way we are already familiar with.

Schedule Changes

No schedule changes

Additional question for this week

We conducted unit tests on the CV module. Because our ML function depends on the CV implementation, we tested ML accuracy and latency after CV-ML integration.

For CV recognition requirements, we tested gesture recognition accuracy under two metrics: signing distance and background distraction.

- For signing distance: we manually signed at different distances and verified whether hand and pose landmarks were accurately displayed on the signer. We took 5 samples at each distance: 1 ft, 1.5 ft, 2 ft, 2.5 ft, 3 ft, 3.5 ft, and 4 ft. Result: proper landmarks appeared 100% of the time at distances between 1 and 3.9 ft.

- For background distractions: we manually created different background settings and verified whether hand and pose landmarks were accurately displayed on the signer. We took 20 test samples in total, 2 at each setting from 1 to 10 distractors in the background. Result: landmarks were drawn on the target subject 95% of the time (19 out of 20 samples).

For translation latency requirements, we measured time delay on the web application.

- For translation latency: we timed the interval between the appearance of landmarks and the display of English text. We took 90 samples: each team member signed each of the 10 phrases 3 times. Result: word predictions appeared an average of 1100 ms after a gesture, and translations appeared an average of 2900 ms after a sentence.
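A measurement like this could also be instrumented inside the web app. The TypeScript sketch below is one possible approach under stated assumptions, not a record of how the numbers above were collected: the class `LatencyRecorder` and its method names are hypothetical, and the clock is passed in so the logic can be checked without a browser.

```typescript
// Hypothetical latency recorder (illustration only).
class LatencyRecorder {
  private now: () => number;
  private startedAt: number | null = null;
  private samplesMs: number[] = [];

  // The clock defaults to wall time; a fake clock can be injected for tests.
  constructor(now: () => number = () => Date.now()) {
    this.now = now;
  }

  // Call when landmarks first appear on the signer.
  markLandmarksShown(): void {
    this.startedAt = this.now();
  }

  // Call when the English text is displayed; records one sample.
  markTextShown(): void {
    if (this.startedAt === null) return; // no matching start event
    this.samplesMs.push(this.now() - this.startedAt);
    this.startedAt = null;
  }

  // Number of completed samples.
  count(): number {
    return this.samplesMs.length;
  }

  // Mean latency in milliseconds, or NaN when nothing was recorded.
  meanMs(): number {
    if (this.samplesMs.length === 0) return NaN;
    const total = this.samplesMs.reduce((sum, ms) => sum + ms, 0);
    return total / this.samplesMs.length;
  }
}
```

Calling `markLandmarksShown()` and `markTextShown()` once per signed phrase would yield one sample per trial, and `meanMs()` would give the average reported for the run.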

For the ML accuracy requirements,

- We plotted the training and validation accuracies obtained while training on the dataset: training accuracy reached about 96% and validation accuracy about 93%. This suggests that the model should have high accuracy, with possibly some slight overfitting.

- To test accuracy while using the model, each team member signed each phrase 3 times, along with 3 complex sentences that used these phrases in a structure similar to typical sign language. Result: 82 of 90 phrases and 8 of 9 sentences were translated accurately. Note that sentence accuracy is somewhat difficult to judge because a sentence might have multiple correct interpretations.

- While integrating additional phrases into the model, we also performed some informal testing. After training a model with 10 additional phrases, we signed each of the new signs and found that accuracy was significantly lower than that of the original model, which used only the 10 signs from the DSL-10 dataset: only about one in three translations was accurate. Limiting the addition to a single phrase gave the same low accuracy, even after we ensured the data was consistent. As a result, we decided that our MVP would consist of only the original 10 phrases, because that model showed high accuracy.
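For reference, the trial counts reported in this section convert to percentages as sketched below. The function name `accuracyPercent` is our own illustrative choice; the counts are the ones given above.

```typescript
// Percentage of correct trials, rounded to one decimal place.
function accuracyPercent(correct: number, total: number): number {
  if (total <= 0) throw new Error("total must be positive");
  return Math.round((correct / total) * 1000) / 10;
}

const phraseAccuracy = accuracyPercent(82, 90);     // phrase translation trials
const sentenceAccuracy = accuracyPercent(8, 9);     // sentence translation trials
const distractorAccuracy = accuracyPercent(19, 20); // background-distraction trials
```

This works out to about 91.1% for phrases, 88.9% for sentences, and 95% for the background-distraction test.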