- What did you personally accomplish this week on the project?

In the first week, I discovered a mistake in my earlier approach to integrating the CoreML model with the iOS app. I had inserted the model call in the wrong function, so the model was never invoked and the results were always null. After I moved it to the correct function, multiple errors were raised and the integration remained largely unsuccessful. Moreover, MediaPipe provides a hand landmarker package for iOS development but not a pose landmarker, and my search for alternatives, including QuickPose and Apple's Vision framework, did not turn up a clearly feasible option. So, after meeting with professors and TAs and discussing internally as a group, we officially decided to change our integration plan to a web application built on the Django framework.

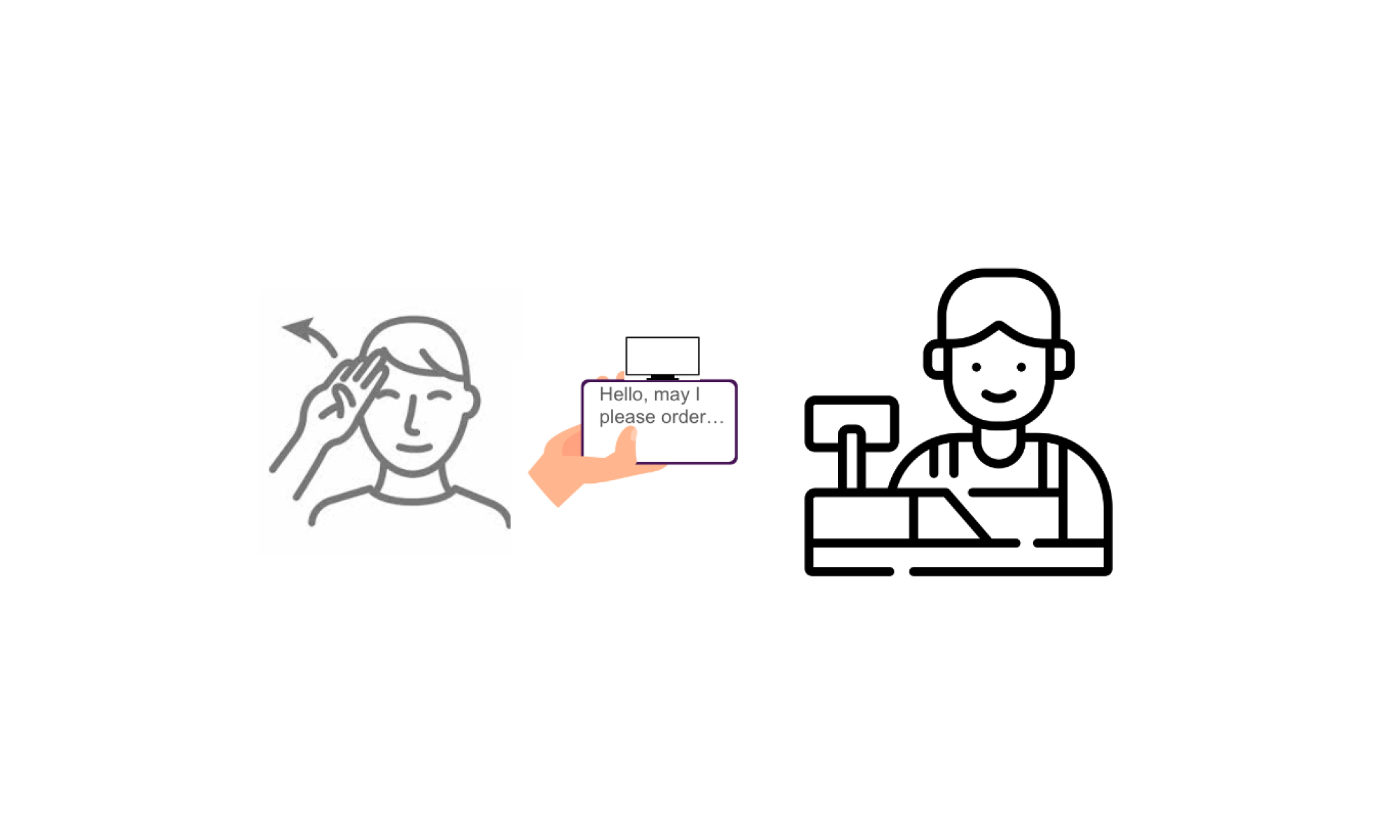

Accordingly, I was responsible for the overall codebase setup, the JavaScript functions for real-time hand and pose recognition, and the data transmission between frontend and backend. Over the five days starting this Monday, I set up the Django framework, ported the original MediaPipe CV module from Python to equivalent JavaScript, and got requests and responses working between the frontend and backend.
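To illustrate the frontend-to-backend data transmission, here is a minimal sketch of how the backend side might decode landmarks posted by the JavaScript recognizer. It uses only the Python standard library; in a Django view, the `body` argument would typically be `request.body`. The payload shape (`hand` and `pose` keys holding lists of `x`, `y`, `z` points) and the names `Landmark`, `parse_landmark_payload`, and `build_response` are assumptions made for this example, not our actual code.

```python
import json
from dataclasses import dataclass


@dataclass
class Landmark:
    """One landmark point; the x/y/z field names are an assumed payload format."""
    x: float
    y: float
    z: float


def parse_landmark_payload(body: bytes) -> dict[str, list[Landmark]]:
    """Decode a JSON request body into hand and pose landmark lists.

    Assumed (hypothetical) payload shape:
    {"hand": [{"x": 0.1, "y": 0.2, "z": 0.0}, ...], "pose": [...]}
    A missing key is treated as an empty list.
    """
    data = json.loads(body)
    result: dict[str, list[Landmark]] = {}
    for key in ("hand", "pose"):
        points = data.get(key, [])
        result[key] = [
            Landmark(float(p["x"]), float(p["y"]), float(p["z"])) for p in points
        ]
    return result


def build_response(landmarks: dict[str, list[Landmark]]) -> bytes:
    """Build a small JSON reply reporting how many points were received."""
    counts = {key: len(points) for key, points in landmarks.items()}
    return json.dumps({"status": "ok", "counts": counts}).encode("utf-8")
```

Keeping the parsing in a plain function like this, separate from the view, makes it possible to test the payload handling without starting the web server.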

- Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

My progress is on schedule. However, since we are entering the final weeks of the project, I still need to move as quickly as possible to leave time for further integration.

- What deliverables do you hope to complete in the next week?

User experience survey distribution and collection

Improving accuracy

Improving UI

- Additional question for new module learning

To build our gesture and pose detection feature, we relied heavily on the MediaPipe module. While MediaPipe is a powerful library for generating landmarks on an input image, video, or live video stream, it took us a couple of weeks to study its official Google developer website, read its documentation, and follow its tutorials to build the dependencies and experiment with the example code. We also watched YouTube videos to learn the overall pipeline for implementing a CV module for gesture and pose recognition.