Accomplishments

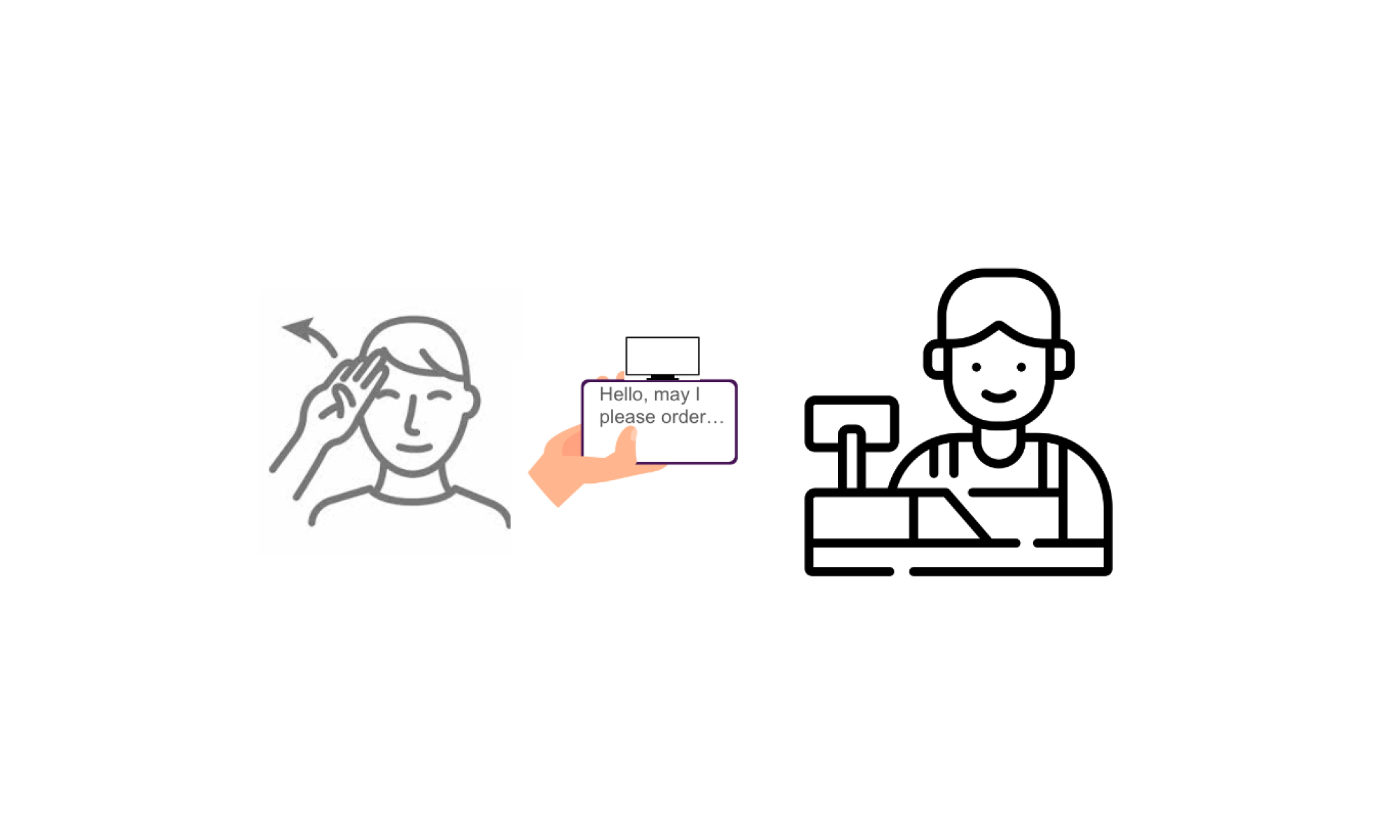

This week, my team gave our interim demo and began integrating our respective parts. To prepare for the demo, I made some small modifications to the prompt sent in the API request to the LLM so that its output sentences made sense given the predicted gestures.

Since we are using CoreML to run machine learning within our iOS app, my teammate Ran and I worked on the Swift code to integrate machine learning into our app. I converted my model to CoreML and wrote a function that behaves the same way as the large language model step in our machine learning processing.

I also continued debugging why the expanded dataset from last week wasn’t working. I re-recorded some videos, making sure that MediaPipe recognized all the landmarks in the frame, that the lighting was good, and that the format matched the rest of the dataset. Again, although the training and validation accuracies were high, the model recognized very few of the gestures when I tested gesture prediction with it. This might suggest that the model is not complex enough to handle the expanded amount of data. So, I continued trying to add layers to make the model more complex, but there didn’t seem to be any improvement.

My progress is on schedule as we are working on integrating our parts.

Next week, I am going to continue working with Ran to integrate the ML into the iOS app. I will also try to fine-tune the model structure further to improve the existing model, and perform the testing described below.

Verification and Validation

Tests run so far: I have done some informal testing by signing in front of the webcam and checking whether it displayed the signs accurately.

Tests I plan to run:

- Quantitatively measure latency of gesture prediction

    - Since one of our use case requirements was a 1-3 second latency for gesture recognition, I will measure how long it takes for a prediction to appear after a gesture is signed.

- Quantitatively measure latency of LLM

    - As with gesture prediction, it is important to measure how long the LLM takes to process the prediction and output a sentence, so I will measure this as well.

- Quantitatively measure accuracy of gesture prediction

    - Since one of our use case requirements was a gesture prediction accuracy of > 95%, I will measure how often the prediction matches the gesture that was signed.

- Qualitatively determine accuracy of LLM

    - Since there is no single right or wrong LLM output, this testing is qualitative: I will judge whether the output sentence makes sense given the direct predictions and reads as a conversational sentence.
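The two latency measurements above could be collected with a small timing harness along the following lines. This is only a sketch under stated assumptions: `measure_latency`, `summarize`, and `fake_predict` are hypothetical names, and `fake_predict` is a stand-in for the real gesture model or LLM request. It times only the call itself, so an end-to-end number (from the gesture being signed to the text appearing) would need timestamps taken in the app.

```python
import statistics
import time


def measure_latency(predict, samples):
    """Call `predict` once per sample and return each call's latency in seconds."""
    latencies = []
    for sample in samples:
        start = time.perf_counter()
        predict(sample)
        latencies.append(time.perf_counter() - start)
    return latencies


def summarize(latencies, limit_s=3.0):
    """Report the mean, the worst case, and the fraction of calls within the limit."""
    within = sum(1 for t in latencies if t <= limit_s)
    return {
        "mean_s": statistics.mean(latencies),
        "max_s": max(latencies),
        "fraction_within_limit": within / len(latencies),
    }


def fake_predict(sample):
    """Hypothetical stand-in for the real model call; replace with the actual one."""
    time.sleep(0.01)
    return "hello"


latencies = measure_latency(fake_predict, samples=[None] * 5)
summary = summarize(latencies)  # limit_s=3.0 is the upper end of the 1-3 second requirement
print(summary)
```

The same harness can wrap either the gesture prediction call or the LLM request, which keeps the two latency numbers comparable.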

I will run the above tests under various lighting conditions, against different backgrounds, and with distractions to ensure the system meets our use case requirements in different settings, simulating where the device might be used.
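One way to keep the accuracy results from different settings separate is to tag every trial with its condition and compute accuracy per condition. The sketch below assumes each trial is recorded as a `(condition, signed gesture, predicted gesture)` tuple; the function names and the sample trials are made up for illustration and are not real results.

```python
from collections import defaultdict


def accuracy_by_condition(trials):
    """Return the fraction of correct predictions for each test condition."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for condition, signed, predicted in trials:
        total[condition] += 1
        if signed == predicted:
            correct[condition] += 1
    return {condition: correct[condition] / total[condition] for condition in total}


def meets_requirement(accuracies, threshold=0.95):
    """Flag which conditions exceed the > 95% accuracy requirement."""
    return {condition: acc > threshold for condition, acc in accuracies.items()}


# Illustrative placeholder trials only, not measured data.
trials = [
    ("bright", "hello", "hello"),
    ("bright", "thanks", "thanks"),
    ("dim", "hello", "hello"),
    ("dim", "thanks", "please"),
]

accuracies = accuracy_by_condition(trials)
passed = meets_requirement(accuracies)
print(accuracies, passed)
```

Reporting accuracy per condition rather than as one pooled number makes it easier to see whether a particular setting, such as dim lighting, is responsible for missed gestures.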