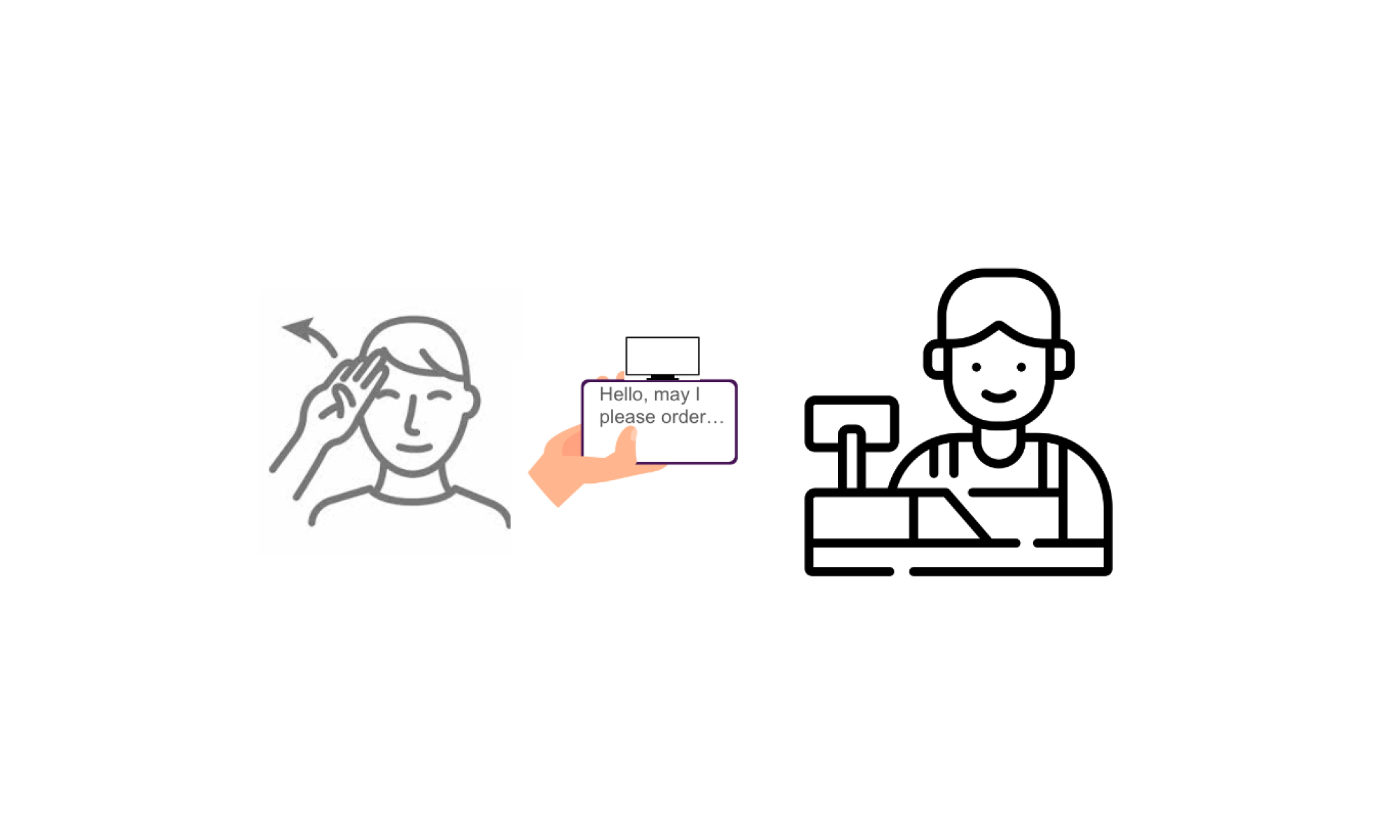

This week I built a simple ML model and combined it with Ran’s computer vision algorithm for hand detection. I trained a CNN on the Sign Language MNIST dataset from Kaggle. I then passed the video frames processed by the OpenCV and MediaPipe code to the trained model, predicted which character was being signed, and displayed that prediction on the webcam feed, as shown in the image above.

(Code on GitHub: https://github.com/LunaFang1016/GiveMeASign/tree/feature-cv-ml)
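To make the prediction step concrete, here is a minimal sketch of turning a classifier's output into a letter to overlay on the frame. It is not taken from the repository. It assumes the model outputs 26 probabilities indexed by the original Sign Language MNIST labels (0 = A through 25 = Z, with no training examples for J and Z because those signs involve motion). The names `label_to_letter`, `top_prediction`, and `min_confidence` are hypothetical.

```python
# Illustrative sketch only; names and the 0.6 threshold are assumptions.

def label_to_letter(label: int) -> str:
    """Map a Sign Language MNIST label (0-25) to its letter.

    The dataset contains no examples for 9 (J) or 25 (Z), since those
    signs require motion, but the label numbering still spans A-Z.
    """
    if not 0 <= label <= 25:
        raise ValueError(f"label out of range: {label}")
    return chr(ord("A") + label)


def top_prediction(probabilities, min_confidence=0.6):
    """Return (letter, confidence) for the most likely class.

    Returns None when the best score is below min_confidence, so the
    caller can skip drawing a label on uncertain frames.
    """
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    confidence = probabilities[best]
    if confidence < min_confidence:
        return None
    return label_to_letter(best), confidence
```

A model trained on 24 remapped classes instead would need a different lookup table, so the mapping should be checked against how the labels were encoded during training.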

Training this simple model got me thinking about what the full system needs beyond it, such as handling both static and dynamic signs and combining letters into words to form readable sentences. After further research on which neural network architecture to use, I decided on a combination of a CNN for static signs and an LSTM for dynamic signs. I also gathered datasets covering both static and dynamic signs from a variety of sources (How2Sign, MS-ASL, DSL-10, RWTH-PHOENIX Weather 2014, Sign Language MNIST, ASLLRP).
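One possible way to combine per-frame letter predictions into words is to emit a letter only after it has been stable for several consecutive frames, and to treat a stretch of frames with no hand as a word break. The sketch below is only an illustration of that idea, not the approach we have settled on. The function name `frames_to_text` and the `min_run` parameter are hypothetical, and `None` is assumed to mean "no hand detected".

```python
# Illustrative sketch only; frames_to_text and min_run are assumptions.

def frames_to_text(frame_letters, min_run=5):
    """Collapse a sequence of per-frame predictions into text.

    A letter is emitted once, when it has been predicted for min_run
    consecutive frames. A run of min_run frames with no hand (None)
    inserts a single space as a word boundary.
    """
    out = []
    current = object()  # sentinel that never equals a real prediction
    run = 0
    for letter in frame_letters:
        if letter == current:
            run += 1
        else:
            current, run = letter, 1
        if run == min_run:
            if letter is None:
                # Avoid leading or repeated spaces.
                if out and out[-1] != " ":
                    out.append(" ")
            else:
                out.append(letter)
    return "".join(out).strip()


frames = ["H"] * 5 + ["A"] * 2 + ["I"] * 6 + [None] * 5 + ["O"] * 5 + ["K"] * 7
print(frames_to_text(frames))  # prints "HI OK"
```

Short flickers such as the two "A" frames are ignored. A limitation of this scheme is that doubled letters (as in "HELLO") only register if the prediction changes or the hand drops out between them.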

My progress is on schedule, as I’ve been working on model testing and gathering data from existing datasets.

Next week, I plan to continue training the model on the gathered datasets, with the goal of displaying more accurate predictions of not just letters but also words and phrases. We will also be working on the Design Review presentation and report.