Public Health, Social, and Economic Impacts

Concerning public health, our product will address the growing concern with digital distractions and their impact on mental well-being. By helping users monitor their focus and productivity levels during work sessions and their correlation with various environmental distractions such as digital devices, our product will give users insights into their work and phone usage, and potentially help improve their mental well-being in work environments and relationship with digital devices.

For social factors, our product addresses an issue that affects almost everyone today. Social media bridges people across various social groups but is also a significant distraction designed to efficiently draw and maintain users’ attention. Our product aims to empower users to track their focus and understand what factors play into their ability to enter focus states for extended periods of time.

The development and implementation of the Focus Tracker App can have significant economic implications. Firstly, by helping individuals improve their focus and productivity, our product can contribute to overall efficiency in the workforce. Increased productivity often translates to higher output per unit of labor, which can lead to economic growth. Businesses will benefit from a more focused and productive workforce, resulting in improved profitability and competitiveness in the market. Additionally, our app’s ability to help users identify distractions can lead to a better understanding of time management and resource allocation, which are crucial economic factors in optimizing production. In summary, our product will have a strong impact on economic factors by enhancing workforce efficiency, improving productivity, and aiding businesses in better-managing distractions and resources.

Progress Update

The Emotiv headset outputs metrics for various performance states via their EmotivPRO API including attention, relaxation, frustration, interest, cognitive stress, and more. We plan to compute metrics to understand correlations (perhaps inverse) between various performance metrics. Given further understanding of how some performance metrics interact with one another; for example, the effects of interest in a subject or cognitive stress on attention could prove to be extremely useful to users in evaluating what factors are affecting their ability to maintain focus on the task at hand. We also plan to look at this data in conjunction with Professor Dueck’s focus vs. distracted labeling to understand what threshold of performance metric values denote each state of mind.

On Monday, we met with Professor Dueck and her students to get some more background on how she works with her students and understands their flow states/focus levels. We discussed the best way for us to collaborate and collect data that would be useful for us. We plan to create a simple Python script that will record the start and end of focus and distracted states with timestamps using the laptop keyboard. This will give us a ground truth of focus states to compare with the EEG brainwave data provided by the Emotiv headset.

This week we also developed a concrete risk mitigation plan in case the EEG Headset does not produce accurate results. This plan integrates microphone data, PyAudioAnalysis/MediaPipe for audio analysis, and Meta’s LLaMA LLM for personalized feedback into the Focus Tracker App.

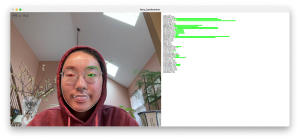

We will use the microphone on the user’s device to capture audio data during work sessions and implement real-time audio processing to analyze background sounds and detect potential distractions. The library PyAudioAnalysis will help us extract features from the audio data, such as speech, music, and background noise levels. MediaPipe will help us with real-time audio visualization, gesture recognition, and emotion detection from speech. PyAudioAnalysis/MediaPipe will help us categorize distractions based on audio cues and provide more insight into the user’s work environment. Next, we will integrate Meta’s LLaMA LLM to analyze the user’s focus patterns and distractions over time. We will train the LLM on a dataset of focus-related features, including audio data, task duration, and other relevant metrics. The LLM will generate personalized feedback and suggestions based on the user’s focus data.

In addition, we will provide actionable insights such as identifying common distractions, suggesting productivity techniques, or recommending changes to the work environment that will further help the user improve their productivity. Lastly, we will display the real-time focus metrics and detect distractions on multiple dashboards similar to the camera and EEG headset metrics we have planned.

To test the integration of microphone data, we will conduct controlled experiments where users perform focused tasks while the app records audio data. We will analyze the audio recordings to detect distractions such as background noise, speech, and device notifications. Specifically, we will measure the accuracy of distraction detection by comparing it against manually annotated data, aiming for a detection accuracy of at least 90%. Additionally, we will assess the app’s real-time performance by evaluating the latency between detecting a distraction and providing feedback, aiming for a latency of less than 3 seconds.

Lastly, we prepared for our design review presentation and considered our product’s public health, social, and economic impacts. Overall, we made great progress this week and are on schedule.