This week, I improved the user interface and overall data presentation in preparation for the Interim Demo.

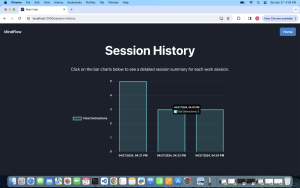

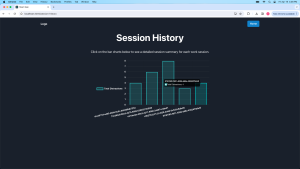

I added a graph to the React frontend to visualize the distraction data collected from yawning, sleep, and gaze detection. This interactive graph, built with the Chart.js library, dynamically displays the frequency of each type of distraction over the current session. Hovering over the graph shows how many times each distraction has occurred and the exact time of each occurrence. For now, the graph displays all the data from the current session.

To help users track the duration of their work or study sessions, I added a session timer to the webpage. The timer is displayed on the Current Session Page, starts automatically when the session begins, and updates in real time.

I also created a Calibration Page so that calibration is kept separate from the Current Session Page. This page features a simple interface with a green button that, when clicked, triggers the run.py Python script to start the OpenCV face detection process. This calibration step tunes the distraction detection algorithms to the user’s current environment and camera setup.
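As a rough sketch of how a backend handler could launch the script when the button is clicked: the helper names below are hypothetical and not taken from our codebase, and the path to run.py is assumed to be relative to the working directory. The idea is to start the script as a separate process so the web request returns right away while the calibration window opens.

```python
import subprocess
import sys
from pathlib import Path


def build_calibration_command(script_path="run.py"):
    """Build the command that runs the calibration script.

    Uses the same Python interpreter that is running the server,
    so the script sees the same installed packages (e.g. OpenCV).
    """
    return [sys.executable, str(Path(script_path))]


def start_calibration(script_path="run.py"):
    """Launch the script without blocking (hypothetical helper).

    Popen returns immediately; the caller can keep the returned
    process handle to check on or stop the calibration later.
    """
    return subprocess.Popen(build_calibration_command(script_path))
```

Returning the process handle, rather than waiting for the script to finish, keeps the page responsive while the OpenCV window is open.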

To provide more comprehensive session summaries, I modified the data payload structure to include a “frequency” item, which stores the number of times each type of distraction occurred during the session. Once the user stops the current session, they are routed to the Session Summary Page, which displays statistics on their distraction frequencies.
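To illustrate the idea, here is a minimal sketch of a payload that carries a “frequency” item. Only the “frequency” key comes from our actual payload; the other key names (`session_id`, `events`, `type`, `timestamp`) and the function name are assumptions made for this example.

```python
from collections import Counter


def build_session_payload(session_id, events):
    """Attach per-type distraction counts to a session payload.

    `events` is a list of dicts, each with a "type" key such as
    "yawn", "sleep", or "gaze" (hypothetical field names).
    """
    frequency = Counter(event["type"] for event in events)
    return {
        "session_id": session_id,
        "events": events,
        # Number of times each distraction type occurred.
        "frequency": dict(frequency),
    }


example_events = [
    {"type": "yawn", "timestamp": 1.0},
    {"type": "sleep", "timestamp": 5.0},
    {"type": "yawn", "timestamp": 9.0},
]
payload = build_session_payload("demo", example_events)
print(payload["frequency"])
```

Storing the counts in the payload means the Session Summary Page can show them directly instead of recounting events on the frontend.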

Lastly, I worked with Rohan on integrating the EEG data into the Session Summary Page. Using Django REST API endpoints, we enabled the real-time display of EEG data. We created an EEGEvent model that stores the epoch_timestamp, the formatted_timestamp, and the other data needed to display flow state detection results to the user.
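The relationship between the two timestamp fields can be sketched in plain Python. This is a stand-in dataclass, not our actual Django model: the `in_flow` field, the class name, the UTC time zone, and the timestamp format are all assumptions for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class EEGEventSketch:
    """Plain-Python stand-in for the EEGEvent model (illustrative only)."""

    epoch_timestamp: float  # seconds since the Unix epoch
    in_flow: bool  # hypothetical flag for the detected flow state
    formatted_timestamp: str = field(init=False)

    def __post_init__(self):
        # Derive a human-readable timestamp from the epoch value (UTC assumed).
        moment = datetime.fromtimestamp(self.epoch_timestamp, tz=timezone.utc)
        self.formatted_timestamp = moment.strftime("%Y-%m-%d %H:%M:%S")


event = EEGEventSketch(epoch_timestamp=0, in_flow=True)
print(event.formatted_timestamp)  # 1970-01-01 00:00:00
```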

The Current Session Page, Session Summary Page, and Calibration Page look like the following:

(A window pops up for the calibration when the user is on this page. This is not displayed in the picture above.)

My progress is going well and I am on schedule. In the coming week, I will continue working with Rohan to display the EEG data on the frontend in the Current Session and Session Summary Pages. The plan is to make a pie chart of the time the user spends in a flow state vs. not in a flow state, and to also display this information as a graph.
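One possible way to compute the numbers behind such a pie chart is sketched below. It assumes the EEG data arrives as time-sorted `(epoch_timestamp, in_flow)` pairs and that each state holds until the next sample; both the input shape and the function name are assumptions, since this part is not built yet.

```python
def flow_time_split(samples):
    """Total seconds spent in and out of a flow state.

    `samples` is a time-sorted list of (epoch_timestamp, in_flow)
    pairs. Each interval between consecutive samples is credited
    to the state recorded at the start of that interval.
    """
    totals = {"flow": 0.0, "not_flow": 0.0}
    for (start, in_flow), (end, _) in zip(samples, samples[1:]):
        key = "flow" if in_flow else "not_flow"
        totals[key] += end - start
    return totals


split = flow_time_split([(0, True), (10, True), (30, False), (40, True)])
print(split)  # {'flow': 30.0, 'not_flow': 10.0}
```

The two totals can be passed directly as the two slices of the pie chart.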