Having finished implementing all of the distraction and behavior detections, I spent time testing and verifying the integrated system. I also tuned the detection thresholds so that the detections work across different users.

My testing plan is outlined below, and the results of testing can be found here.

- Test among 5 different users

- Engage in each behavior 10 times over 5 minutes, leaving opportunities for the models to make true positive, false positive, and false negative predictions

- Record number of true positives, false positives, and false negatives

- Behaviors

  - Yawning: user yawns

  - Sleep: user closes eyes for at least 5 seconds

  - Gaze: user looks to left of screen for at least 3 seconds

  - Phone: user picks up and uses phone for 10 seconds

  - Other people: another person enters frame for 10 seconds

  - User is away: user leaves for 30 seconds, replaced by another user
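
The counts recorded in this plan can be turned into per-behavior precision and recall, which makes it easier to compare detections. Below is a minimal sketch of that calculation. The `DetectionCounts` class and its field names are hypothetical, not part of our codebase, and the numbers are placeholders rather than measured results.

```python
from dataclasses import dataclass


@dataclass
class DetectionCounts:
    """Hypothetical tally for one behavior, summed over all test users."""
    true_positives: int
    false_positives: int
    false_negatives: int

    def precision(self) -> float:
        # Of everything the detector flagged, how much was a real event?
        flagged = self.true_positives + self.false_positives
        return self.true_positives / flagged if flagged else 0.0

    def recall(self) -> float:
        # Of all real events, how many did the detector catch?
        actual = self.true_positives + self.false_negatives
        return self.true_positives / actual if actual else 0.0

    def f1(self) -> float:
        # Harmonic mean of precision and recall.
        p, r = self.precision(), self.recall()
        return 2 * p * r / (p + r) if (p + r) else 0.0


# Placeholder counts for illustration only, not results from our testing.
example = DetectionCounts(true_positives=8, false_positives=2, false_negatives=2)
print(
    f"precision={example.precision():.2f} "
    f"recall={example.recall():.2f} "
    f"f1={example.f1():.2f}"
)
```

A detection with many false positives, such as the "user is away" event, would show up here as low precision even when its recall is high.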

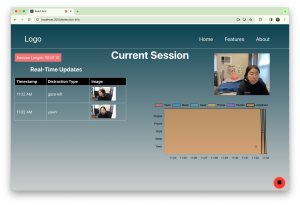

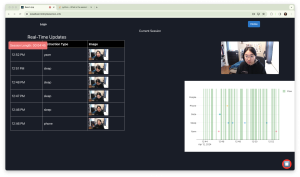

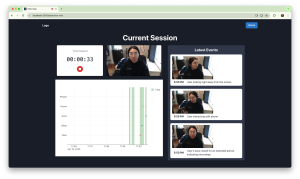

After completing testing, I focused my effort on integration, getting the flow state and distractions displayed on the current session page. I also made significant improvements to the UI to meet our usability and usefulness requirements. This required a lot of experimentation with different UI libraries and visualization options. I’ve attached some screenshots of the iterative process of designing the current session page.

My next focus is improving the session summary page and fully integrating the EEG headset with our web app. Currently, the EEG data visualizations use mock data that I generate myself. I would also like to improve the facial recognition component, since the “user is away” event currently produces many false positives. With these next steps in mind and the progress I have made so far, I am on track and on schedule.

One of the new skills I gained by working on this project is how to organize a large codebase. In the past, I’ve worked on smaller projects individually, which gave me leeway to be sloppier with version control, documentation, and code organization without major consequences. Working in a team of three on a significantly larger project with many moving parts, I improved my code management, collaboration, and version control skills with git.

This is also my first experience fully developing a web app, implementing everything from the backend to the frontend. From this experience, I’ve gained a lot of knowledge about full-stack development, React, and Django.

In terms of learning strategies and resources, Google, Reddit, Stack Overflow, YouTube, and Medium are where I found most of my information on how others use and debug Python libraries and React for frontend development. It was especially helpful to read Medium tutorials and watch YouTube tutorials that walk through a “beginner” project built with a library or tool I wanted to use. From there, I was able to get familiar with the basics and apply that knowledge to our capstone project, which has a much bigger scope.