To better understand how to characterize flow states, I talked with friends in various fields and synthesized insights from several experts in cognitive psychology and neuroscience, including Cal Newport and Andrew Huberman. Focus can be seen as a gateway to flow, and flow can be thought of as a performance state: training for sports or music is often difficult and requires conscious focus, but once someone has mastered a skill and is performing for an audience, they may enter a flow state. A flow state also typically involves a loss of reflective self-consciousness (non-judgmental thinking). Interestingly, Prof. Dueck described this lack of self-judgment as a key factor in flow states in music, and a friend I spoke with this past week described something strikingly similar in his experience with cryptography research. Flow states typically involve a task that is both second nature and enjoyable, one that is neither too easy or tedious nor overwhelmingly difficult. A person in a flow state may feel a more “energized” focus and complete absorption in the task at hand, and as a result may lose track of time.

Given our new understanding of the distinction between focus and flow states, I made some structural changes to our detection model, which previously targeted focus and now targets flow. First, instead of classifying inputs as Focused, Neutral, or Distracted, the model now outputs just Flow or Not in Flow. Second, last week I kept only samples with a high quality EEG signal at the parietal lobe sensor (Pz), which is relevant to focus. Here is the confusion matrix for classifying Flow vs Not in Flow using only the Pz sensor:

Research has shown that increased theta activity in the frontal areas of the brain and moderate alpha activity in the frontal and central areas are characteristic of flow states. This week, I continued filtering on the parietal lobe sensor and added the two frontal sensors (AF3 and AF4), keeping only samples where all three sensors have high signal quality. Here is the confusion matrix for classifying Flow vs Not in Flow using the Pz, AF3, and AF4 sensors:

This model uses data from the Pz, AF3, and AF4 sensors. Each input vector contains the overall power at each sensor along with the power in each of the 5 frequency bands at each sensor, and the model classifies it as either Flow or Not in Flow. It achieves a precision of 0.8644, a recall of 0.8571, and an F1 score of 0.8608. The overall accuracy is improved over the previous model, but the total amount of data is lower because the additional quality conditions filter out more samples.
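To make the input format and the reported metrics concrete, here is a minimal sketch in Python. It is not our actual pipeline: the sample layout, the band names, the `MIN_QUALITY` threshold, and both function names are assumptions made for illustration. If the description above is read as one overall power value plus 5 band powers per sensor, each vector has 3 × 6 = 18 features. The confusion-matrix counts at the end are also hypothetical; they were chosen only because they reproduce ratios matching the reported precision, recall, and F1.

```python
SENSORS = ("Pz", "AF3", "AF4")
# Hypothetical band names; the report only says there are 5 bands.
BANDS = ("theta", "alpha", "beta_low", "beta_high", "gamma")
# Hypothetical quality threshold on an assumed 0-4 contact quality scale.
MIN_QUALITY = 4


def build_feature_vector(sample):
    """Return 18 features, or None if any sensor's quality is too low."""
    if any(sample["quality"][s] < MIN_QUALITY for s in SENSORS):
        return None  # sample is dropped, so the model makes no prediction
    features = []
    for s in SENSORS:
        features.append(sample["power"][s])  # overall power at the sensor
        features.extend(sample["bands"][s][b] for b in BANDS)  # band powers
    return features


def precision_recall_f1(tp, fp, fn):
    """Standard definitions, treating Flow as the positive class."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Made-up sample with placeholder values, just to exercise the functions.
good_sample = {
    "quality": {s: 4 for s in SENSORS},
    "power": {s: 1.0 for s in SENSORS},
    "bands": {s: {b: 0.2 for b in BANDS} for s in SENSORS},
}
bad_sample = dict(good_sample, quality={"Pz": 4, "AF3": 2, "AF4": 4})

vector = build_feature_vector(good_sample)
dropped = build_feature_vector(bad_sample)

# Hypothetical counts, NOT the real confusion matrix.
precision, recall, f1 = precision_recall_f1(tp=102, fp=16, fn=17)
```

The quality gate is also why stricter filtering shrinks the dataset: a sample is discarded as soon as any one of the three sensors falls below the threshold.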

I plan on applying Shapley values, a concept that originated in game theory and has in recent years been applied to explainable AI. This will give us a sense of which of our inputs are most relevant to the final classification. It will be interesting to see whether what our model is picking up on ties into the existing neuroscience research on flow states or whether it is seeing something new or different.
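As a rough illustration of the idea, the sketch below computes exact Shapley values by enumerating every subset of features, with "missing" features replaced by a baseline value. Everything here is an assumption for illustration: the toy linear model, its weights, the three feature names, and the all-zeros baseline are hypothetical and are not our classifier. Exact enumeration grows exponentially with the number of features, so for a real model with many inputs a sampling-based approximation is typically used instead.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values of predict at x, relative to a baseline input."""
    n = len(x)

    def value(subset):
        # Features in the subset take their real value; the rest take the baseline.
        masked = [x[j] if j in subset else baseline[j] for j in range(n)]
        return predict(masked)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Weight of a coalition of this size in the Shapley formula.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when added to coalition s.
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


# Hypothetical toy model: a linear score over three made-up features.
FEATURES = ("AF3_theta", "AF4_theta", "Pz_alpha")
WEIGHTS = [0.5, -1.0, 2.0]
BIAS = 0.1


def toy_predict(v):
    return sum(w * a for w, a in zip(WEIGHTS, v)) + BIAS


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phis = shapley_values(toy_predict, x, baseline)
attribution = dict(zip(FEATURES, phis))
```

For a linear model, each feature's Shapley value reduces to its weight times its distance from the baseline, and the values sum to the difference between the prediction at `x` and the prediction at the baseline. That makes the toy case easy to sanity check before trying the same idea on the real classifier.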

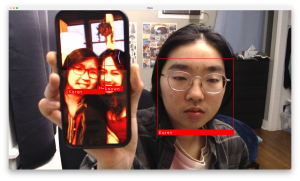

My Information Theory professor, Pulkit Grover, introduced me this week to a researcher in his group who is working on making EEG headsets more equitable by improving how they interface with different types of hair, specifically coarse Black hair, which often prevents standard EEG electrodes from getting a high quality signal. This is relevant to us because one of the biggest issues and highest risk factors in our project is getting a good EEG signal: any kind of hair can interfere with the electrodes, which are meant to make skin contact. I also tested our headset on a bald friend to understand whether our signal quality issue is due to the headset itself or to hair interference, and found that the signal quality was much higher on him. Because we only run the model on high quality data, hair interference with non-bald participants would leave the model making very few predictions during our demo. For our final demo, we are therefore thinking of inviting this friend to wear the headset to make for a more compelling presentation.