This week we stayed on schedule for our software development goals. Since we were finalizing our parts orders this week, we delayed some tasks that were dependent on hardware (such as building the mount for our camera and conducting tests of our computer vision models with our camera). We also worked on developing a better-defined API to ensure that our different models could integrate seamlessly with each other. So far, we have not made any changes to the design of our system.

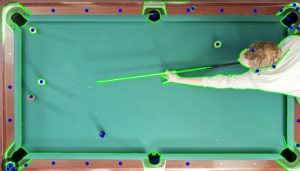

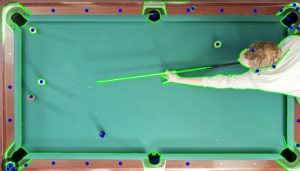

This week’s focus was on implementing the software for our project. This consists of developing our computer vision models to detect the different objects in our system (the pool table walls, the pool cue, and the different pool balls); creating a physics model to simulate a ball’s trajectory based on its interaction with the pool cue, walls, or other balls; and detecting AprilTags for localization and calibration.

Andrew focused on AprilTag detection to produce a coordinate frame to which our environment will be calibrated. Debrina used Hough line transforms to detect the pool table walls. Tjun Jet used OpenCV’s findContours method to detect the pool balls and the pool cue. He also researched different physics libraries and successfully modeled the trajectory of a ball bouncing off a wall. In the coming week, we will work on integrating these models and improving their accuracy. We will also begin designing how our output is translated to the projector and start building the frame for mounting our camera and projector.
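To make the wall-bounce case concrete, here is a minimal sketch of how a ball’s path off the walls could be traced. It is not our actual implementation: it assumes an axis-aligned rectangular table, a point-sized ball, no spin, and perfectly elastic bounces, and the names `reflect` and `trace_path` are made up for illustration. The core idea is the standard reflection formula v' = v - 2(v·n)n, where n is the unit normal of the wall.

```python
import math


def reflect(velocity, wall_normal):
    """Mirror a 2D velocity about a wall: v' = v - 2 (v . n) n."""
    nx, ny = wall_normal
    length = math.hypot(nx, ny)
    if length == 0:
        raise ValueError("wall normal must be non-zero")
    nx, ny = nx / length, ny / length  # normalize so the formula holds
    vx, vy = velocity
    dot = vx * nx + vy * ny
    return (vx - 2 * dot * nx, vy - 2 * dot * ny)


def trace_path(position, direction, width, height, bounces=2):
    """Return the start point followed by each wall contact point.

    Assumes the table spans [0, width] x [0, height] and that the
    starting position lies inside it (illustrative simplification).
    """
    x, y = position
    dx, dy = direction
    points = [(x, y)]
    for _ in range(bounces):
        # Travel "time" until the ball reaches a vertical / horizontal wall.
        tx = math.inf if dx == 0 else ((width - x) / dx if dx > 0 else -x / dx)
        ty = math.inf if dy == 0 else ((height - y) / dy if dy > 0 else -y / dy)
        t = min(tx, ty)
        if math.isinf(t):
            break  # the ball is not moving
        x, y = x + dx * t, y + dy * t
        points.append((x, y))
        # Reflect off whichever wall was hit (both, if it hit a corner).
        if tx <= ty:
            dx, dy = reflect((dx, dy), (1.0, 0.0))
        if ty <= tx:
            dx, dy = reflect((dx, dy), (0.0, 1.0))
    return points


if __name__ == "__main__":
    for point in trace_path((1.0, 1.0), (1.0, 1.0), width=5.0, height=2.0):
        print(point)
```

The list of contact points is the kind of polyline that could later be drawn by the projector. A physics library would replace this sketch once friction, spin, and ball-to-ball collisions need to be modeled.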

Currently, the most significant risk to the success of our project is that the models we have developed may be less effective on a live video feed. When testing our models on JPEG or PNG images, we are able to obtain accurate results. Testing our models’ performance and accuracy on a live video feed is therefore one of our biggest next steps for the coming week. Regardless, we believe that if we can set up an environment with consistent lighting and positioning, our models will remain accurate once we adjust the relevant parameters.

Below, we outline our product’s implications for public welfare, social factors, and economic factors.

Part A (Written by: Debrina Angelica, dangelic)

CueTips addresses some crucial aspects of public health, safety, and welfare by promoting mental well-being and physical activity. Learning to play 8-ball pool can be challenging, especially for those looking to integrate into social circles quickly. By providing a tool that predicts and visualizes ball trajectories, our product alleviates the stress associated with the learning process, fostering a positive and supportive environment for individuals eager to join friend groups. Moreover, in our hectic and demanding lives, playing pool is meant to be a relaxing activity. Our solution enhances this aspect by flattening the learning curve, making the game more accessible and enjoyable. Furthermore, the interactive nature of the game encourages physical activity, promoting movement and coordination. This not only contributes to overall physical health, but also serves as a form of recreational exercise. Additionally, for older individuals, engaging in activities that stimulate cognitive functions is crucial in reducing the risk of illnesses like dementia. The strategic thinking and focus required in playing pool can contribute to maintaining mental acuity, providing a holistic approach to well-being across different age groups and skill levels. In essence, our product aligns with considerations of public health, safety, and welfare by fostering a positive learning experience, promoting relaxation, enhancing physical activity, and supporting cognitive health.

Part B (Written by: Andrew Gao, agao2)

In terms of social factors, our product solution relates in three primary ways: responsiveness, accessibility, and community building. Pool is a game that requires immediate feedback and a high level of responsiveness and skill. When a player aims at a ball, they instantaneously predict the trajectory of the ball on a conscious or subconscious level. Because of this requirement for instantaneous feedback, we need our solution to produce predictions as fast as the human eye can process changes (under roughly 150-200 ms). To keep the game engaging and responsive, our system must run at this speed or faster. In terms of accessibility, we want our product solution to reach a wide range of users. This motivates our choice to output visual trajectory predictions, which both beginner and expert pool players can understand, use, and train with. Finally, our product solution builds community through the way people use it. Pool is inherently a community-building game played between multiple players. Our system helps people get better at pool and thus contributes to an activity that is a major social interest in many parts of the world.

Part C (Written by: Tjun Jet Ong, tjunjeto)

CueTips presents a unique and innovative solution that serves a diverse range of users, from beginners seeking to learn pool to seasoned professionals aiming to enhance their skills. Because our target audience spans different age groups and skill levels, our product is inclusive and accessible, which creates room for substantial economic potential. To distribute our product, we could strategically partner with entertainment venues, gaming centers, and sports bars to target players who are not only passionate about the game of pool, but also seeking interactive and technologically advanced gaming experiences to improve their gameplay. At the same time, we could offer these CV pool tables for direct-to-consumer sales, capitalizing on the growing market of individuals looking for home entertainment solutions. Through a combination of upfront hardware sales, subscription models, and potential collaborations with game developers for exclusive content, there is a lot of potential for our product to contribute significantly to the economy while providing an engaging and versatile pool gaming experience for users at all skill levels and ages.

Here are some images related to our progress for this week: