Team Status Report for April 20, 2024

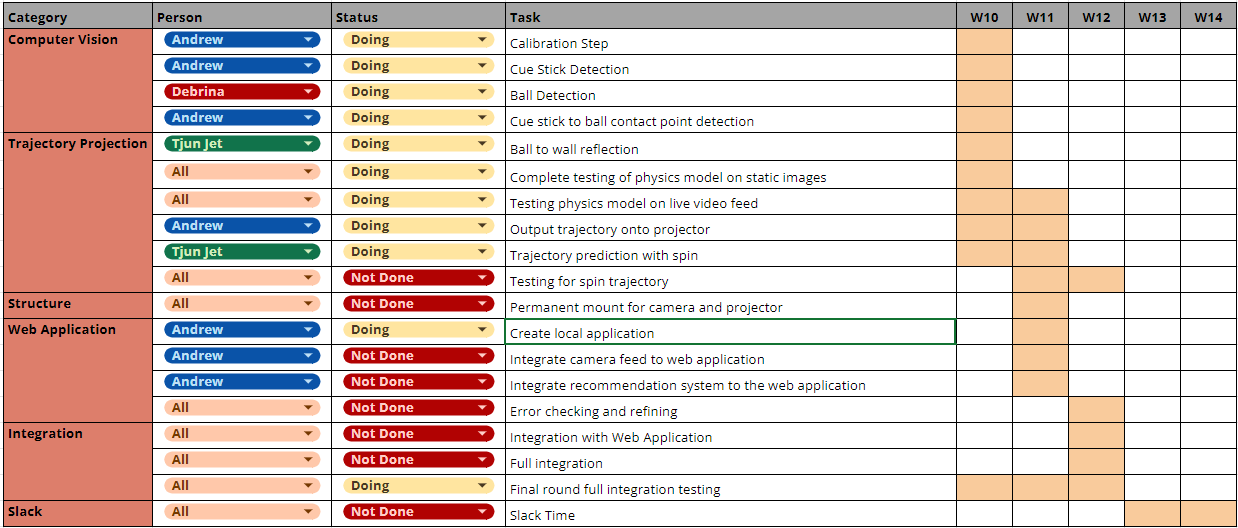

This week, our team is on schedule. We have not made any modifications to our system’s existing design; however, we did make some improvements to our testing plans. The main focus of this week was improving cue stick detection, implementing the spin feature and conducting tests and validation of our system.

This week, we made further improvements to the cue stick detection model, which we had identified as a limiting factor that caused inaccuracies in our trajectory outputs. The new implementation is much more accurate than our previous method. We also extended our web application with a user interface where the user can select the location on the cue ball that they would like to hit. This location is sent to our physics model, which will calculate the trajectory of the cue ball based on the amount of spin it will have upon impact. We are basing our spin model on an online physics reference and are currently implementing its equations. We intend to complete this in the following week and to test the feature at the end of that week.

In terms of testing and validation, we utilized the following procedures to conduct our tests. We are still in the process of finishing up our testing to gather more data, which will be presented in the final presentation on April 22nd.

Trajectory Accuracy

- Aim cue ball to hit the target ball at the wall.

- Use CV to detect when the target ball has hit the wall.

- Take note of the target ball’s coordinate when it hits the wall.

- Compare the difference between this coordinate and the predicted coordinate of the wall collision.

Ball Detection Accuracy

- Position balls on various locations on the table, with special focus on target balls located on edges of walls, near pockets, and balls placed right next to each other.

- Apply the ball detection model and measure the distance between the actual ball’s center and the center of the perceived ball.

Latency

Time our code from when we first receive a new frame from the camera to the time it finishes calculating a predicted trajectory. We are timing our code for different possible cases of trajectories (ball to ball, ball to wall, ball to pocket) since the different cases may have different computation times.

The most significant risk we face now is the alignment of the projection with the table. We noticed that the projector may introduce a horizontal distortion, so the detected balls may not be projected at the correct location on the table. While this would not affect the accuracy of the calculations in our backend system, the projection may not give the user the most accurate depiction.

In the following week, we will be coming up with better ways to calibrate the projector to yield more consistent projection alignments. Furthermore, we will continue with our testing and validation efforts and potentially improve our system if there are any specifications that can be improved. As mentioned earlier, we will also continue to implement and improve the spin feature and conduct tests on this feature.

Debrina’s Status Report for April 20, 2024

This week I am on schedule. On the project management side, this week, we’ve spent a decent amount of time planning the final presentation that is scheduled to take place on April 22nd. Furthermore, we’ve worked on making our system more robust and prepared a demo video to present in the final presentation.

In terms of progress related to our system, I continued running tests to identify and resolve issues that would cause our system to crash on some edge cases. On the structural side, this week I also installed lighting in our environment and created a permanent mount for our projector and camera, while leaving their vertical position adjustable so that we can still move the camera and projector as we debug. A big issue this week was calibration of the projector. Our original plan was to manually align the projector to the table by modifying the projector’s zoom ratio and position after the table image is made full screen on our laptop screen. However, this approach is limited because the projector’s settings are not as flexible as we had hoped. Hence, another solution we tried this week to speed up the calibration process is to stream the video from our camera through Flask, where we would have more freedom in adjusting the zoom ratio and position of the video feed and hence be able to better align it with the table. This is still an ongoing effort that I will continue to work on in the coming week.

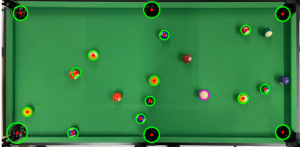

Another big focus for this week was on making further improvements to the ball detections. In order to have more stable ball detections, I implemented a state detection algorithm that would detect whether the positions of the balls on the table have shifted, in which case the ball detections would be re-run and a new set of ball locations would be passed into the physics model for computations. Currently, the state changes are based on the position of the cue ball. Hence, if a different ball moves, but it isn’t the cue ball, the ball detections would not be updated. This is a difficult issue to solve, however, as it would be computationally intensive to match the different balls across different ball detections. I will be working on an improvement for this limitation in the coming week to make the algorithm more robust.
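The cue-ball-based state check described above could look roughly like the following. This is a minimal sketch, not our actual implementation: the class name `CueBallStateDetector` and the pixel threshold are hypothetical, chosen only to illustrate re-running ball detection when the cue ball has moved further than detection jitter would explain.

```python
import math


class CueBallStateDetector:
    """Flags a table-state change when the cue ball moves beyond a threshold."""

    def __init__(self, threshold_px=5.0):
        # Hypothetical tolerance (in pixels) for frame-to-frame detection jitter.
        self.threshold_px = threshold_px
        self.last_center = None

    def has_changed(self, cue_center):
        """Return True when ball detection should be re-run.

        `cue_center` is an (x, y) tuple, or None if no cue ball was found.
        """
        if cue_center is None or self.last_center is None:
            # Appearing or disappearing counts as a change.
            changed = cue_center != self.last_center
        else:
            changed = math.dist(cue_center, self.last_center) > self.threshold_px
        if changed:
            # Only update the reference position on a real change, so slow
            # drift is still compared against the last accepted state.
            self.last_center = cue_center
        return changed
```

As noted above, a check like this only sees the cue ball, so a different ball moving on its own would not trigger a re-detection.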

Besides the projector calibration and improving the ball state detection, in the coming week I also plan to continue conducting tests and fix any bugs that may still remain in the backend.

As you’ve designed, implemented and debugged your project, what new tools or new knowledge did you find it necessary to learn to be able to accomplish these tasks? What learning strategies did you use to acquire this new knowledge?

On the technical side, I learned a lot about computer vision, Python development, and object oriented programming in Python. I tried out many different tools from the OpenCV library to implement the object detections, since they often yielded inaccurate results. I had to research different methods, implement them, and compare their results to determine which ones were more accurate and under what lighting and image conditions. Sometimes the meaning of the parameters used in the different methods was not very clear. To learn more about these, I would either experiment with different parameter values and inspect the changes they made, or consult blogs that discussed a sample implementation of the tool in the author’s own project. In terms of Python development, I learned a lot about API design and object oriented programming. Each detection model that I implemented was a class that stored parameters for keeping track of historical data and returned detections based on that data. I also tried to standardize the APIs to ease our integration efforts. Furthermore, since our system would be a long-running process, I focused on implementing algorithms with lower complexity so that our backend would run faster. Most of my learning came from trial and error: experimenting with the tools, observing the behavior of the implementation, and reading documentation and implementation examples to fix bugs or adjust the parameters used by the different tools.

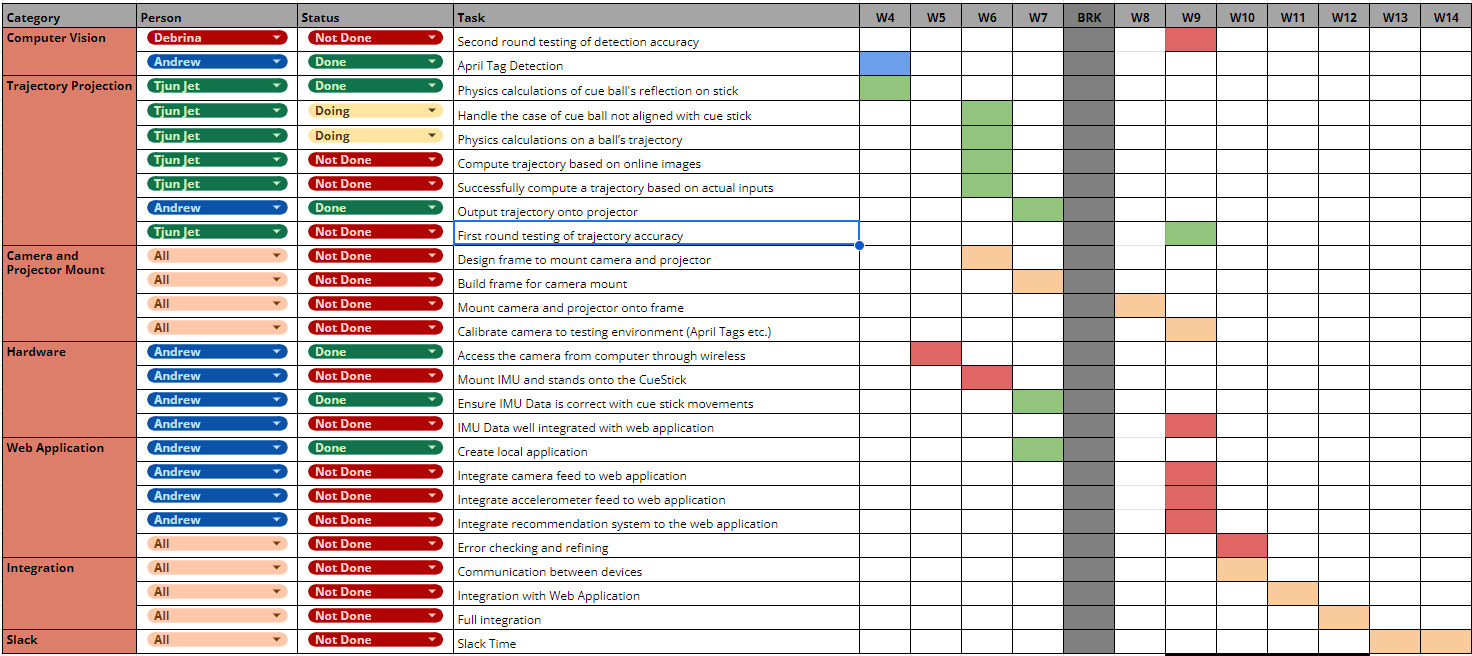

Team Status Report for April 6, 2024

This week, our team is on schedule. We had our initial project demo and received some helpful feedback regarding some improvements we could make in our system. Furthermore, our team also managed to get the projection running and output the trajectories on the table. The main focus of this week, however, was the testing and continued efforts for the full integration of our system. We continued to run tests and simulate different cases to identify any that may yield undesired behavior in our system. This week we also planned out a more formal approach to testing and verification of our system that is more rigorous and aligns well with our initial design plans. These plans will be outlined below.

The most significant risks we face now would be if our trajectory predictions are not as accurate as we hope for them to be. If this were to happen, it is likely that the inaccuracies are a result of our ‘perfect’ assumptions when implementing our physics model. Hence, we would need to modify our physics model to account for some imperfections such as imperfect collisions or losses due to friction.

This week, we have not made any modifications to our system’s existing design.

In the following week, we plan to conduct more rigorous testing of our system. Furthermore, we plan to do some woodworking to create a permanent mount for our camera and projector after we do some manual calibration to determine an ideal spot for their position.

The following is our outlined plan for testing:

Latency

For testing the latency, we plan on timing the code execution for each frame programmatically. We will time the code by logging the begin and end times of each iteration of our backend loop using Python’s time module. As soon as a frame comes in from the camera via OpenCV, we will record the time returned by a timer such as time.perf_counter (time.clock was removed in Python 3.8) in a log. When the frame finishes processing and the predictions are generated, we will take the time again and append it to the log. We intend to keep this running while we test out different possible cases for collisions (ball to ball, ball to wall, and ball to pocket). Next, we will find the difference (in milliseconds) between the start and end times of each iteration. Our use case requirement regarding latency was that end-to-end processing would be within 100ms. By ensuring that each individual frame takes less than 100ms to be processed through the entire software pipeline, we ensure that the user perceives changes to the projected predictions within 100ms, which makes the predictions appear instantaneous.
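A minimal sketch of this timing loop is shown below. It assumes `time.perf_counter` as the timer (an assumption on our part; `time.clock` is not available in Python 3.8 and later), and `process_frame` is a hypothetical stand-in for the full detection and physics pipeline applied to one frame.

```python
import time

LATENCY_BUDGET_MS = 100.0  # end-to-end requirement from our use case


def time_pipeline(frames, process_frame):
    """Return the per-frame processing latency in milliseconds.

    `process_frame` is a hypothetical callable standing in for the whole
    backend loop body (object detection followed by trajectory prediction).
    """
    latencies_ms = []
    for frame in frames:
        start = time.perf_counter()
        process_frame(frame)
        end = time.perf_counter()
        latencies_ms.append((end - start) * 1000.0)
    return latencies_ms


def summarize(latencies_ms, budget_ms=LATENCY_BUDGET_MS):
    """Report the worst case, the mean, and how many frames exceeded the budget."""
    return {
        "max_ms": max(latencies_ms),
        "mean_ms": sum(latencies_ms) / len(latencies_ms),
        "over_budget": sum(1 for t in latencies_ms if t > budget_ms),
    }
```

Keeping one log per collision case (ball to ball, ball to wall, ball to pocket) and summarizing each separately would show whether any single case pushes the worst-case latency past the budget.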

Trajectory Prediction Accuracy

For testing the trajectory prediction accuracy, we plan to manually verify this. The testing plan is as follows: we will take 10 shots straight-on, and we will use the mounted camera to record the shots. In order to ensure that the shots are straight, we will be using a pool cue mount to hold the stick steady. Then, we will manually look through the footage and trace out the actual trajectory of each ball struck and compare it to the predicted trajectory. The angle between these two trajectories will be the deviation. Angle deviation from all 10 shots will be averaged, and this will be our final value to compare against the use-case specification of <= 2 degrees error.
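Once the actual and predicted trajectories have been traced, the deviation can be computed as the angle between their direction vectors. The sketch below is one possible way to do this; the function names are hypothetical, and it assumes each trajectory has already been reduced to a 2D direction such as `(dx, dy)`.

```python
import math

MAX_ERROR_DEG = 2.0  # use-case specification for average angular error


def angle_between_deg(predicted, actual):
    """Unsigned angle in degrees between two 2D direction vectors."""
    px, py = predicted
    ax, ay = actual
    cross = px * ay - py * ax
    dot = px * ax + py * ay
    # atan2 of cross and dot is well behaved for all angles, including 0 and 180.
    return abs(math.degrees(math.atan2(cross, dot)))


def mean_deviation_deg(shots):
    """Average deviation over a list of (predicted, actual) direction pairs."""
    return sum(angle_between_deg(p, a) for p, a in shots) / len(shots)


def meets_spec(shots, max_error_deg=MAX_ERROR_DEG):
    """True when the averaged deviation is within the specification."""
    return mean_deviation_deg(shots) <= max_error_deg
```

Using `atan2` on the cross and dot products avoids the loss of precision that `acos` has for nearly parallel vectors, which is the regime a 2 degree tolerance lives in.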

Object Detection Accuracy

For object detection accuracy, the test we have planned will measure the offset between the projected object and the real object on the pool table. Since the entire image will be scaled the same, we will use projections of the pool balls in order to test the object detection accuracy. The test will be conducted as follows: we will set up the pool table with multiple ball configurations, then take in a frame and run our computer vision system on it. Then, we will project the modified image with objects detected over the actual pool table. We will measure the distance between the real pool balls and projected pool balls (relative to each ball’s center) by taking a picture and measuring the difference manually. This will ensure that our object detection model is accurate and correctly captures the state of the pool table.

Debrina’s Status Report for April 6, 2024

This week I am on schedule. This week I resolved a few bugs in the physics model related to an edge case where a ball collision occurs near a wall. This caused the predicted ball location to be out of bounds (beyond the pool table walls). This was resolved using some extra casing on bounds checks. Furthermore I have been working on the ball detections and coming up with potential methods to distinguish between solids and stripes. For solid and stripe detection, I plan to use the colors of the balls in order to classify them. I took some inspiration from the group from a few semesters ago that did a similar project. These tasks are still works in progress which I intend to continue in the coming week.

This week our team also did some testing of our system. Although the system I worked on most was the object detection model, I helped Tjun Jet do some testing on the physics model. The testing we have done this week was rather informal as our goal was to identify edge cases in our physics model. To do this, we ran our system and placed the cue stick in multiple angles and orientations in order to ensure that each orientation and direction would allow for correct predicted trajectories. We wanted to identify any cases that would cause our backend to crash or produce incorrect outcomes. In coming weeks we will come up with more potential edge cases and do some more rigorous testing of the integrated system.

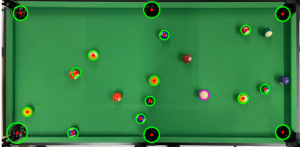

The testing I have done for my individual subsystem is evaluating the accuracy of the ball detections. So far, I have done informal evaluations of the detections in order to determine the lighting conditions under which the model achieves its best ball detection accuracy. Furthermore, I have evaluated the different cases in which some balls may fail to be detected. To detect these issues, I tried different positions of the balls and observed whether the detection model would perceive them properly (when the detection model runs, it draws circles around the balls that it detects and displays them using cv2’s imshow method). Some cases I tested, for example, were placing several balls side by side; placing balls near the pocket; and placing balls against the pool table’s walls. These tests were done mainly to ensure the proper basic functionality of the detection model.

In the following week, I will conduct tests that are more focused on the accuracy of the ball detections under different possible layouts. Furthermore, I will be testing the accuracy of the pocket locations and the wall detection. For the ball detection tests, the cases I will focus on are:

- When balls are right next to each other (this may make the model have trouble detecting them as circles)

- When balls are right against the walls

- When balls are next to the pocket

- When balls are inside the pocket (ensure they are not detected)

- When the cue ball is missing (ensure that no other ball is perceived to be a cue ball)

In order to test the accuracy of the ball detections in these different conditions, I will be projecting the detections onto the table and taking measurements of the difference between the ball’s centers and the centers of the detections. In our design proposal’s use case requirements, we specified a maximum error of 0.2in.
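Once the offsets have been measured, checking them against the requirement is simple arithmetic. The following sketch assumes the measured ball centers and projected detection centers have been recorded as `(x, y)` pairs in inches; the function names are hypothetical.

```python
import math

MAX_ERROR_IN = 0.2  # maximum allowed center offset, in inches


def center_errors(actual_centers, detected_centers):
    """Distance between each real ball center and its projected detection."""
    return [math.dist(a, d) for a, d in zip(actual_centers, detected_centers)]


def within_tolerance(errors, max_error=MAX_ERROR_IN):
    """True only if every measured offset is within the allowed error."""
    return all(e <= max_error for e in errors)
```

Reporting the largest offset per test case (balls touching, balls on the wall, balls near a pocket) would make it clear which layouts are hardest for the model.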

The procedure for testing the accuracy of the pocket detection will be similar to that of the balls.

In order to test the accuracy of the wall detections, I will also project the detections on the table and measure the distance between the endpoints of each wall to the edge of the actual wall. For this, we are also aiming for a maximum error of 0.2in.

These tests will allow me to evaluate the level of accuracy of our detection models, which will have an impact on the accuracy of our final trajectory predictions.

Debrina’s Status Report for March 30, 2024

This week our team continued our efforts to integrate the full system in order to have a smooth-running demo by Monday, April 1st. In terms of our schedule, I am on track.

I continued running tests of our backend on the live video and solving bugs that emerged due to edge cases, and I mainly worked on improving the pocket detection algorithm in the physics model. First, I modified it to account for the direction of the trajectory. I also modified the trajectory output when a ball is directed towards a pocket. Previously, the trajectory drawn would be between the ball’s center and the pocket’s center. However, this leads to an inaccurate trajectory; instead it is more natural to extrapolate the trajectory from the ball’s center to the point on the pocket’s radius which it intersects. Lastly, I make sure to check how close to the center of the pocket the intersection between a trajectory and the pocket is. This is because an intersection with the pocket doesn’t necessarily mean that the ball will be potted. An example to consider would be if the trajectory is right on the edge of the pocket’s radius. Even though there is an intersection, the ball will not be potted as it will not fall into the pocket. Hence, in this case, the trajectory would instead collide with a wall.
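The pocket logic described above can be sketched as a ray and circle intersection. This is an illustration under assumptions, not the code in our backend: the function name `pocket_hit` and the `pot_fraction` tuning value are hypothetical, and the pocket is modeled as a circle with a center and radius.

```python
import math


def pocket_hit(start, direction, pocket_center, pocket_radius, pot_fraction=0.5):
    """Intersect a trajectory ray with a pocket circle.

    Returns (entry_point, potted), or None if the ray misses the pocket.
    `pot_fraction` is a hypothetical tuning value: the ray must pass within
    pot_fraction * pocket_radius of the pocket center to count as potted.
    """
    dx, dy = direction
    norm = math.hypot(dx, dy)
    if norm == 0:
        return None
    dx, dy = dx / norm, dy / norm
    # Vector from the ball center to the pocket center.
    cx, cy = pocket_center[0] - start[0], pocket_center[1] - start[1]
    # Distance along the ray to the point closest to the pocket center.
    t_closest = cx * dx + cy * dy
    if t_closest < 0:
        return None  # the pocket is behind the ball, so direction matters
    # Perpendicular distance from the pocket center to the ray.
    miss = abs(cx * dy - cy * dx)
    if miss > pocket_radius:
        return None
    # Step back from the closest point to the first crossing of the pocket rim.
    t_entry = max(t_closest - math.sqrt(pocket_radius**2 - miss**2), 0.0)
    entry = (start[0] + t_entry * dx, start[1] + t_entry * dy)
    return entry, miss <= pot_fraction * pocket_radius
```

When `potted` is False the trajectory only grazes the pocket, so the caller would continue on to the wall collision check instead of ending the trajectory at the pocket.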

Aside from the physics model, I worked on the calibration of our system on startup. I added a method to calibrate the wall detection and pocket positioning using the first 50 frames taken on startup of our system. This is because the wall and pocket locations shouldn’t change much, so we shouldn’t need to re-detect these components on each frame of the video. I also added a small modification to our wall detection model to ensure that the left and right walls are to the left and right of the midpoint of the frame (and similarly that our top and bottom walls are above and below the midpoint of the frame). This fixed inaccurate wall detections that placed walls on the wrong side of the table on some frames.
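One way to combine detections across the startup frames is sketched below. The per-coordinate median is an assumption made for this example (the aggregation method is not specified above), and the function names are hypothetical. The second helper illustrates the midpoint sanity check for the left and right walls.

```python
from statistics import median

CALIBRATION_FRAMES = 50  # number of startup frames used for calibration


def calibrate_points(per_frame_points):
    """Combine per-frame detections of the same fixed points into one estimate.

    `per_frame_points` holds one list per frame, each containing (x, y)
    tuples in a consistent order (for example, the six pockets). The median
    is used here so that a few bad frames do not skew the result.
    """
    n_points = len(per_frame_points[0])
    calibrated = []
    for i in range(n_points):
        xs = [frame[i][0] for frame in per_frame_points]
        ys = [frame[i][1] for frame in per_frame_points]
        calibrated.append((median(xs), median(ys)))
    return calibrated


def is_plausible_vertical_wall(wall_x, frame_width, side):
    """Reject a left/right wall detected on the wrong side of the frame midpoint."""
    mid = frame_width / 2
    return wall_x < mid if side == "left" else wall_x > mid
```

Frames that fail the plausibility check could simply be dropped before aggregation, so they never contribute to the calibrated positions.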

Lastly, I have been working on improving the ball detection to make it more accurate and reduce false positive detections. I am using a color mask to distinguish the balls from the table, which will allow the balls to contrast more with the table and eliminate false positives caused by subtle contour detections on the table. This is still a work in progress that I aim to complete tomorrow (Sunday, March 31st).

In the coming week, I will continue running integration tests to solve any bugs that may arise as we try out more edge cases. I hope to help out with implementing the spin trajectory — a new feature of our system that we have decided to add and began to work on last week. This week I also ordered LED lights, so I hope to install them in the coming week and make sure that the lighting conditions improve our detection models.

Team Status Report for March 23, 2024

This week our team continued to work towards the full integration of our system. We continued conducting tests that would allow us to let our different modules interact with each other. We identified several edge cases that we did not account for in both our object detection models and physics models. This week, we worked towards accounting for these edge cases. Furthermore, we had great advancements in improving the accuracy of our cue stick and wall detection models.

In the computer vision side of our project, our team made significant progress on cue stick detection and wall detection. Andrew spent a lot of time creating a much more accurate cue stick detection model after testing out different detection methods. Although the model was quite accurate in detecting the cue stick’s position and direction, there is an issue where the cue stick is not detected in every frame captured by our camera. This is an issue that Andrew will continue to address in the coming week as it is crucial that the cue stick data is always available for our physics model’s calculations. Tjun Jet was able to detect the walls on the table with much better accuracy using an image masking technique. This advancement is beneficial for the better accuracy of our trajectory predictions.

The most significant risk for this project right now is that we did not successfully produce our output line, which we intended to do this week. Initially, the output line seemed to be working as we tested it on a single image. However, during integration with the video, we realized that there were a lot of edge cases that we did not consider. One factor we didn’t account for was the importance of the direction of the trajectory lines. In our backend, we represent lines using two points on the line; however, some of the functions need to be able to distinguish between the starting and ending point of the trajectory. Hence, this is an additional detail that we will standardize across our systems. Another edge case was ensuring that the tip of the cue stick lies within a certain distance of the cue ball before we project the line. Further, the accuracy of the cue stick trajectory is heavily affected by the kind of grip one uses to hold the stick. There were also other edge cases we had to account for when calculating the ball’s reflection off the walls. Thus, a lot of our code had to be rewritten to account for such edge cases, putting us slightly behind schedule on the physics calculations.

One change that was made this week is that Debrina and Tjun Jet swapped roles. This change came about as Debrina realized that she was not making much progress in improving the accuracy of the computer vision techniques, and Tjun Jet felt that it would be better to have a second pair of eyes on the physics model, as it was non-trivial. Thus, Tjun Jet searched for more accurate methods for wall detection and Debrina helped to find out what was wrong with the physics output line. Another reason for this swap is that integration efforts have begun, so roles now overlap more. We also began working together and meeting more often. As a result, our group members have ended up working together to solve each other’s problems more than before.

Debrina’s Status Report for March 23, 2024

This week I am on schedule, but there are some issues we faced in integration this week that may slow down progress in the coming week. This week our team focused on integration to begin testing the full system – from object detection to physics calculation and trajectory projection. Our testing helped us classify some bugs in our implementation caused by certain cases. These bugs led to some unexpected interruptions and sequences in our backend system. Hence, this week I helped to work on the physics model to add more functionality and to improve some existing implementations to account for edge cases.

In the physics model, I implemented a method to classify the wall that a trajectory line collides with. Furthermore, I fixed some bugs related to division by zero, which would occur whenever a trajectory line was vertical. This case would happen if a user points the cue stick directly upwards or downwards. Next, I tried to fix some bugs related to the wall reflection algorithm since I noticed that it only worked as we expected on specific orientations of the pool cue. I began implementing a few more features to this algorithm so that it would account for walls that are not purely horizontal or vertical. I also modified the return value of this function to return a new trajectory (after the wall collision) instead of a point. This is crucial as this new trajectory is required for the next iteration of our physics calculations. With this new implementation, there are still a few bugs that cause the function to encounter errors when a collision occurs with the top or bottom walls. I will be consulting my teammates to address these errors during our team meeting tomorrow (Sunday, March 24th).
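One way to sidestep both the division-by-zero case and the restriction to axis-aligned walls is to work with direction vectors instead of slopes. The sketch below reflects a direction off a wall given by two endpoints. It assumes a perfectly elastic collision, and the function name `reflect` is hypothetical rather than taken from our backend.

```python
import math


def reflect(direction, wall_start, wall_end):
    """Reflect a 2D direction vector off the wall through two endpoints.

    Uses d' = d - 2 (d . n) n, where n is a unit normal to the wall. No slope
    is ever computed, so vertical trajectories and slanted walls need no
    special cases.
    """
    wx, wy = wall_end[0] - wall_start[0], wall_end[1] - wall_start[1]
    length = math.hypot(wx, wy)
    if length == 0:
        raise ValueError("wall endpoints must be distinct")
    # Unit normal to the wall; either orientation gives the same reflection.
    nx, ny = -wy / length, wx / length
    dot = direction[0] * nx + direction[1] * ny
    return (direction[0] - 2 * dot * nx, direction[1] - 2 * dot * ny)
```

The reflected direction, together with the collision point, defines the new trajectory that feeds the next iteration of the physics calculations.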

The edge cases that we discovered during our preliminary testing led me to realize that we could introduce more standardizations for our backend model. I added a few more objects and specifications to the API that Tjun Jet had designed in prior weeks. In particular, I added a new object called ‘TrajectoryLine’, which will allow us to explicitly differentiate between the starting point and the end point of a trajectory. This is helpful to use in some components of our physics model where the direction of the trajectory is crucial.
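As an illustration of the idea, a directed trajectory object might look like the following. The fields and methods here are assumptions made for this sketch; only the name `TrajectoryLine` and the start/end distinction come from our API.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrajectoryLine:
    """A directed line segment: the ball travels from `start` toward `end`."""

    start: tuple  # (x, y) where the trajectory begins
    end: tuple    # (x, y) where the trajectory ends

    def direction(self):
        """Unit vector pointing from start to end."""
        dx = self.end[0] - self.start[0]
        dy = self.end[1] - self.start[1]
        norm = math.hypot(dx, dy)
        if norm == 0:
            raise ValueError("start and end must be distinct points")
        return (dx / norm, dy / norm)

    def reversed(self):
        """The same segment traversed in the opposite direction."""
        return TrajectoryLine(self.end, self.start)
```

Because the two endpoints are named rather than stored as an unordered pair, a function that needs the direction of travel cannot silently receive the line backwards.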

In the coming week, I will work on modifying parts of our backend that need to be updated to meet the new standardizations. I will continue to help work on the physics model implementation and conduct testing on trajectory outputs to ensure that we have covered all possible cases of orientations of the cue stick and locations of the pool balls. With regards to our project’s object detection model, I hope to improve the ball detections by using different color masks to better filter out noise that may be caused by different lighting conditions.

Team Status Report for March 16, 2024

This week we worked on finalizing our backend code for object detection and physics. We were able to finish our physics model, and can now begin testing its accuracy. This week, we also cleaned up our backend code to ease the integration phase in coming weeks. We created documentation for our APIs and refactored our code to align with our updated API design standards. We also took a few steps towards integrating our backend by combining the physics model and object detection model. In terms of the structure of our system, we were able to mount the projector and camera, and we will make our current setup more sturdy in the following week. With these components in place, we are on track to begin testing our integrated design on a live video of our pool table this coming week.

Aside from making progress on our system, our group spent a decent amount of time preparing for the ethics discussion scheduled for Monday, March 18th. We each completed the individual components and plan to discuss parts 3 and 4 of the assignment tomorrow (Sunday, March 17th).

This week was a busy week for our team, so there were some tasks that we were unable to complete. Although we planned to create our web application this week, we will now spread this task over the next two weeks and work on it in parallel with our testing of the different subsystems. We don’t expect this delay to pose significant risks, as the main component of our project (the trajectory predictions) does not depend on the web application.

We came up with some additional features to implement in our system, and after discussing this in our faculty meeting, we have a better idea of the design requirements. We plan to implement a learning system to give the users recommendations based on their past shots taken. This will require us to detect the point on the cue ball the user hits, as well as the outcome of the shot—whether or not the target ball fell into the pocket. This will require us to implement some additional features in our system, and we plan to complete these in the coming week.

The most significant risk we face now is the potential lack of accuracy of our detection algorithms. As we begin conducting testing and verification, it is possible that there are inaccuracies in our physics model or object detection model that cause our projected trajectory to be inaccurate. In this case, we would need to detect which components are causing the faults and devise strategies to solve these errors. We do have plans in place to mitigate these risks. If there are issues with the accuracy of our object detection models, we may use more advanced computer vision techniques to refine our images. In particular, we may implement OpenCV’s background subtractor tools to better distinguish between the game objects and the pool table’s surface.

Debrina’s Status Report for March 16, 2024

This week I was able to stay on schedule. I created documentation for the object detection models. I updated some of the return and input parameters of the existing object detection models to better follow our new API guidelines. Furthermore, I created a new implementation for detecting the cue ball and distinguishing it from the other balls. Previously, cue ball detection depended on the cue stick’s position, but we decided to change it to be dependent on the colors of the balls. To do this, I filtered the image to emphasize the white colors in the frame, then performed a Hough transform to detect circles in the image. Since this method of detecting the cue ball worked quite well on our test images, in the coming week I plan to try implementing a similar method to detect the other balls on the table in the hopes of being able to classify the number and color of each ball.

In terms of the physical structure of our project, I worked with my team to set up a mount for the projector and camera and perform some calibration to ensure that the projection could span the entire surface of our pool table setup. Aside from the technical components of our project, I spent a decent amount of time working on the individual ethics assignment that was due this week.

In the next week I hope to continue doing some integration among the backend subsystems and conduct testing. It is likely that the object detection models will need recalibration and potential modifications to better accommodate the image conditions of our setup, so I will spend time in the coming week working on this. Furthermore, I plan to create an automated calibration step in our backend. This calibration step would detect the bounds of the walls of our pool table setup, the locations of the pockets, and tweak parameters based on the lighting conditions. This calibration function would allow us to more efficiently recalibrate our system when we start it up in order to yield more accurate detections.