Related ECE Courses

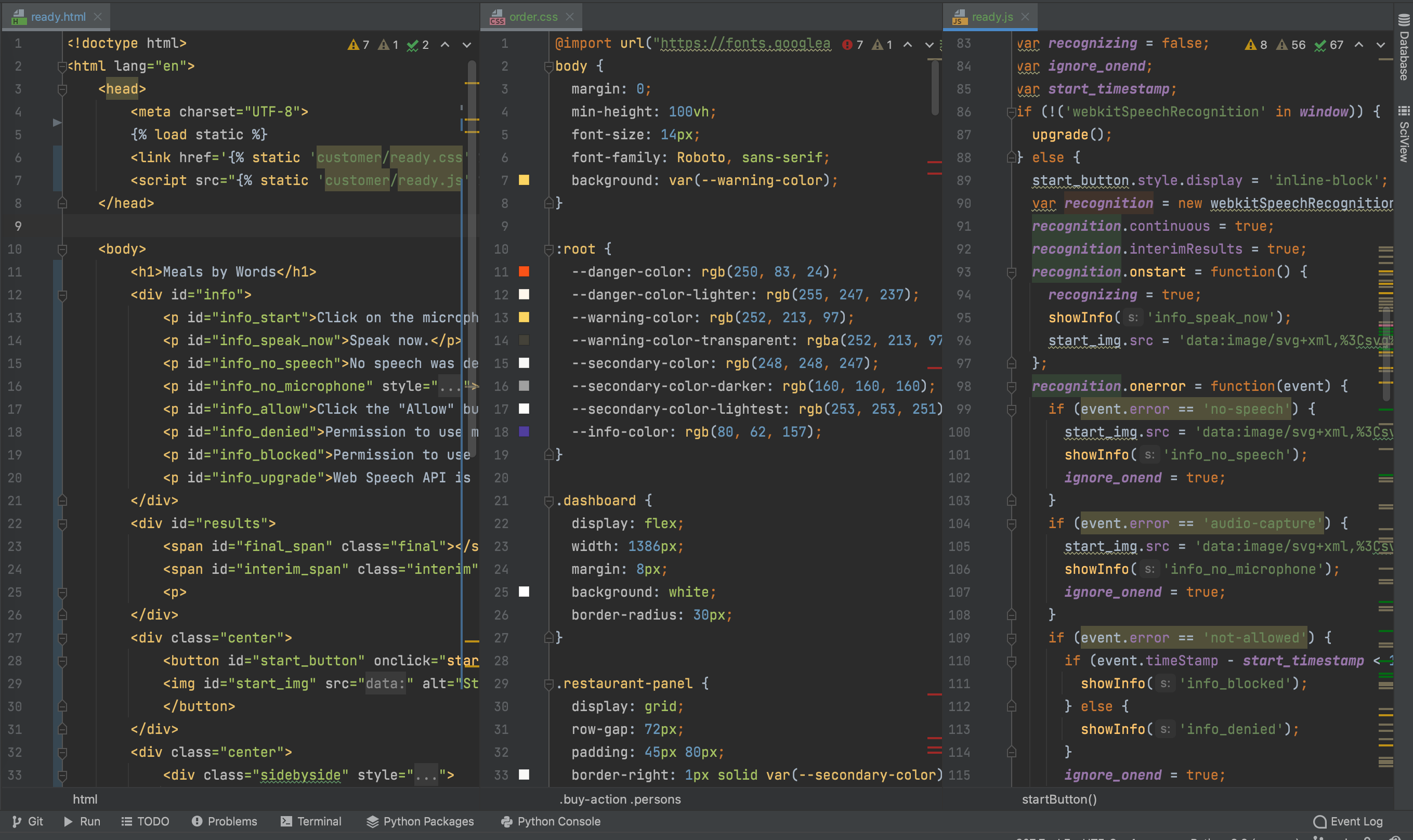

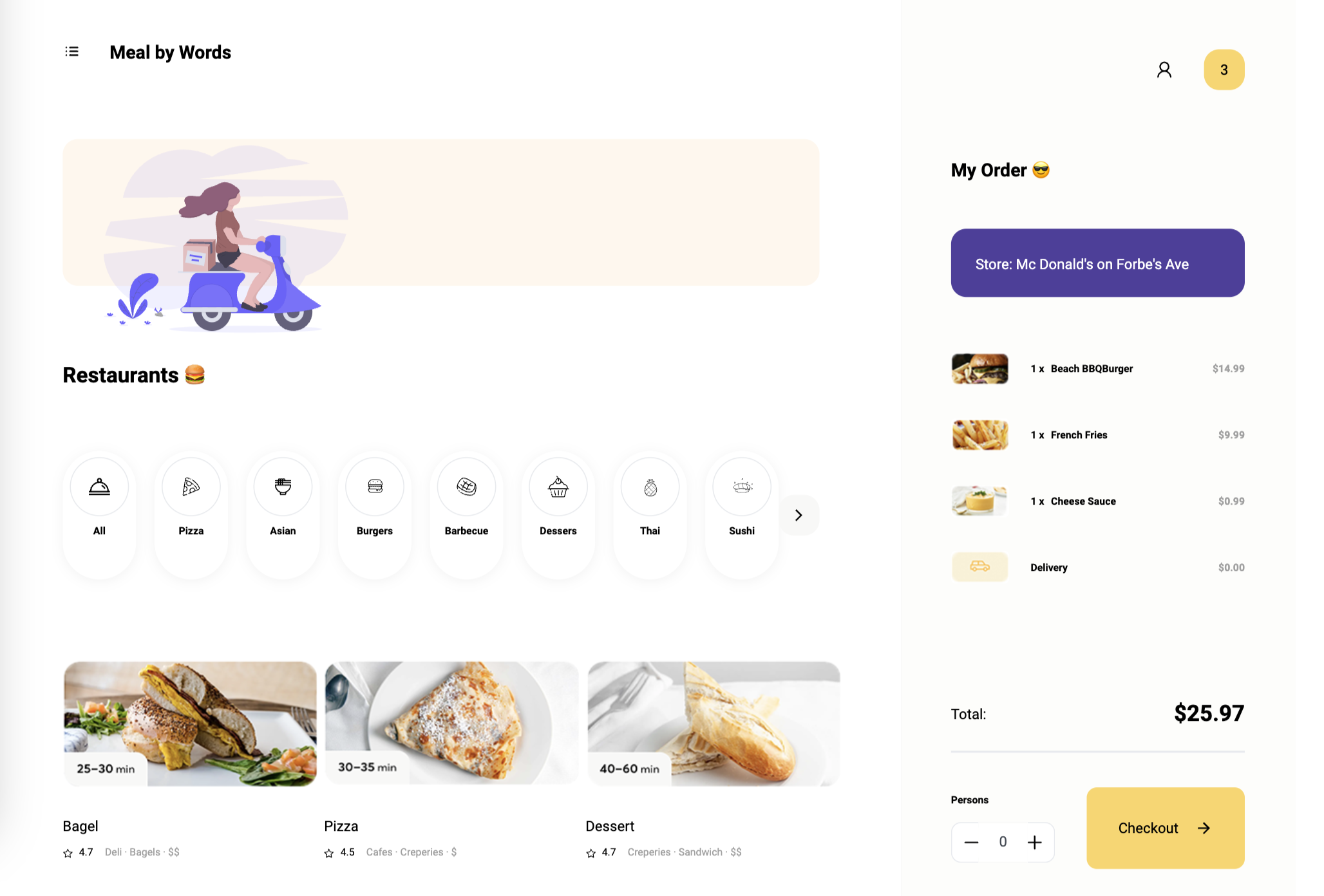

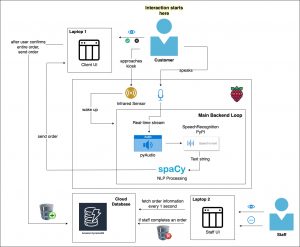

I learned about modularity and ethics in 15-440 Distributed Systems, 17-214 Principles of Software Construction, and 17-437 Web Application Development. For example, when designing a distributed system of clients, proxies, and servers, the modularity principle calls for dividing the system into separate, independent components or modules. For our project, we have divided the system into speech recognition (backend), motion detection (backend), database (backend), user interfaces (frontend), and hardware components such as microphones and infrared sensors. Each person on our team is responsible for one or more of these modules.
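As a rough illustration of this split, the sketch below puts the motion detection module behind a small interface so that the rest of the system can be developed and tested without the hardware. The names `MotionSource`, `FakeMotionSource`, and `Kiosk` are hypothetical and are not taken from our codebase.

```python
from typing import Callable, List, Protocol


class MotionSource(Protocol):
    """Anything that can report whether a customer is currently detected."""

    def motion_detected(self) -> bool: ...


class FakeMotionSource:
    """Stand-in for the infrared sensor module (hypothetical, for testing)."""

    def __init__(self, readings: List[bool]) -> None:
        self._readings = list(readings)

    def motion_detected(self) -> bool:
        # Return the next scripted reading; report no motion once exhausted.
        return self._readings.pop(0) if self._readings else False


class Kiosk:
    """Hypothetical coordinator that depends only on the MotionSource interface."""

    def __init__(self, sensor: MotionSource, on_customer: Callable[[], None]) -> None:
        self._sensor = sensor
        self._on_customer = on_customer

    def poll(self) -> bool:
        # Ask the sensor module once and notify the rest of the system if needed.
        detected = self._sensor.motion_detected()
        if detected:
            self._on_customer()
        return detected
```

Because `Kiosk` only relies on `motion_detected()`, the real sensor module could later replace `FakeMotionSource` without changes to the other modules.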

Personal Accomplishments

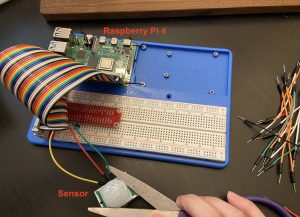

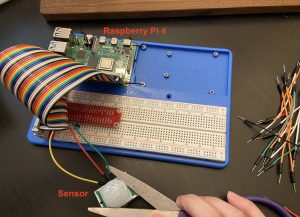

One of the tasks assigned to me was to make the infrared sensor work with the rest of the system. The sensor should signal the Raspberry Pi when a customer is 0.3–1 m from the kiosk.

HC-SR501 PIR sensor (front & back)

After doing some research, I made a list of hardware components I needed:

- 1 x HC-SR501 PIR sensor
- 1 x 830-point solderless breadboard
- 1 x Raspberry Pi holder compatible with Raspberry Pi 4B
- 1 x T-shape GPIO Extension Board
- 1 x 20 cm 40-pin Flat Ribbon Cable
- 5 x HC-SR501 Motion PIR sensor
- Resistors
- Jumper wires

I ordered them online, and they arrived this past week. I brought the Raspberry Pi home with me from the class inventory and spent Friday assembling them.

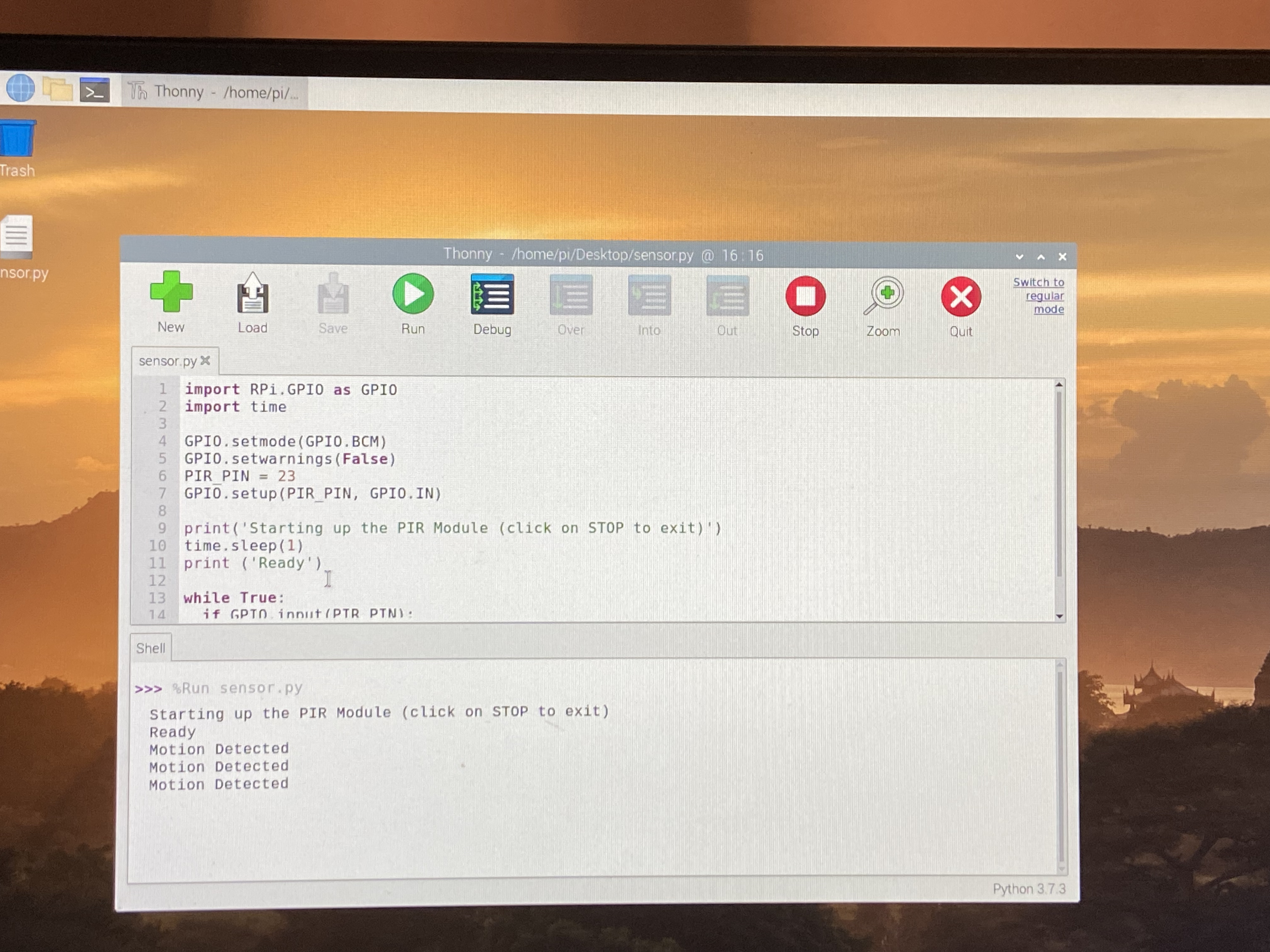

The next step was to write a Python script that would let us see when motion is detected. I downloaded the Thonny IDE and used it to write the code because of its plain, minimal interface.

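    import time

    import RPi.GPIO as GPIO

    GPIO.setmode(GPIO.BCM)  # use Broadcom (BCM) pin numbering
    GPIO.setwarnings(False)

    PIR_PIN = 23  # BCM pin that reads the sensor's output
    GPIO.setup(PIR_PIN, GPIO.IN)

    print('Starting up the PIR Module (click on STOP to exit)')
    time.sleep(1)  # let the sensor settle before reading it
    print('Ready')

    while True:
        if GPIO.input(PIR_PIN):
            print('Motion Detected')
        time.sleep(2)  # pause each iteration to avoid repeated detections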

It prints “Motion Detected” when the sensor detects movement (subject to the sensor's detection conditions). Along the way I found a couple of bugs and fixed them by:

- Adding a 1-second sleep so the sensor can settle before entering the infinite loop.

- Adding a 2-second sleep in each iteration to avoid reporting the same motion multiple times.
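The fixed 2-second sleep also means the pin is only read once every two seconds. One possible alternative, sketched here and not what the script currently does, is to poll more often and suppress repeat detections with a cooldown. The function `detections` and its parameters are hypothetical; the pin reader, clock, and sleep function are passed in so the logic can be tried without the hardware.

```python
import time
from typing import Callable, Iterator, Optional


def detections(
    read_pin: Callable[[], bool],
    cooldown_s: float = 2.0,
    poll_s: float = 0.1,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
    max_polls: Optional[int] = None,
) -> Iterator[float]:
    """Yield a timestamp per detection, at most one per cooldown window."""
    last: Optional[float] = None
    polls = 0
    while max_polls is None or polls < max_polls:
        polls += 1
        now = clock()
        # Report motion only if the cooldown since the last report has elapsed.
        if read_pin() and (last is None or now - last >= cooldown_s):
            last = now
            yield now
        sleep(poll_s)
```

On the Raspberry Pi this could be driven with `for t in detections(lambda: bool(GPIO.input(PIR_PIN))): print('Motion Detected')`, assuming the same `GPIO` setup as in the script.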

After running this code, the shell should look like:

On schedule?

Yes, my progress is on schedule.

Deliverables for next week

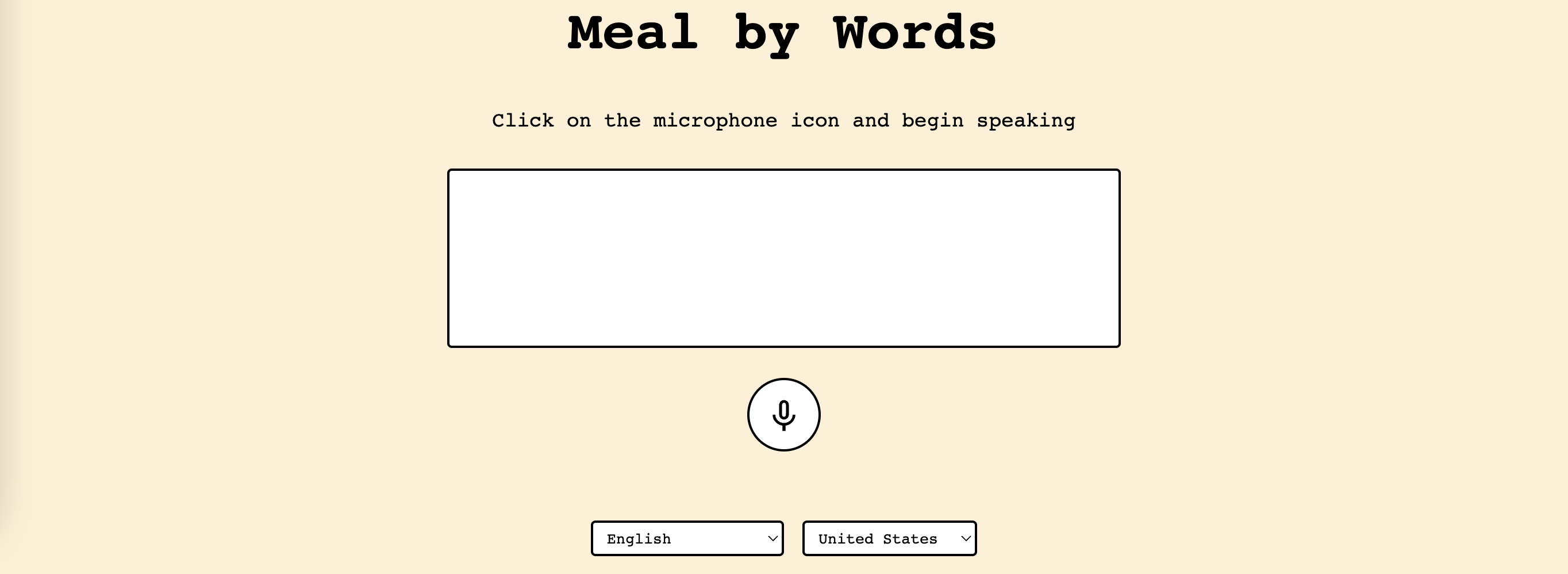

It doesn’t make sense to add more code for the sensor until the backend is further along (it is currently at the design stage). I will therefore move on to the frontend (customer UI and staff UI). I will discuss with Lisa, who is responsible for speech recognition, what to display on the web pages, since this partly depends on how, and how quickly, speech is parsed.