Progress Updates:

- Met with the yoga instructor we are working with to collect ideal poses and learn about typical errors and the metrics used to correct yoga poses

- Collected reference pose images for all 6 poses we want our system to support

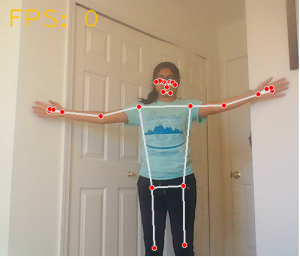

- Currently analyzing the poses to determine which nodes (joints) and which angles should be used for pose comparison. We are also computing the pose detection values for the reference images once and storing them so they do not need to be recomputed each time.

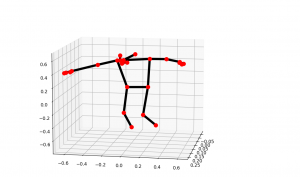

- The yoga expert helped us understand additional error signals used in in-person instruction that should be present in our pose correction system, the most notable being alignment along axes of a person’s pose (for example, the arms and shoulders should be collinear)

- Implemented a library for the Pose Correction component to compute:

- Euclidean angles given 3 joints on reference pose

- Distance between 2 joints (to check if they are in contact)

- Whether all the required joints are visible (to make sure they are in frame and that no joint is hidden behind another)

- Developed our design review presentation:

- Fleshed out our system’s design

- Better understood how to implement sub-systems

- Created slides and practiced the presentation
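The three checks listed above for the Pose Correction library (joint angle, joint distance, joint visibility) could be sketched roughly as follows. This is a minimal illustration, not our actual library: the `Joint` class, the function names, and the threshold values are all hypothetical, and the `visibility` field is modeled loosely on the per-landmark visibility score that BlazePose-style detectors report.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Joint:
    """One detected landmark. Field names are illustrative assumptions."""
    x: float
    y: float
    z: float = 0.0
    visibility: float = 1.0  # assumed to lie in [0, 1]


def joint_angle(a: Joint, b: Joint, c: Joint) -> float:
    """Angle in degrees at joint b between the segments b->a and b->c."""
    v1 = (a.x - b.x, a.y - b.y, a.z - b.z)
    v2 = (c.x - b.x, c.y - b.y, c.z - b.z)
    n1 = math.sqrt(sum(p * p for p in v1))
    n2 = math.sqrt(sum(p * p for p in v2))
    if n1 == 0 or n2 == 0:
        raise ValueError("angle is undefined when two joints coincide")
    cos_theta = sum(p * q for p, q in zip(v1, v2)) / (n1 * n2)
    # Clamp to guard against floating-point drift just outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


def joint_distance(a: Joint, b: Joint) -> float:
    """Straight-line distance between two joints."""
    return math.dist((a.x, a.y, a.z), (b.x, b.y, b.z))


def joints_in_contact(a: Joint, b: Joint, threshold: float = 0.05) -> bool:
    """Treat two joints as touching when closer than a placeholder threshold."""
    return joint_distance(a, b) <= threshold


def all_visible(joints, min_visibility: float = 0.5) -> bool:
    """True only if every required joint meets the placeholder visibility cutoff."""
    return all(j.visibility >= min_visibility for j in joints)
```

An angle close to 180 degrees at the middle joint is one simple way to express the collinearity check the yoga expert described, for example `joint_angle(wrist, shoulder, other_wrist)` for arms held out in a straight line.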

Our Major Risks so far include:

- Monitor and hardware acquisition: We are hoping to acquire 2 monitors to construct a full-length mirror, but are not sure how to acquire them at a reasonable price and integrate them with the two-way mirror.

- Contingency plan is to have one smaller monitor that acts like a mirror and have the user stand further away so their entire image can still be seen.

- Mirror UI: As mentioned in our presentation, another major risk is how to display our Pose Correction results on the mirror itself and whether any further mapping may be required. We have spent some time working through the physics, so it seems more plausible now, but it remains a risk until we acquire the hardware.

- Contingency plan is to simply render the camera feed on the monitor
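For the mirror-mapping question above, the basic plane-mirror geometry can be sketched as below. This is only an idealized illustration under assumptions we have not validated on hardware: a flat, vertical mirror, the display plane coinciding with the mirror surface, and all heights measured from the floor in the same units. The function name and parameters are hypothetical.

```python
def reflection_height_on_mirror(eye_height: float, eye_dist: float,
                                point_height: float, point_dist: float) -> float:
    """Height on the mirror surface where the viewer sees a body point's reflection.

    The virtual image of the point sits point_dist behind the mirror at the same
    height, so the sight line from the eye to that image crosses the mirror plane
    a fraction eye_dist / (eye_dist + point_dist) of the way along.
    """
    if eye_dist <= 0 or point_dist < 0:
        raise ValueError("eye_dist must be positive and point_dist non-negative")
    t = eye_dist / (eye_dist + point_dist)
    return eye_height + (point_height - eye_height) * t


# Example with made-up numbers: eyes at 1.6 m, user standing 2 m from the mirror.
feet_on_mirror = reflection_height_on_mirror(1.6, 2.0, 0.0, 2.0)
head_on_mirror = reflection_height_on_mirror(1.6, 2.0, 1.7, 2.0)
```

Under these assumptions, when the eyes and the body point are the same distance from the mirror, the reflection appears at the midpoint of the eye height and the point height, so a full-body reflection spans roughly half the user's height on the mirror surface.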

Guiding Engineering/Scientific Principles For Our Design

- Machine Learning/Computer Vision:

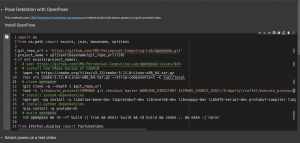

- Core components of our project, such as pose estimation, rely on computer vision ML models. The model we’ve selected is BlazePose, a convolutional neural network (CNN) optimized for real-time, on-device inference

- Computer Graphics:

- Need to use efficient and lightweight computer graphics libraries/methods in order to render pose corrective suggestions in real-time in a visually understandable way

- Software engineering:

- Working with several Python modules and writing a custom pose comparison library

- Geometry:

- Pose comparison is based on angles and distances, which are rooted in 2D and 3D geometry

- Physics of light and mirrors:

- Using the behavior of light and principles of reflection to understand where a person’s reflection will appear on the mirror

- Firmware and hardware-software interface:

- Integrating camera input with the computer

- Working with speakers for audio output

- Human-Computer Interaction (HCI):

- HCI is an additional guiding principle, to make sure that the final integrated system is easily usable and accessible for users.

- Ensure that the UI and corrective feedback are delivered in a way that is understandable and doesn’t compromise the yoga learning experience (for example, delivering audio cues so the user does not have to physically interact with the screen)

Timeline Update:

For our software algorithm, we are on track. However, we were supposed to buy the hardware last week. We spoke to the TAs about using monitors that are already present in the lab and are waiting on that information to go ahead with the purchase of materials. We hope to make that purchase this week, which will push back our hardware timeline by a week.

Next Steps:

- Complete error comparison this week

- Order hardware and begin assembly

- Complete design presentation