Progress Updates:

- Scheduled a meeting with the yoga instructor to get pictures of the ideal poses. We also prepared a list of questions to ask, including which errors people typically make and which areas to focus on for each pose.

- The meeting is scheduled for Monday

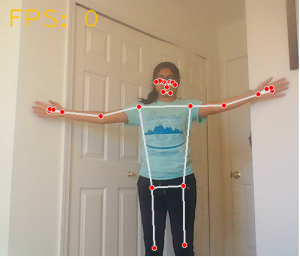

- Worked on the pose detection algorithm using BlazePose through MediaPipe’s pose detection solution

- Spent a lot of time understanding the various outputs of the MediaPipe pose library

- Created a detailed plan of all the information we need to extract from the poses for the pose correction aspect

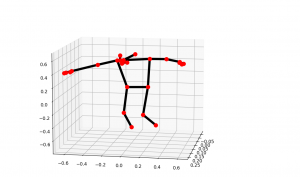

- Built 3D renderings of the poses to get a better spatial understanding of them
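As an illustration of the kind of information that could be extracted from the detected poses, the sketch below computes the angle at a joint from three 3D landmark positions. This is an assumption about what the extraction plan might include, not the plan itself; the function name `joint_angle` and the sample coordinates are hypothetical, and the points are plain `(x, y, z)` tuples rather than actual MediaPipe output objects.

```python
import math

def joint_angle(a, b, c):
    """Return the angle in degrees at point b formed by the segments b->a and b->c.

    Each point is an (x, y, z) tuple, e.g. shoulder, elbow, wrist.
    """
    ba = [a[i] - b[i] for i in range(3)]
    bc = [c[i] - b[i] for i in range(3)]
    dot = sum(p * q for p, q in zip(ba, bc))
    norm = math.sqrt(sum(p * p for p in ba)) * math.sqrt(sum(q * q for q in bc))
    if norm == 0:
        raise ValueError("two of the landmarks coincide, angle is undefined")
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cosine))

# Hypothetical landmark positions for a right-angle elbow bend.
shoulder = (0.0, 1.0, 0.0)
elbow = (0.0, 0.0, 0.0)
wrist = (1.0, 0.0, 0.0)
elbow_angle = joint_angle(shoulder, elbow, wrist)
print(f"elbow angle: {elbow_angle:.1f} degrees")
```

Angles like this do not depend on where the person stands in the frame, which is one reason they may be easier to compare against a reference pose than raw coordinates.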

Our Major Risks so far include:

- The Pose Correction algorithm may not reach the accuracy we outlined in our requirements section (>90%)

- Contingency plan is to report an overall pose accuracy score rather than detecting errors at each node or limb

- Monitor and hardware acquisition: We are hoping to acquire 2 monitors to construct a full-length mirror, but are not sure how to acquire them at a reasonable price or how to integrate them with the two-way mirror.

- Contingency plan is to have one smaller monitor that acts like a mirror and have the user stand further away so their entire image can still be seen.

- Mirror UI: As mentioned in our presentation, another huge risk is how to display our Pose Correction results on the mirror itself and whether any further mapping may be required.

- Contingency plan is to simply render the camera feed on the monitor
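To make the first contingency above concrete, here is a minimal sketch of the difference between per-joint error detection and a single overall pose accuracy. It assumes poses are summarized as joint angles in degrees; the joint names, the reference values, and the 15-degree tolerance are all hypothetical placeholders, not values from our requirements.

```python
def joint_errors(measured, reference):
    """Per-joint absolute angle error in degrees (the fine-grained approach)."""
    return {joint: abs(measured[joint] - reference[joint]) for joint in reference}

def overall_accuracy(measured, reference, tolerance_deg=15.0):
    """Fraction of joints within tolerance of the reference (the fallback approach)."""
    errors = joint_errors(measured, reference)
    within = sum(1 for error in errors.values() if error <= tolerance_deg)
    return within / len(errors)

# Hypothetical reference pose and one user attempt, as joint angles in degrees.
reference_pose = {"left_elbow": 180.0, "right_elbow": 180.0,
                  "left_knee": 90.0, "right_knee": 175.0}
user_pose = {"left_elbow": 170.0, "right_elbow": 150.0,
             "left_knee": 95.0, "right_knee": 170.0}

errors = joint_errors(user_pose, reference_pose)
score = overall_accuracy(user_pose, reference_pose)
print(errors)
print(f"overall accuracy: {score:.2f}")
```

The per-joint dictionary tells the user which limb to adjust, while the single score only says how close the pose is overall, which is why the latter is the fallback rather than the goal.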

Design Updates include:

- Based on our Proposal Presentation feedback, we rescoped our project by:

- Removing hand gesture input and profile features to focus on creating a robust and reliable Pose Detection and Correction algorithm

- Testing the algorithm on a computer for now and removing the Raspberry Pi integration

- Solidified the workflow of the project and further divided out tasks

Ethical and Non-Engineering Considerations:

- Device must be inclusive of all body types, shapes and sizes and provide the same objective user experience to all people irrespective of these physical differences

- PosePal should not collect or store personally identifiable information to protect user data and establish user trust in using the system solely for their own personal fitness

- Corrective suggestions should be delivered in an encouraging way that enhances the learning experience, with positive feedback given by the device to effectively support users

- As we hope users can rely on PosePal to learn yoga, we need to ensure that the corrections given are actually correct and match what a human yoga instructor would give, to avoid misleading users as they learn different poses

Next Steps:

- Build a pose correction library based on the discussed plan from this week

- Complete meeting with yoga instructor to get reference yoga pose models and validate our methodology of error detection and correction (i.e. do the signals we look for to correct poses match what the instructor would look for in an in-person class?)