This past week I spent some time finalizing my prep for the final presentation, then I moved to focus on working on our poster and planning testing. I picked up our new mics, which we were able to test out on Friday. The new mics take noticeably better recordings than our UMA-8 array.

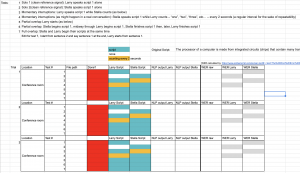

Before the Friday testing, I wrote up the tests that we should perform, how many times we should perform them, and created a spreadsheet to keep track of our tests and results so that we could get through data collection and analysis more efficiently. I decided on 3 trials per test, as this is a standard minimum number of trials for an experiment.

In our last meeting, Dr. Sullivan gave us some advice as to how to test our system more effectively, and I incorporated that advice into the new testing plan. For one, Larry and I used the same script as each other for this round of testing. In test 7, where we spoke at the same time, I started on sentence 2 and spoke sentence 1 at the end, and Larry started on sentence 1. Initially we tried simply speaking the same script starting from the top, but Larry and I were speaking each word at the same time, so the resulting word error rates would not have been a good measure of our system’s ability to separate different speakers.

Another testing suggestion I added for this round was having a reversed version of each test, so that we could tell if there was any difference between word error rate for my voice (higher pitched) vs. Larry’s voice (lower pitched).

One suggestion that we have not yet used is testing in a wider open space, such as an auditorium or concert hall. For the sake of time (setup and data collection took 3-4hrs), we decided to only get conference room data for now.

We are currently on schedule.

On Sunday, I will complete the poster. Next week, I will generate the appropriate audio files and calculate WER for the following sets of processing steps:

0. Just deep learning (WERs already computed by Charlie)

1. SSF then deep learning, to see if SSF reverb reduction improves the performance of the deep learning algorithm

2. SSF then PDCW, to see if this signal processing approach works well enough for us now that we have better mics