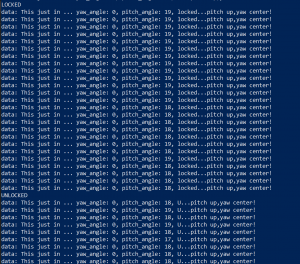

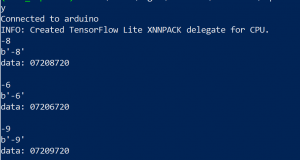

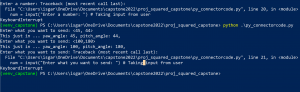

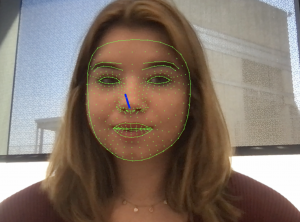

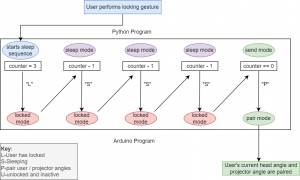

Earlier this week, before Wednesday, I completed the full new version of the calibration state machine and tested it to make sure the stages were switching in the correct order. I also wrote a Python test suite for all the mathematical functions I use in the Arduino code. Printing debugging output is much easier and faster in Python, and debugging trig and geometric conversion code can be a headache at the best of times, so I needed as much feedback as possible. On Wednesday during our practice demo, I noticed that the locking mechanism happens a bit too fast for a user to properly interact with it, so I designed and implemented a mechanism within the calibration phases that adds a delay between the user locking the projector and the program actually pairing the user and projector angles with each other. I made a diagram of this process:

Now the “L” still stands for lock and stops the motors, the “S” means the Python program is ‘sleeping’, and the “P” signals the Arduino program to pair the angles. I did this in stages to leave room for a possible addition to the Python program that counts down the seconds until pairing, so the user knows when they should look at the projector.
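To make the staging concrete, here is a minimal Python sketch of the lock, sleep, pair sequence with the possible countdown included. It is not the actual program: the function name `lock_then_pair`, the `send`, `sleep`, and `notify` parameters, and the 3-second default delay are all assumptions for illustration. It also assumes that only “L” and “P” are sent to the Arduino, while “S” is a state that exists only on the Python side.

```python
import time
from typing import Callable, List


def lock_then_pair(
    send: Callable[[str], None],
    delay_s: int = 3,
    sleep: Callable[[float], None] = time.sleep,
    notify: Callable[[str], None] = print,
) -> List[str]:
    """Run the staged sequence and return the stages visited, in order.

    send    -- hypothetical callable that delivers one command character
               to the Arduino (for example, a wrapper around a serial write)
    delay_s -- assumed whole-second delay between locking and pairing
    sleep   -- injectable so tests can skip the real wait
    notify  -- injectable so the countdown can go to a UI instead of stdout
    """
    stages = []

    # "L": lock, which stops the motors.
    send("L")
    stages.append("L")

    # "S": the Python side sleeps, counting down so the user knows
    # when to look at the projector. Nothing is sent during this stage
    # (an assumption of this sketch).
    stages.append("S")
    for remaining in range(delay_s, 0, -1):
        notify(f"Pairing in {remaining}...")
        sleep(1)

    # "P": tell the Arduino to pair the user and projector angles.
    send("P")
    stages.append("P")
    return stages
```

If the link to the Arduino is a pyserial connection named `ser` (also an assumption), `send` could be `lambda c: ser.write(c.encode())`. Keeping `send` and `sleep` as parameters means the sequence can be checked on a laptop without the hardware attached.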

Next week, I need to explain to my teammates how to set up the physical system and run the calibration program. I can’t leave my home until Friday because I’ve got COVID; I’ve been pretty sick but have been slowly recovering. The good news is that the bulk of this is built and debugged, so we just need to get the physical setup going now. Our goal is to get this ready by the Wednesday demo, and I will support this remotely in whatever way I can.

I’m still on schedule for everything except the lidar. Since I had to isolate this weekend, I couldn’t go into the lab and make the lidar circuit. I’ll have to do this next weekend.