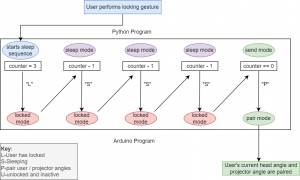

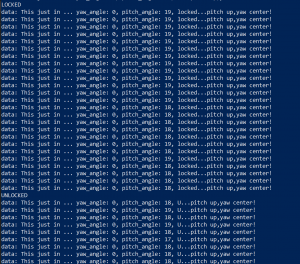

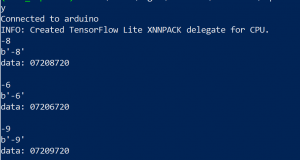

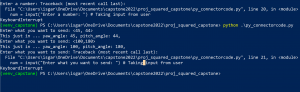

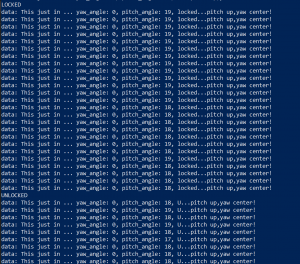

In addition to redrawing the state machine for the calibration (shown Wednesday), I’ve been refining the linear motor movement program. Rama has been sharing her interface notes and the functions she wrote for motor movement, and I’ve been incorporating those into the Arduino state machine so the motor moves vertically as well as horizontally. I’ve also fixed the issue of the motor not stopping while the user turns their head from one side to the other: there is now a ‘center’ signal between ‘left’ and ‘right’, which sends a stop signal. Finally, I’ve integrated Olivia’s code for the locking signal and tested that it makes it over to the Arduino and registers correctly (‘locked’ means locked, and ‘U’ means unlocked).
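The ‘center’ fix described above can be sketched roughly as follows. This is only an illustration, not our actual code: the command bytes, the 10-degree dead zone, the sign convention (negative yaw means left), and the function names are all assumptions made for the example.

```python
# Hypothetical command bytes; the real serial protocol may differ.
LEFT, RIGHT, STOP = b"L", b"R", b"S"
COMMANDS = {"left": LEFT, "right": RIGHT, "center": STOP}


def classify_yaw(yaw_deg, dead_zone_deg=10.0):
    """Map a head yaw angle to 'left', 'center', or 'right'.

    Assumes negative yaw means the head is turned left. Anything inside
    the dead zone counts as 'center'.
    """
    if yaw_deg < -dead_zone_deg:
        return "left"
    if yaw_deg > dead_zone_deg:
        return "right"
    return "center"


def commands_for(yaw_samples, dead_zone_deg=10.0):
    """Return the commands to send, emitting one only when the state changes."""
    sent = []
    previous = None
    for yaw in yaw_samples:
        state = classify_yaw(yaw, dead_zone_deg)
        if state != previous:
            # Passing through 'center' always produces a stop command,
            # so the motor halts between a left turn and a right turn.
            sent.append(COMMANDS[state])
            previous = state
    return sent


print(commands_for([-20, -15, 0, 2, 25, 30, 0]))
```

Because a head turn from left to right always passes through the dead zone, the motor receives a stop before it receives the opposite direction.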

Olivia and I have been considering how to improve the accuracy of our locking gesture. Today I had a friend with thick glasses test the program, and it had trouble detecting the three blinks. We might consider a hand gesture or head nod as an alternative.

Over the next week I will continue testing each stage of the calibration process and recording refinement notes (which I am tracking in a document), so we can take them into account when we do end-to-end user testing after this next week. Some examples of these notes are:

- Send floats over the serial port rather than ints

- Consider the thresholding for the first head movement phase

- Decrease the threshold for blink detection

And so on. My goal is to finish the testing by the end of the week, and hopefully clean up the code a little so it’ll be more readable to my teammates.
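For the first note above (floats over the serial port rather than ints), one possible approach on the computer side is to pack each value into four bytes before writing it. The sketch below is an assumption about how this could be done, not what we have implemented; the function names are made up for the example, and it assumes the Arduino reads four little-endian bytes into a 32-bit float.

```python
import struct


def encode_float(value):
    """Pack a float as 4 little-endian bytes (IEEE 754 single precision)."""
    return struct.pack("<f", value)


def decode_float(payload):
    """Unpack 4 little-endian bytes back into a Python float."""
    return struct.unpack("<f", payload)[0]


payload = encode_float(12.5)
print(len(payload), decode_float(payload))
```

The resulting bytes could then be written to the serial port in place of the current int. Values that are not exactly representable in single precision will come back slightly rounded.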

Currently I’m on schedule for the calibration, although the testing and refinement phases have been merging in with each other more than expected. The lidar just arrived, and my plan as of now is to integrate it next weekend after I can walk my teammates through the current calibration code. This is mainly so any roadblocks with the lidar don’t jeopardize the demo, since we’ll do all the preparation for that this week.