Our final report has been posted here: http://course.ece.cmu.edu/~ece500/projects/s22-teamc6/final-report/. Thanks for a wonderful semester!

Isabel’s Status Report for 4/30

This week, we finished our initial user studies on Sunday, then completed a more informal user study today to fine-tune our system. Since I was the designated presenter this time, I mainly focused on preparing for the presentation. I worked on the slides and made some small code updates based on improvements we came up with during testing: reducing the jitter in our system, refining the calibration process, and cleaning up the code. Overall, this week was a smaller update than the previous weeks.

We’re still on schedule and it looks like things will be wrapping up without any trouble next week. Next week I will help with planning and editing the video, putting together and refining our final report, and preparing for our demo!

Isabel’s Status Report for 4/23

This week, I’ve mainly been working with the entire team on debugging and improving the overall system. On Monday, I tested three different software serial libraries for reading the lidar values. I ended up going with SoftwareSerial, even though it blocks, because it was the most consistent at returning verified data from the lidar. To keep the blocking reads from stalling the rest of the program, I confined the distance detection to the Arduino’s setup code.
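As a rough illustration of measuring the distance once at startup, here is a minimal Python sketch (on the Arduino, the same logic would live in `setup()`). `read_distance` is a hypothetical stand-in for one lidar read, and the retry count and fallback distance are assumed values; the fallback mirrors the earlier plan of manually setting the wall distance.

```python
from typing import Callable, Optional

# Assumed fallback (cm) if the lidar never produces a valid reading.
DEFAULT_WALL_DISTANCE_CM = 300


def measure_wall_distance(read_distance: Callable[[], Optional[int]],
                          max_attempts: int = 50,
                          default: int = DEFAULT_WALL_DISTANCE_CM) -> int:
    """Return the first valid lidar distance (cm), or `default` if none arrives.

    `read_distance` is a hypothetical callable that returns a distance in cm,
    or None when a frame is missing or fails its checksum.
    """
    for _ in range(max_attempts):
        distance = read_distance()
        if distance is not None and distance > 0:
            return distance
    # Bounded retries: startup never hangs waiting on the lidar.
    return default


# Simulated lidar: two bad frames, then a good one.
readings = iter([None, None, 412, 415])
print(measure_wall_distance(lambda: next(readings)))  # prints 412
```

Capping the attempts keeps a missing or miswired lidar from blocking the rest of the program forever.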

After Monday, the two biggest changes I implemented were smoothing the horizontal movement of the projector and optimizing the pipeline. The projector now moves noticeably more smoothly and quickly, which is good, because we have been finding lots of new bugs to work through during the user tests.
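One common way to smooth jittery movement is an exponential moving average over the incoming angles. The report doesn’t say which smoothing technique was used, so this is only a minimal sketch of that general technique, with `alpha` as an assumed tuning constant:

```python
from typing import Optional


class AngleSmoother:
    """Exponential moving average over incoming yaw angles (degrees).

    `alpha` is a hypothetical tuning constant: smaller values smooth more
    but react more slowly to real head movement.
    """

    def __init__(self, alpha: float = 0.3):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.value: Optional[float] = None  # no estimate before the first sample

    def update(self, sample: float) -> float:
        if self.value is None:
            self.value = sample
        else:
            # Blend the new sample with the running estimate.
            self.value = self.alpha * sample + (1 - self.alpha) * self.value
        return self.value


smoother = AngleSmoother(alpha=0.5)
for raw in [10.0, 30.0, 10.0]:
    print(smoother.update(raw))  # 10.0, then 20.0, then 15.0
```

A single jumpy reading (30.0 here) only moves the output partway, which is what takes the jitter out of the motor commands.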

With the lidar integrated, I am now back on schedule. We anticipated that the whole team would spend the last few weeks on refinement, so everything I’ve been doing falls into that category.

For this next week, I need to redo the block diagrams for our system, prepare for the presentation on Monday, and smooth the vertical movement of the projector. I also hope to make another small redesign of the calibration program to make it much easier on the user. From our tests, we’ve noticed that it can be difficult for users to move the projector to two different angles and then look at each one correctly. Our calibration code is picky and very sensitive to error, so I want to make it as robust as possible before the demo.

Team Status Report for 4/16

This week we had an unfortunate incident where the battery overheated and fried our vertical motor. We had to rush-order a replacement, and we’re planning to get it set up as well as we can in time for our user study in the second half of this week. If something goes wrong with the replacement, our contingency plan is to use only the horizontal motor and set up our apparatus so that the projector is in line with an average user’s height.

We aren’t going to make any large changes to the system at this point; we’ve just been coming up with ways to make our program more robust (e.g., averaging the user’s angle during the pairing process to soften the effect of a single bad reading). As a team, we are planning to recruit some people to test our system in a user study as soon as we can (hopefully) get the new vertical motor set up.
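A minimal sketch of that kind of averaging, assuming the pairing step collects a short list of yaw samples in degrees. Unlike a plain mean, this variant first drops samples far from the median, so one bad reading barely moves the result; `robust_average` and `max_deviation` are hypothetical names and values.

```python
from statistics import mean, median_low


def robust_average(angles, max_deviation=5.0):
    """Mean of angle samples (degrees), ignoring samples far from the median.

    `max_deviation` is a hypothetical threshold in degrees.
    """
    if not angles:
        raise ValueError("need at least one sample")
    # median_low always returns an actual sample, so `kept` is never empty.
    center = median_low(angles)
    kept = [a for a in angles if abs(a - center) <= max_deviation]
    return mean(kept)


samples = [20.0, 21.0, 19.0, 60.0]  # one bad reading at 60.0
print(mean(samples))            # 30.0 with a plain average
print(robust_average(samples))  # 20.0 with the outlier ignored
```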

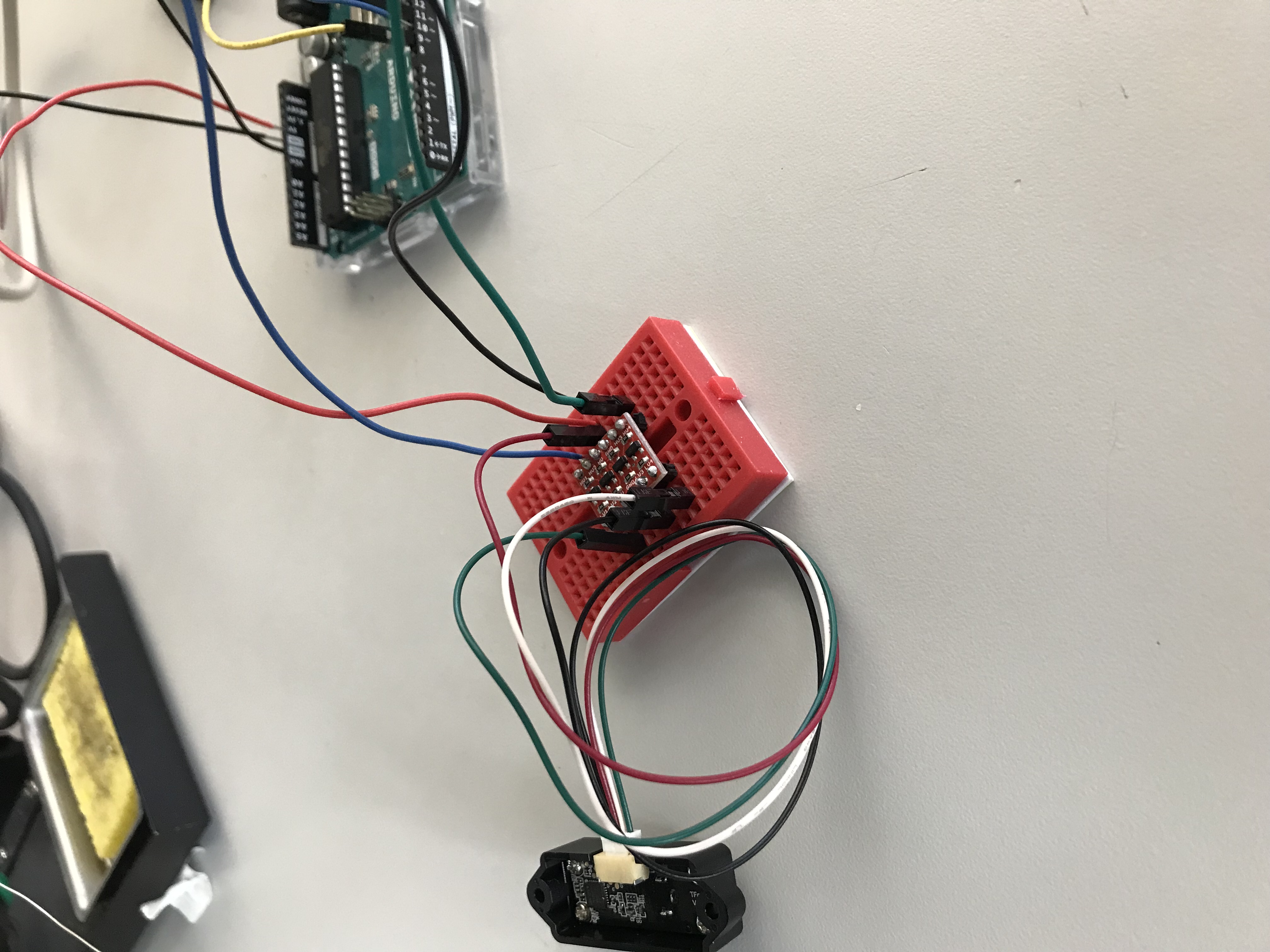

We’ve got the full setup on the tripod, and just need to find a way to attach our various circuits (lidar, Arduino) to the tripod to make it more portable. The lidar circuit is now built, and we’ve confirmed that it communicates properly with the Arduino.

Isabel’s Status Report for 4/16

This week, I couldn’t be there in person for the demo due to COVID, but I was still able to work on the project remotely thanks to my Arduino at home. I could also join Zoom during lab time, so I was able to help my teammates run the full pipeline. I’ve mostly been debugging with our team and helping come up with new ideas to improve our pipeline. I was considering setting up some sort of sound system to guide the user through the locking and pairing procedure. Also, when I got out of quarantine on Friday, I was able to solder and build the lidar circuit, and I got it running with some test Arduino code from this repository: https://github.com/TFmini/TFmini-Arduino/blob/master/TFmini_Arduino_SoftwareSerial/TFmini_Arduino_SoftwareSerial.ino. This code fixed a checksum issue I was having with the basic reading code that shipped with the TFmini library.
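For reference, the TFmini sends 9-byte frames: two 0x59 header bytes, the distance (low byte first), the signal strength, two more bytes, and a checksum equal to the low 8 bits of the sum of the first eight bytes. The following Python sketch validates frames that way and resynchronizes on a corrupted stream. It only illustrates the check and is not the code from the linked repository; the function names are hypothetical.

```python
from typing import List, Optional


def parse_tfmini_frame(frame: bytes) -> Optional[int]:
    """Return the distance (cm) in one 9-byte TFmini frame, or None if invalid."""
    if len(frame) != 9 or frame[0] != 0x59 or frame[1] != 0x59:
        return None
    # Checksum: low 8 bits of the sum of the first eight bytes.
    if (sum(frame[:8]) & 0xFF) != frame[8]:
        return None
    return frame[2] | (frame[3] << 8)  # distance is sent low byte first


def extract_distances(stream: bytes) -> List[int]:
    """Scan a raw byte stream and return the distances from all valid frames."""
    distances = []
    i = 0
    while i + 9 <= len(stream):
        distance = parse_tfmini_frame(stream[i:i + 9])
        if distance is None:
            i += 1  # not a valid frame here: resynchronize one byte at a time
        else:
            distances.append(distance)
            i += 9
    return distances
```

Skipping frames that fail the checksum, rather than trusting them, is what keeps a single corrupted byte from turning into a wildly wrong wall distance.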

With this lidar setup, I’m almost back on schedule. I hope to finish integrating the code by Monday, so that then I will be completely caught up and we’ll be thoroughly in the refinement stage for this last week.

Over the next week, I will complete any debugging or improvement patches to our calibration code, finish integrating the lidar, and help out with the user study we will be conducting in the second half of the week. I will also help with the completion of the final presentation slides.

Isabel’s Status Report for 4/10

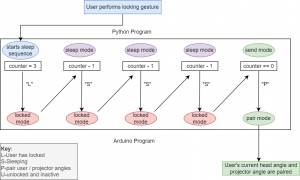

Earlier this week, before Wednesday, I completed the full new version of the calibration state machine and tested that the stages switch correctly. I also made a Python testing suite for all the mathematical functions I was using within the Arduino code. In Python, it’s much easier and faster to print out debugging output, and debugging trig and geometric conversion code can be a headache at the best of times, so I needed as much feedback as possible. On Wednesday during our practice demo, I noticed the locking mechanism happens a bit too fast for a user to interact with it properly, so I designed and implemented a mechanism within the calibration phases that adds a delay between the user locking the projector and the program actually pairing the user and projector angles. I made a diagram of this process (shown above).

Now the “L” still stands for lock and stops the motors, the “S” means the Python program is ‘sleeping’, and the “P” signals the Arduino program to pair the angles. I split this into stages to leave room for a possible addition to the Python program that counts down the seconds until pairing, so the user knows when to look at the projector.
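The L/S/P sequence can be sketched on the Python side as follows. `send` stands in for whatever writes a message to the serial port, the three-second delay is an assumed value, and the `on_tick` hook marks where the countdown mentioned above could go; all of these names are hypothetical.

```python
import time
from typing import Callable

# Assumed delay (seconds) between locking and pairing.
PAIR_DELAY_S = 3


def lock_and_pair(send: Callable[[str], None],
                  delay_s: int = PAIR_DELAY_S,
                  sleep: Callable[[float], None] = time.sleep,
                  on_tick: Callable[[int], None] = lambda remaining: None) -> None:
    """Send the L -> S -> P sequence with a delay before pairing.

    `send` is a hypothetical function that writes one message to the Arduino.
    """
    send("L")  # lock: the Arduino stops the motors
    send("S")  # the Python side is 'sleeping' while the user looks at the projector
    for remaining in range(delay_s, 0, -1):
        on_tick(remaining)  # hook for a countdown, e.g. print or play a sound
        sleep(1)
    send("P")  # tell the Arduino to pair the user and projector angles


lock_and_pair(print, delay_s=2, on_tick=lambda n: print(f"pairing in {n}..."))
```

Passing `sleep` in as a parameter makes the sequence easy to test without real waiting, e.g. with `sleep=lambda s: None`.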

Next week, I need to explain to my teammates how to set up the physical system and work the calibration program. I can’t leave my home until Friday because I’ve got COVID, and I’ve been pretty sick but have been slowly recovering. The good thing is the bulk of this is built and debugged, we just need to get the physical setup going now. Our goal is to get this ready by the Wednesday demo, and I will support this remotely in whatever way I can.

I’m still on schedule for everything except the lidar. Since I had to isolate this weekend, I couldn’t go into the lab and make the lidar circuit. I’ll have to do this next weekend.

Isabel’s Status Report for 4/2

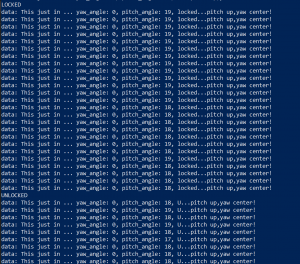

In addition to redrawing the state machine for the calibration (shown Wednesday), I’ve since been refining the linear motor movement program. Rama has been giving me her interface notes and the motor movement functions she wrote, and I’ve been incorporating those into the Arduino state machine to move the motor vertically as well as horizontally. I’ve also fixed the issue of the motor not stopping between the user’s head turns by adding a ‘center’ signal between ‘left’ and ‘right’, which sends a stop signal. Finally, I’ve integrated Olivia’s code for the locking signal and tested that it reaches the Arduino and registers correctly (‘L’ means locked, and ‘U’ means unlocked):
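A minimal sketch of the left/center/right mapping, assuming the head’s yaw arrives in degrees with positive meaning right; the deadband width and function name are hypothetical. Any yaw inside the deadband maps to ‘center’, which the Arduino would treat as stop:

```python
# Hypothetical deadband (degrees): yaw within +/- this range counts as 'center'.
CENTER_DEADBAND_DEG = 10.0


def direction_command(yaw_deg: float, deadband: float = CENTER_DEADBAND_DEG) -> str:
    """Map head yaw to a motor command: 'left', 'right', or 'center' (stop).

    The sign convention (positive yaw = right) is an assumption.
    """
    if yaw_deg > deadband:
        return "right"
    if yaw_deg < -deadband:
        return "left"
    return "center"  # between left and right: stop the motor


for yaw in [25.0, 3.0, -25.0]:
    print(yaw, direction_command(yaw))
```

Without the middle band, any small head motion flips directly between ‘left’ and ‘right’ and the motor never gets an explicit stop.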

Something Olivia and I have been considering is how to improve the accuracy of our locking gesture. Today a friend with thick glasses tested the program, and it had trouble detecting the three blinks. We might consider a hand gesture or head nod as an alternative.
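To show why the blink threshold matters, here is a minimal sketch that counts blinks as drops of an eye-openness score below a threshold. How the actual locking code measures blinks isn’t described here, so the openness score, the threshold, and the function names are all assumptions. If glasses keep the score from dropping far enough, a blink is missed entirely:

```python
from typing import Sequence


def count_blinks(eye_openness: Sequence[float], threshold: float) -> int:
    """Count blinks as transitions from open (>= threshold) to closed (< threshold)."""
    blinks = 0
    was_open = True
    for value in eye_openness:
        is_open = value >= threshold
        if was_open and not is_open:
            blinks += 1  # eye just closed: one blink
        was_open = is_open
    return blinks


def is_lock_gesture(eye_openness: Sequence[float],
                    threshold: float = 0.2,
                    required_blinks: int = 3) -> bool:
    """True if the window of openness scores contains the required number of blinks."""
    return count_blinks(eye_openness, threshold) >= required_blinks
```

For example, `count_blinks([0.3, 0.22, 0.3], 0.2)` returns 0, while the same sequence with a threshold of 0.25 returns 1.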

Over the next week I will continue testing each stage of the calibration process and keep making refinement notes (which I am tracking in a document), so we can take them into account during end-to-end user testing after this week. Some examples of these notes are:

- Send floats over the serial port rather than ints

- Consider the thresholding for the first head-movement phase

- Decrease the threshold for blink detection

And so on. My goal is to finish the testing by the end of the week, and hopefully clean up the code a little so it’ll be more readable to my teammates.

Currently I’m on schedule for the calibration, although the testing and refinement phases have been merging with each other more than expected. The lidar just arrived, and my current plan is to integrate it next weekend, after I walk my teammates through the current calibration code. This is mainly so any roadblocks with the lidar don’t jeopardize the demo, since we’ll do all the preparation for it this week.

Team Status Report for 3/26

This week, we were able to finish some of the last pieces we need for an end-to-end pipeline. The circuit for our motor-to-Arduino connection was completed, the serial port code for the calibration translation was rewritten and debugged, and our computer vision pipeline now has a non-camera display version, with a locking feature in progress. Once our members can get together on Monday, we should be able to combine our parts and begin debugging the entire pipeline, with the goal of presenting it on Wednesday.

The largest change was to the internal design of the calibration system. Instead of sending values over the serial port one at a time, the user’s pitch and yaw are now sent together in one string, which the Arduino then parses. This design requires more complex code on the Arduino side, but makes our system more efficient and less error-prone.
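As an illustration, the combined message could be built and parsed like this. The `<yaw,pitch>` layout with start/end markers, the two decimal places, and the function names are assumptions, and on the real system the parsing would happen on the Arduino rather than in Python:

```python
from typing import Tuple


def encode_angles(yaw: float, pitch: float) -> bytes:
    """Pack yaw and pitch into one message, e.g. b'<12.50,-3.25>'."""
    return f"<{yaw:.2f},{pitch:.2f}>".encode("ascii")


def decode_angles(payload: str) -> Tuple[float, float]:
    """Parse the text between the markers back into (yaw, pitch) floats."""
    yaw_text, pitch_text = payload.split(",")
    return float(yaw_text), float(pitch_text)


message = encode_angles(12.5, -3.25)
print(message)                           # b'<12.50,-3.25>'
print(decode_angles(message[1:-1].decode("ascii")))  # (12.5, -3.25)
```

With pySerial, the encoded bytes could be written with `ser.write(encode_angles(yaw, pitch))`, where `ser` is an open `serial.Serial`. Sending both angles in one framed message means the receiver can never pair a yaw from one round with a pitch from another.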

The biggest risk currently is that unresolved problems in our system may pop up as we put together the pipeline on Monday, but our team is expecting this, and we can each set aside time to debug whatever problems arise. Once we get through this process, we can begin gathering users for usability testing! Additionally, we are still waiting for our camera and lidar to arrive. The camera delivery had an issue where the camera wasn’t in the package, so we need to wait a little longer before integrating these tools. Luckily, we don’t need them to debug our full system, so this doesn’t put us behind schedule, but we may need to put in some extra hours once they arrive to learn how to read their data and feed it into our program.

Isabel’s Status Report for 3/26

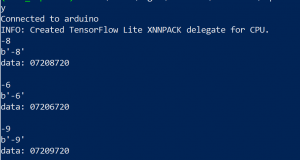

This week was also fairly productive. Last week I thought I was facing a single issue with the serial port while creating the calibration pipeline, but it turned out to be a set of issues. Once I got the serial port running, the bytes that came back were sometimes slightly out of order, and seemed to randomly be either what I expected or a disorganized mishmash of the codes I was using to signify ‘right’ (720).

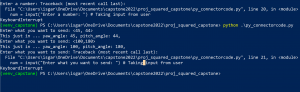

I decided to debug it more closely using a test file, ‘py_connectorcode.py’. I researched serial port bugs further, read through the Arduino forums, and found this useful resource, https://forum.arduino.cc/t/serial-input-basics/278284/2, on creating a non-blocking receive function with start and end markers to keep the data from overwriting itself. The non-blocking element is important so that our functions writing angles to the motor don’t stall while waiting for the serial port to finish. I also redesigned my code to send only one pre-compiled message per round (containing the person’s yaw and pitch), and then print only one line back from the Arduino, to clean up the messages.
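Here is a Python sketch of the start/end-marker idea from that forum thread: characters are fed in one at a time, nothing waits for more input, and a message is only returned once its end marker arrives. The class name and the 32-character limit are assumptions, and this version simply drops characters past the limit:

```python
from typing import List, Optional


class MarkerReceiver:
    """Character-at-a-time receiver for messages framed as <payload>."""

    START, END = "<", ">"

    def __init__(self, max_len: int = 32):
        self.max_len = max_len
        self.buffer: List[str] = []
        self.in_progress = False

    def feed(self, ch: str) -> Optional[str]:
        """Consume one character; return a complete payload or None. Never blocks."""
        if not self.in_progress:
            if ch == self.START:
                self.in_progress = True
                self.buffer = []
            return None  # ignore anything outside a message
        if ch == self.END:
            self.in_progress = False
            return "".join(self.buffer)
        if len(self.buffer) < self.max_len:
            self.buffer.append(ch)
        return None


receiver = MarkerReceiver()
for ch in "xx<12.50,-3.25>junk<1.00,2.00>":
    message = receiver.feed(ch)
    if message is not None:
        print(message)  # prints 12.50,-3.25 then 1.00,2.00
```

Because `feed` returns immediately, the main loop can keep updating the motor between characters, and stray bytes outside the markers (like `xx` and `junk`) never corrupt a message.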

The calibration code is complete, but it still needs to be combined with the motor code before we can test it. Our team plans to put the pieces together on Monday, so I’ll have this code in the head_connector.py file by the end of Sunday. Next week, I hope to help the rest of the team debug the code, specifically the translation, and then come up with ideas for, and most likely debug, the initial calibration process. Currently I am on schedule with the calibration process, but slightly behind with the lidar, since I planned to integrate it this week but couldn’t because it hasn’t arrived yet. I’ve already found a tutorial on how to put it together, so once it arrives I have a plan, and I can make extra time in the lab to build that circuit.

Isabel’s Status Report for 3/19

This week was fairly productive, as we are beginning to build our full pipeline, with the goal of finishing it next week. Most of my hours went toward designing a state machine for the Arduino program, which needs to switch between different parts of the code for the different calibration phases (user height, then left and right measurements). For these phases, I’m using while loops with a timeout mechanism: once the user stops moving the projector around during a calibration phase, it automatically locks in and switches to the next phase. During each phase, the user moves the projector around with their head, which signals the motor to move at a flat rate in the direction the user is facing. This mechanism may be redesigned in the future for usability, but for our preliminary tests it should integrate smoothly with the current computer vision. I’ve been commenting ‘//TUNE’ next to constants we may be able to tune once we begin testing our code. I also ordered our lidar and did some research on how I will hook it up once it comes in.
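The timeout-based phase switching can be sketched as below. This version swaps the while loops for a non-blocking `step()` function, so the caller can keep moving the motor between calls. The phase names follow the report, while the two-second idle timeout (the kind of constant marked `//TUNE`) and the class name are assumptions.

```python
from typing import Dict, Optional

PHASES = ["height", "left", "right", "done"]
IDLE_TIMEOUT_S = 2.0  # hypothetical; a candidate for //TUNE


class CalibrationStateMachine:
    def __init__(self, idle_timeout: float = IDLE_TIMEOUT_S):
        self.idle_timeout = idle_timeout
        self.phase_index = 0
        self.last_motion_time: Optional[float] = None
        self.locked: Dict[str, float] = {}  # phase -> projector angle when locked

    @property
    def phase(self) -> str:
        return PHASES[self.phase_index]

    def step(self, now: float, moving: bool, projector_angle: float) -> str:
        """Advance the machine given the current time (s) and whether the projector moved."""
        if self.phase == "done":
            return self.phase
        if moving or self.last_motion_time is None:
            self.last_motion_time = now  # still moving: restart the idle timer
        elif now - self.last_motion_time >= self.idle_timeout:
            # The user stopped moving the projector long enough: lock this phase.
            self.locked[self.phase] = projector_angle
            self.phase_index += 1
            self.last_motion_time = now  # next phase starts with a fresh timer
        return self.phase


sm = CalibrationStateMachine()
for t, moving, angle in [(0.0, True, 5.0), (2.5, False, 5.0),
                         (3.0, True, -20.0), (5.0, False, -20.0),
                         (7.0, False, 20.0)]:
    print(t, sm.step(t, moving, angle))
print(sm.locked)  # {'height': 5.0, 'left': -20.0, 'right': 20.0}
```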

Currently I am right on schedule with the calibration, as long as I keep up this pace and finish the first draft of the calibration code within the next week. I’m aiming to get at least a usable version by Wednesday. The main challenge isn’t writing the code, since I’ve already designed it; currently I’m struggling to debug the serial port, since it doesn’t seem to be reading back the angles that we’re writing over it. If I’m still stuck on that on Monday, I might ask a TA who has used pySerial if they have any input on why it isn’t working. I am slightly behind schedule for the lidar because it might take a while to come in, but my mitigation for this would be manually setting the wall distance for now.

My main deliverables for next week will be the calibration program and some communication designs for my teammates, since we’ll all be working very closely with this calibration code in the future.